System environment: Ubuntu 16.04.4

Installation procedure

Pull the container image nvidia/cuda:10.1-cudnn7-devel from Docker Hub:

    $ docker pull nvidia/cuda:10.1-cudnn7-devel
    10.1-cudnn7-devel: Pulling from nvidia/cuda
    7ddbc47eeb70: Already exists
    c1bbdc448b72: Already exists
    8c3b70e39044: Already exists
    45d437916d57: Already exists
    d8f1569ddae6: Pull complete
    85386706b020: Pull complete
    ee9b457b77d0: Pull complete
    be4f3343ecd3: Pull complete
    30b4effda4fd: Pull complete
    b398e882f414: Pull complete
    Digest: sha256:557de4ba2cb674029ffb602bed8f748d44d59bb7db9daa746ea72a102406d3ec
    Status: Downloaded newer image for nvidia/cuda:10.1-cudnn7-devel
    docker.io/nvidia/cuda:10.1-cudnn7-devel

    # Create the Dockerfile
    $ vi Dockerfile

Create a Dockerfile with the following contents:

    FROM nvidia/cuda:10.1-cudnn7-devel

    ENV DEBIAN_FRONTEND noninteractive
    RUN apt-get update && apt-get install -y \
        python3-opencv ca-certificates python3-dev git wget sudo && \
        rm -rf /var/lib/apt/lists/*

    # create a non-root user
    ARG USER_ID=1000
    RUN useradd -m --no-log-init --system --uid ${USER_ID} leaf -g sudo
    RUN echo '%sudo ALL=(ALL) NOPASSWD:ALL' >> /etc/sudoers
    USER leaf
    WORKDIR /home/leaf

    ENV PATH="/home/leaf/.local/bin:${PATH}"
    RUN wget https://bootstrap.pypa.io/get-pip.py && \
        python3 get-pip.py --user && \
        rm get-pip.py

    # install dependencies
    # See https://pytorch.org/ for other options if you use a different version of CUDA
    RUN pip install --user torch torchvision tensorboard cython -i https://pypi.tuna.tsinghua.edu.cn/simple
    RUN pip install --user 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'
    RUN pip install --user 'git+https://github.com/facebookresearch/fvcore'

    # install detectron2
    RUN git clone https://github.com/facebookresearch/detectron2 detectron2_repo
    ENV FORCE_CUDA="1"
    # This will build detectron2 for all common cuda architectures and take a lot more time,
    # because inside `docker build`, there is no way to tell which architecture will be used.
    ENV TORCH_CUDA_ARCH_LIST="Kepler;Kepler+Tesla;Maxwell;Maxwell+Tegra;Pascal;Volta;Turing"
    RUN pip install --user -e detectron2_repo

    # Set a fixed model cache directory.
    ENV FVCORE_CACHE="/tmp"
    WORKDIR /home/leaf/detectron2_repo

Build the detectron2 image from the Dockerfile.

    # -t sets the name (tag) of the image to build. The trailing . is the build
    # context, i.e. the directory that contains the Dockerfile; an absolute path
    # to that directory works as well.
    $ docker build -t detectron2 .
    ...
    Successfully installed Pillow-6.2.2 cloudpickle-1.2.2 detectron2 tabulate-0.8.6
    Removing intermediate container 34080cfc4186
     ---> 1f7f046d0540
    Step 18/19 : ENV FVCORE_CACHE="/tmp"
     ---> Running in 1e604b777530
    Removing intermediate container 1e604b777530
     ---> 5b5496be4934
    Step 19/19 : WORKDIR /home/leaf/detectron2_repo
     ---> Running in 9a38d8cb57d4
    Removing intermediate container 9a38d8cb57d4
     ---> 22e3d06ee1a9
    Successfully built 22e3d06ee1a9
    Successfully tagged detectron2:latest

    # Check that the custom image was built
    $ docker images
    REPOSITORY    TAG       IMAGE ID        CREATED          SIZE
    detectron2    latest    22e3d06ee1a9    6 minutes ago    6.74GB
    ...
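
The build step can also be scripted. The following is a minimal sketch, not part of the original post: build_image_argv is a hypothetical helper name, and passing the host UID through the USER_ID build argument declared in the Dockerfile is an assumption about what is wanted (it keeps file ownership consistent when directories are shared with the host).

```python
import os
import subprocess


def build_image_argv(tag, context=".", user_id=None):
    """Return the argument list for `docker build`; nothing is executed here."""
    argv = ["docker", "build", "-t", tag]
    if user_id is not None:
        # USER_ID is the ARG declared in the Dockerfile (default 1000).
        argv += ["--build-arg", f"USER_ID={user_id}"]
    argv.append(context)
    return argv


if __name__ == "__main__":
    # Assumption: the Dockerfile sits in the current directory.
    cmd = build_image_argv("detectron2", ".", user_id=os.getuid())
    print(" ".join(cmd))
    # Uncomment to actually run the build (requires Docker on the host):
    # subprocess.run(cmd, check=True)
```

Returning the argument list instead of running it right away makes it easy to inspect the command before starting a build that takes several minutes.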

Test whether the environment was set up successfully

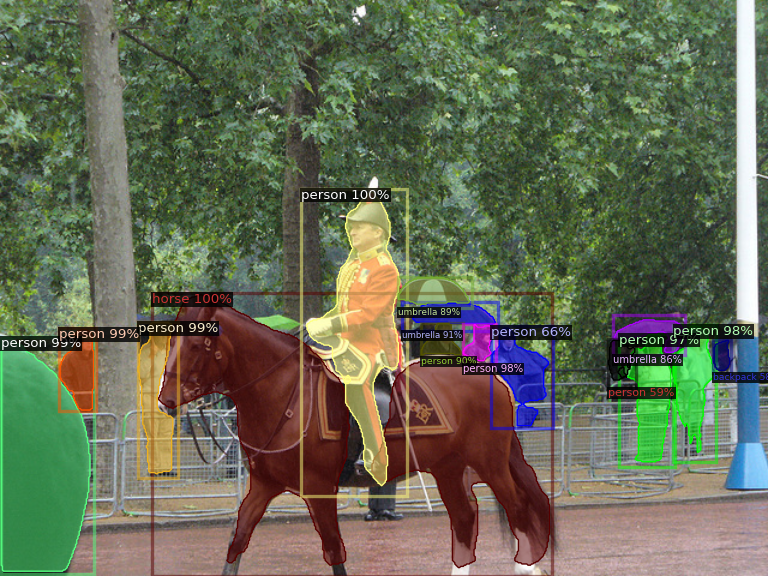

    # Server 164: nvcc reports CUDA 10.1 while nvidia-smi reports CUDA 10.2;
    # whether this mismatch causes errors was not yet known at the time of writing.
    s164@ml_2:~/shared_dir$ docker run --gpus all -it detectron2 /bin/bash
    leaf@a81cf98eac7e:~/detectron2_repo$ nvidia-smi
    Thu Jan  9 03:32:14 2020
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 430.26       Driver Version: 430.26       CUDA Version: 10.2     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  GeForce GTX 108...  Off  | 00000000:02:00.0 Off |                  N/A |
    | 25%   39C    P0    59W / 250W |      0MiB / 11178MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+
    |   1  GeForce GTX 108...  Off  | 00000000:82:00.0 Off |                  N/A |
    | 19%   32C    P0    53W / 250W |      0MiB / 11178MiB |      0%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+
    leaf@a81cf98eac7e:~/detectron2_repo$ nvcc --version
    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2019 NVIDIA Corporation
    Built on Sun_Jul_28_19:07:16_PDT_2019
    Cuda compilation tools, release 10.1, V10.1.243
    leaf@a81cf98eac7e:~/detectron2_repo$ ls
    GETTING_STARTED.md  README.md  demo                 docker     setup.py
    INSTALL.md          build      detectron2           docs       tests
    LICENSE             configs    detectron2.egg-info  projects   tools
    MODEL_ZOO.md        datasets   dev                  setup.cfg

    # Download a test image
    leaf@a81cf98eac7e:~/detectron2_repo$ wget http://images.cocodataset.org/val2017/000000439715.jpg -O input.jpg

    # Run the test
    leaf@a81cf98eac7e:~/detectron2_repo$ python3
    Python 3.6.9 (default, Nov  7 2019, 10:44:02)
    [GCC 8.3.0] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch, torchvision
    >>> import detectron2
    Failed to load OpenCL runtime
    >>> from detectron2.utils.logger import setup_logger
    >>> setup_logger()
    <Logger detectron2 (DEBUG)>
    >>>
    >>> # import some common libraries
    ... import numpy as np
    >>> import cv2
    >>> import random
    >>> from detectron2 import model_zoo
    >>> from detectron2.engine import DefaultPredictor
    >>> from detectron2.config import get_cfg
    >>> from detectron2.utils.visualizer import Visualizer
    >>> from detectron2.data import MetadataCatalog
    >>> im = cv2.imread("./input.jpg")
    >>> im.shape
    (480, 640, 3)
    >>> cfg = get_cfg()
    >>> # add project-specific config (e.g., TensorMask) here if you're not running a model in detectron2's core library
    ... cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))
    >>> cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5  # set threshold for this model
    >>> # Find a model from detectron2's model zoo. You can either use the https://dl.fbaipublicfiles.... url, or use the detectron2:// shorthand
    ... cfg.MODEL.WEIGHTS = "detectron2://COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl"
    >>> predictor = DefaultPredictor(cfg)
    >>> outputs = predictor(im)
    >>> outputs["instances"].pred_classes
    tensor([17,  0,  0,  0,  0,  0,  0,  0, 25,  0, 25, 25,  0,  0, 24],
           device='cuda:0')
    >>> outputs["instances"].pred_boxes
    Boxes(tensor([[126.6035, 244.8977, 459.8291, 480.0000],
            [251.1083, 157.8127, 338.9731, 413.6379],
            [114.8496, 268.6864, 148.2352, 398.8111],
            [  0.8217, 281.0327,  78.6072, 478.4210],
            [ 49.3954, 274.1229,  80.1545, 342.9808],
            [561.2248, 271.5816, 596.2755, 385.2552],
            [385.9072, 270.3125, 413.7130, 304.0397],
            [515.9295, 278.3744, 562.2792, 389.3802],
            [335.2409, 251.9167, 414.7491, 275.9375],
            [350.9300, 269.2060, 386.0984, 297.9081],
            [331.6292, 230.9996, 393.2759, 257.2009],
            [510.7349, 263.2656, 570.9865, 295.9194],
            [409.0841, 271.8646, 460.5582, 356.8722],
            [506.8767, 283.3257, 529.9403, 324.0392],
            [594.5663, 283.4820, 609.0577, 311.4124]], device='cuda:0'))
    >>> v = Visualizer(im[:, :, ::-1], MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)
    >>> v = v.draw_instance_predictions(outputs["instances"].to("cpu"))
    >>> v.save('pred_result.png')
    >>> exit()  # exit Python
    leaf@a81cf98eac7e:~/detectron2_repo$
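
On the version question raised at the top of the session: nvidia-smi reports the highest CUDA version the installed driver supports, while nvcc reports the version of the CUDA toolkit inside the image. As a rule of thumb, a toolkit that is not newer than the driver-supported version is the normal, supported combination. The sketch below only illustrates that comparison; the function names are hypothetical, and the two input strings are copied from the output above.

```python
import re


def toolkit_version(nvcc_output):
    """Extract (major, minor) from `nvcc --version` text, e.g. 'release 10.1'."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        raise ValueError("no 'release X.Y' found in nvcc output")
    return int(match.group(1)), int(match.group(2))


def driver_cuda_version(smi_output):
    """Extract (major, minor) from the 'CUDA Version: X.Y' field of nvidia-smi."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    if match is None:
        raise ValueError("no 'CUDA Version' found in nvidia-smi output")
    return int(match.group(1)), int(match.group(2))


def toolkit_fits_driver(nvcc_output, smi_output):
    """True when the toolkit is not newer than what the driver reports."""
    return toolkit_version(nvcc_output) <= driver_cuda_version(smi_output)


# Strings copied from the terminal session in this post.
NVCC = "Cuda compilation tools, release 10.1, V10.1.243"
SMI = "| NVIDIA-SMI 430.26 Driver Version: 430.26 CUDA Version: 10.2 |"
print(toolkit_fits_driver(NVCC, SMI))  # prints True: (10, 1) <= (10, 2)
```

The successful inference run in the session is consistent with this: toolkit 10.1 under a driver that reports 10.2 worked here.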

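Each docker run starts a new container from the image, so files downloaded or software installed in an earlier container are not present in the next one. To save the changes made inside a container as an image, use the docker commit command: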

    # First open a second terminal; keep the terminal with the running container
    # open so that its changes are still there to commit.
    # The ID of the running container above is a81cf98eac7e.
    s164@ml_2:~$ docker commit --help

    Usage:  docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]

    Create a new image from a container's changes

    Options:
      -a, --author string    Author (e.g., "John Hannibal Smith <hannibal@a-team.com>")
      -c, --change list      Apply Dockerfile instruction to the created image
      -m, --message string   Commit message
      -p, --pause            Pause container during commit (default true)

    s164@ml_2:~$ docker commit -m="vim installed" -a="s164" a81cf98eac7e detectron2
    sha256:7ff9a53a9f656fc30cf50f9b5b04ddadebfc9e57c81021ad07c0a354880e4b83

Images cannot be viewed on the server itself, so copy the image from the Docker container to the host to look at it.

    # Copy a file from the Docker container to the host
    s164@ml_2:~/shared_dir$ docker cp a81cf98eac7e:/home/leaf/detectron2_repo/pred_result.png Documents

Common errors

(1) While building the Docker image, network problems can cause the following error:

    pip._vendor.urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host='files.pythonhosted.org', port=443): Read timed out.
    The command '/bin/sh -c pip install --user 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'' returned a non-zero code: 2

Fix: run the docker build command again.
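
Rerunning by hand can be replaced by a small retry loop. This is only a sketch under stated assumptions: run_with_retries is a hypothetical helper, and the attempt count and delay are arbitrary illustrative values. Docker caches the layers that already built successfully, so each rerun resumes at the step that failed.

```python
import subprocess
import time


def run_with_retries(argv, attempts=3, delay=5.0,
                     runner=subprocess.call, sleep=time.sleep):
    """Run argv until it exits with 0 or the attempts are used up.

    Returns the exit code of the last run. `runner` and `sleep` are
    injectable so the loop can be exercised without Docker.
    """
    code = 1
    for attempt in range(1, attempts + 1):
        code = runner(argv)
        if code == 0:
            break
        if attempt < attempts:
            sleep(delay)  # give a flaky network a moment to recover
    return code


# Example call (requires Docker on the host, so it is left commented out):
# run_with_retries(["docker", "build", "-t", "detectron2", "."])
```

A fixed delay is used for simplicity; for a persistently slow mirror, raising pip's timeout inside the Dockerfile may be the better fix.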

(2) OpenCL warning:

    Failed to load OpenCL runtime

This message comes from OpenCL itself and is harmless. With opencv>=3.4 it no longer appears.

(3) Caused by starting the container without the GPU option:

    AssertionError: Found no NVIDIA driver on your system.

Fix: start the container with docker run --gpus all -it [IMAGE]
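
Before loading a model, GPU visibility can be checked from Python inside the container. The following is a minimal sketch, not from the original post; gpu_report is a hypothetical helper name, and it uses only torch.cuda.is_available, torch.cuda.device_count and torch.cuda.get_device_name.

```python
def gpu_report():
    """Describe GPU visibility as seen by PyTorch, without raising when no GPU is found."""
    try:
        import torch
    except ImportError:
        return "torch is not installed"
    if not torch.cuda.is_available():
        return "no GPU visible; was the container started with --gpus all?"
    names = [torch.cuda.get_device_name(i)
             for i in range(torch.cuda.device_count())]
    return "visible GPUs: " + ", ".join(names)


print(gpu_report())
```

Running this right after docker run gives a clearer message than the AssertionError raised later during model setup.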


Source: oschina

Link: https://my.oschina.net/u/4395108/blog/3317512