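1. First, check whether the NVIDIA discrete-GPU driver is installed:

My machine (Ubuntu 18.04.4 LTS, kernel 5.4.0-38-generic) already had driver version 440.95.01, installed with the following steps: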

sudo add-apt-repository ppa:graphics-drivers/ppa
sudo apt-get update
ubuntu-drivers devices
sudo ubuntu-drivers autoinstall
sudo reboot

You can verify the installation with nvidia-smi.

2. Choose a matching CUDA Toolkit version

You can look up the available versions in the CUDA Toolkit archive:

https://developer.nvidia.com/cuda-toolkit-archive

The 440 driver series supports up to CUDA 10.2.

Steps for installing CUDA 10.2 on Ubuntu 18.04 (deb local installer): https://developer.nvidia.com/cuda-10.2-download-archive?target_os=Linux&target_arch=x86_64&target_distro=Ubuntu&target_version=1804&target_type=deblocal


CUDA Toolkit 10.2 (driver 440):

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/cuda-ubuntu1804.pin
sudo mv cuda-ubuntu1804.pin /etc/apt/preferences.d/cuda-repository-pin-600
wget http://developer.download.nvidia.com/compute/cuda/10.2/Prod/local_installers/cuda-repo-ubuntu1804-10-2-local-10.2.89-440.33.01_1.0-1_amd64.deb
sudo dpkg -i cuda-repo-ubuntu1804-10-2-local-10.2.89-440.33.01_1.0-1_amd64.deb
sudo apt-key add /var/cuda-repo-10-2-local-10.2.89-440.33.01/7fa2af80.pub
sudo apt-get update
sudo apt-get -y install cuda

Installing CUDA 10.2 this way downgrades the display driver to 440.33.01, the driver bundled in the local repository package:

$ nvidia-smi
Sun Jul 5 08:29:28 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.33.01    Driver Version: 440.33.01    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce MX250       On   | 00000000:01:00.0 Off |                  N/A |
| N/A   47C    P0    N/A /  N/A |    622MiB /  2002MiB |      3%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|    0      2568      G   /usr/lib/xorg/Xorg                            28MiB |
|    0      3339      G   /usr/bin/gnome-shell                          47MiB |
|    0      3721      G   /usr/lib/xorg/Xorg                           216MiB |
|    0      3924      G   /usr/bin/gnome-shell                         140MiB |
|    0      4688      G   ...AAAAAAAAAAAACAAAAAAAAAA= --shared-files   185MiB |
|    0     29475      G   /opt/pycharm-2019.3/jbr/bin/java               1MiB |
+-----------------------------------------------------------------------------+

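If no discrete-GPU driver has been installed yet, I recommend installing CUDA 11 directly; CUDA 11 requires the 450 driver series. If a 440 or older driver is already installed, you must install the CUDA version that matches it; otherwise the CUDA installation fails and can also break the existing driver.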

CUDA Toolkit 10.1 Update 2 archive (Ubuntu 16.04 + driver 418):

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/cuda-ubuntu1604.pin
sudo mv cuda-ubuntu1604.pin /etc/apt/preferences.d/cuda-repository-pin-600
wget http://developer.download.nvidia.com/compute/cuda/10.1/Prod/local_installers/cuda-repo-ubuntu1604-10-1-local-10.1.243-418.87.00_1.0-1_amd64.deb
sudo dpkg -i cuda-repo-ubuntu1604-10-1-local-10.1.243-418.87.00_1.0-1_amd64.deb
sudo apt-key add /var/cuda-repo-10-1-local-10.1.243-418.87.00/7fa2af80.pub
sudo apt-get update
sudo apt-get -y install cuda

3. Set environment variables

Add the following to ~/.bashrc:

export CUDA_HOME=/usr/local/cuda-10.2
export CUDA_ROOT=$CUDA_HOME
export PATH=$CUDA_HOME/bin:$PATH
export LD_LIBRARY_PATH=$CUDA_HOME/lib64:$LD_LIBRARY_PATH
export CUDA_INC_DIR=$CUDA_HOME/include

Then reload it with source ~/.bashrc (or open a new terminal) and check that nvcc --version reports release 10.2.

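4. Testing:

Code that adds two one-dimensional arrays (run cat > cudatest1.cu, paste the code, then press Ctrl-D to save it):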

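$ cat > cudatest1.cu
#include <cuda_runtime.h>
#include <device_launch_parameters.h>
#include <stdio.h>

#define N 10

// Launched as add<<<N, 1>>>: one block per element, so blockIdx.x is the index.
__global__ void add(int *a, int *b, int *c)
{
    int tid = blockIdx.x;
    if (tid < N)
        c[tid] = a[tid] + b[tid];
}

int main()
{
    int a[N], b[N], c[N];
    int *deva, *devb, *devc;

    // Allocate memory on the GPU
    cudaMalloc((void **)&deva, N * sizeof(int));
    cudaMalloc((void **)&devb, N * sizeof(int));
    cudaMalloc((void **)&devc, N * sizeof(int));

    // Fill the input arrays on the CPU
    for (int i = 0; i < N; i++)
    {
        a[i] = -i;
        b[i] = i * i;
    }

    // Copy arrays a and b to the GPU (c is output only, so it is not copied)
    cudaMemcpy(deva, a, N * sizeof(int), cudaMemcpyHostToDevice);
    cudaMemcpy(devb, b, N * sizeof(int), cudaMemcpyHostToDevice);

    // N blocks of 1 thread each
    add<<<N, 1>>>(deva, devb, devc);

    // Report launch failures (e.g. a driver/runtime mismatch) instead of printing garbage
    cudaError_t err = cudaGetLastError();
    if (err != cudaSuccess)
    {
        printf("kernel launch failed: %s\n", cudaGetErrorString(err));
        return 1;
    }

    // Copy array c back from the GPU to the CPU (waits for the kernel to finish)
    cudaMemcpy(c, devc, N * sizeof(int), cudaMemcpyDeviceToHost);

    for (int i = 0; i < N; i++)
    {
        printf("%d+%d=%d\n", a[i], b[i], c[i]);
    }

    cudaFree(deva);
    cudaFree(devb);
    cudaFree(devc);
    return 0;
}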

Compile and run:

$ nvcc -o cudatest1 cudatest1.cu
$ ./cudatest1
0+0=0
-1+1=0
-2+4=2
-3+9=6
-4+16=12
-5+25=20
-6+36=30
-7+49=42
-8+64=56
-9+81=72

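Official sample: deviceQuery.cpp (from the CUDA samples, 1_Utilities/deviceQuery; the build command is in the comment at the top):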

/*

$ nvcc -ccbin g++ -I$CUDA_HOME/include -I$CUDA_HOME/targets/x86_64-linux/include -I$CUDA_HOME/samples/common/inc -m64 deviceQuery.cpp -o deviceQuery

*/

#include <cuda_runtime.h>

#include <helper_cuda.h>

#include <iostream>

#include <memory>

#include <string>

int *pArgc = NULL;

char **pArgv = NULL;

#if CUDART_VERSION < 5000

// CUDA-C includes

#include <cuda.h>

// This function wraps the CUDA Driver API into a template function

template <class T>

inline void getCudaAttribute(T *attribute, CUdevice_attribute device_attribute,

int device) {

CUresult error = cuDeviceGetAttribute(attribute, device_attribute, device);

if (CUDA_SUCCESS != error) {

fprintf(

stderr,

"cuSafeCallNoSync() Driver API error = %04d from file <%s>, line %i.\n",

error, __FILE__, __LINE__);

exit(EXIT_FAILURE);

}

}

#endif /* CUDART_VERSION < 5000 */

////////////////////////////////////////////////////////////////////////////////

// Program main

////////////////////////////////////////////////////////////////////////////////

int main(int argc, char **argv) {

pArgc = &argc;

pArgv = argv;

printf("%s Starting...\n\n", argv[0]);

printf(

" CUDA Device Query (Runtime API) version (CUDART static linking)\n\n");

int deviceCount = 0;

cudaError_t error_id = cudaGetDeviceCount(&deviceCount);

if (error_id != cudaSuccess) {

printf("cudaGetDeviceCount returned %d\n-> %s\n",

static_cast<int>(error_id), cudaGetErrorString(error_id));

printf("Result = FAIL\n");

exit(EXIT_FAILURE);

}

// This function call returns 0 if there are no CUDA capable devices.

if (deviceCount == 0) {

printf("There are no available device(s) that support CUDA\n");

} else {

printf("Detected %d CUDA Capable device(s)\n", deviceCount);

}

int dev, driverVersion = 0, runtimeVersion = 0;

for (dev = 0; dev < deviceCount; ++dev) {

cudaSetDevice(dev);

cudaDeviceProp deviceProp;

cudaGetDeviceProperties(&deviceProp, dev);

printf("\nDevice %d: \"%s\"\n", dev, deviceProp.name);

// Console log

cudaDriverGetVersion(&driverVersion);

cudaRuntimeGetVersion(&runtimeVersion);

printf(" CUDA Driver Version / Runtime Version %d.%d / %d.%d\n",

driverVersion / 1000, (driverVersion % 100) / 10,

runtimeVersion / 1000, (runtimeVersion % 100) / 10);

printf(" CUDA Capability Major/Minor version number: %d.%d\n",

deviceProp.major, deviceProp.minor);

char msg[256];

#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)

sprintf_s(msg, sizeof(msg),

" Total amount of global memory: %.0f MBytes "

"(%llu bytes)\n",

static_cast<float>(deviceProp.totalGlobalMem / 1048576.0f),

(unsigned long long)deviceProp.totalGlobalMem);

#else

snprintf(msg, sizeof(msg),

" Total amount of global memory: %.0f MBytes "

"(%llu bytes)\n",

static_cast<float>(deviceProp.totalGlobalMem / 1048576.0f),

(unsigned long long)deviceProp.totalGlobalMem);

#endif

printf("%s", msg);

printf(" (%2d) Multiprocessors, (%3d) CUDA Cores/MP: %d CUDA Cores\n",

deviceProp.multiProcessorCount,

_ConvertSMVer2Cores(deviceProp.major, deviceProp.minor),

_ConvertSMVer2Cores(deviceProp.major, deviceProp.minor) *

deviceProp.multiProcessorCount);

printf(

" GPU Max Clock rate: %.0f MHz (%0.2f "

"GHz)\n",

deviceProp.clockRate * 1e-3f, deviceProp.clockRate * 1e-6f);

#if CUDART_VERSION >= 5000

// This is supported in CUDA 5.0 (runtime API device properties)

printf(" Memory Clock rate: %.0f Mhz\n",

deviceProp.memoryClockRate * 1e-3f);

printf(" Memory Bus Width: %d-bit\n",

deviceProp.memoryBusWidth);

if (deviceProp.l2CacheSize) {

printf(" L2 Cache Size: %d bytes\n",

deviceProp.l2CacheSize);

}

#else

// This only available in CUDA 4.0-4.2 (but these were only exposed in the

// CUDA Driver API)

int memoryClock;

getCudaAttribute<int>(&memoryClock, CU_DEVICE_ATTRIBUTE_MEMORY_CLOCK_RATE,

dev);

printf(" Memory Clock rate: %.0f Mhz\n",

memoryClock * 1e-3f);

int memBusWidth;

getCudaAttribute<int>(&memBusWidth,

CU_DEVICE_ATTRIBUTE_GLOBAL_MEMORY_BUS_WIDTH, dev);

printf(" Memory Bus Width: %d-bit\n",

memBusWidth);

int L2CacheSize;

getCudaAttribute<int>(&L2CacheSize, CU_DEVICE_ATTRIBUTE_L2_CACHE_SIZE, dev);

if (L2CacheSize) {

printf(" L2 Cache Size: %d bytes\n",

L2CacheSize);

}

#endif

printf(

" Maximum Texture Dimension Size (x,y,z) 1D=(%d), 2D=(%d, "

"%d), 3D=(%d, %d, %d)\n",

deviceProp.maxTexture1D, deviceProp.maxTexture2D[0],

deviceProp.maxTexture2D[1], deviceProp.maxTexture3D[0],

deviceProp.maxTexture3D[1], deviceProp.maxTexture3D[2]);

printf(

" Maximum Layered 1D Texture Size, (num) layers 1D=(%d), %d layers\n",

deviceProp.maxTexture1DLayered[0], deviceProp.maxTexture1DLayered[1]);

printf(

" Maximum Layered 2D Texture Size, (num) layers 2D=(%d, %d), %d "

"layers\n",

deviceProp.maxTexture2DLayered[0], deviceProp.maxTexture2DLayered[1],

deviceProp.maxTexture2DLayered[2]);

printf(" Total amount of constant memory: %zu bytes\n",

deviceProp.totalConstMem);

printf(" Total amount of shared memory per block: %zu bytes\n",

deviceProp.sharedMemPerBlock);

printf(" Total number of registers available per block: %d\n",

deviceProp.regsPerBlock);

printf(" Warp size: %d\n",

deviceProp.warpSize);

printf(" Maximum number of threads per multiprocessor: %d\n",

deviceProp.maxThreadsPerMultiProcessor);

printf(" Maximum number of threads per block: %d\n",

deviceProp.maxThreadsPerBlock);

printf(" Max dimension size of a thread block (x,y,z): (%d, %d, %d)\n",

deviceProp.maxThreadsDim[0], deviceProp.maxThreadsDim[1],

deviceProp.maxThreadsDim[2]);

printf(" Max dimension size of a grid size (x,y,z): (%d, %d, %d)\n",

deviceProp.maxGridSize[0], deviceProp.maxGridSize[1],

deviceProp.maxGridSize[2]);

printf(" Maximum memory pitch: %zu bytes\n",

deviceProp.memPitch);

printf(" Texture alignment: %zu bytes\n",

deviceProp.textureAlignment);

printf(

" Concurrent copy and kernel execution: %s with %d copy "

"engine(s)\n",

(deviceProp.deviceOverlap ? "Yes" : "No"), deviceProp.asyncEngineCount);

printf(" Run time limit on kernels: %s\n",

deviceProp.kernelExecTimeoutEnabled ? "Yes" : "No");

printf(" Integrated GPU sharing Host Memory: %s\n",

deviceProp.integrated ? "Yes" : "No");

printf(" Support host page-locked memory mapping: %s\n",

deviceProp.canMapHostMemory ? "Yes" : "No");

printf(" Alignment requirement for Surfaces: %s\n",

deviceProp.surfaceAlignment ? "Yes" : "No");

printf(" Device has ECC support: %s\n",

deviceProp.ECCEnabled ? "Enabled" : "Disabled");

#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)

printf(" CUDA Device Driver Mode (TCC or WDDM): %s\n",

deviceProp.tccDriver ? "TCC (Tesla Compute Cluster Driver)"

: "WDDM (Windows Display Driver Model)");

#endif

printf(" Device supports Unified Addressing (UVA): %s\n",

deviceProp.unifiedAddressing ? "Yes" : "No");

printf(" Device supports Compute Preemption: %s\n",

deviceProp.computePreemptionSupported ? "Yes" : "No");

printf(" Supports Cooperative Kernel Launch: %s\n",

deviceProp.cooperativeLaunch ? "Yes" : "No");

printf(" Supports MultiDevice Co-op Kernel Launch: %s\n",

deviceProp.cooperativeMultiDeviceLaunch ? "Yes" : "No");

printf(" Device PCI Domain ID / Bus ID / location ID: %d / %d / %d\n",

deviceProp.pciDomainID, deviceProp.pciBusID, deviceProp.pciDeviceID);

const char *sComputeMode[] = {

"Default (multiple host threads can use ::cudaSetDevice() with device "

"simultaneously)",

"Exclusive (only one host thread in one process is able to use "

"::cudaSetDevice() with this device)",

"Prohibited (no host thread can use ::cudaSetDevice() with this "

"device)",

"Exclusive Process (many threads in one process is able to use "

"::cudaSetDevice() with this device)",

"Unknown",

NULL};

printf(" Compute Mode:\n");

printf(" < %s >\n", sComputeMode[deviceProp.computeMode]);

}

// If there are 2 or more GPUs, query to determine whether RDMA is supported

if (deviceCount >= 2) {

cudaDeviceProp prop[64];

int gpuid[64]; // we want to find the first two GPUs that can support P2P

int gpu_p2p_count = 0;

for (int i = 0; i < deviceCount; i++) {

checkCudaErrors(cudaGetDeviceProperties(&prop[i], i));

// Only boards based on Fermi or later can support P2P

if ((prop[i].major >= 2)

#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)

// on Windows (64-bit), the Tesla Compute Cluster driver for windows

// must be enabled to support this

&& prop[i].tccDriver

#endif

) {

// This is an array of P2P capable GPUs

gpuid[gpu_p2p_count++] = i;

}

}

// Show all the combinations of support P2P GPUs

int can_access_peer;

if (gpu_p2p_count >= 2) {

for (int i = 0; i < gpu_p2p_count; i++) {

for (int j = 0; j < gpu_p2p_count; j++) {

if (gpuid[i] == gpuid[j]) {

continue;

}

checkCudaErrors(

cudaDeviceCanAccessPeer(&can_access_peer, gpuid[i], gpuid[j]));

printf("> Peer access from %s (GPU%d) -> %s (GPU%d) : %s\n",

prop[gpuid[i]].name, gpuid[i], prop[gpuid[j]].name, gpuid[j],

can_access_peer ? "Yes" : "No");

}

}

}

}

// csv masterlog info

// *****************************

// exe and CUDA driver name

printf("\n");

std::string sProfileString = "deviceQuery, CUDA Driver = CUDART";

char cTemp[16];

// driver version

sProfileString += ", CUDA Driver Version = ";

#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)

sprintf_s(cTemp, 10, "%d.%d", driverVersion/1000, (driverVersion%100)/10);

#else

snprintf(cTemp, sizeof(cTemp), "%d.%d", driverVersion / 1000,

(driverVersion % 100) / 10);

#endif

sProfileString += cTemp;

// Runtime version

sProfileString += ", CUDA Runtime Version = ";

#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)

sprintf_s(cTemp, 10, "%d.%d", runtimeVersion/1000, (runtimeVersion%100)/10);

#else

snprintf(cTemp, sizeof(cTemp), "%d.%d", runtimeVersion / 1000,

(runtimeVersion % 100) / 10);

#endif

sProfileString += cTemp;

// Device count

sProfileString += ", NumDevs = ";

#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)

sprintf_s(cTemp, 10, "%d", deviceCount);

#else

snprintf(cTemp, sizeof(cTemp), "%d", deviceCount);

#endif

sProfileString += cTemp;

sProfileString += "\n";

printf("%s", sProfileString.c_str());

printf("Result = PASS\n");

// finish

exit(EXIT_SUCCESS);

}

Compile and run:

$ nvcc -ccbin g++ -I$CUDA_HOME/include -I$CUDA_HOME/targets/x86_64-linux/include -I$CUDA_HOME/samples/common/inc -m64 deviceQuery.cpp -o deviceQuery
$ ./deviceQuery
./deviceQuery Starting...

 CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "GeForce MX250"
  CUDA Driver Version / Runtime Version          10.2 / 10.2
  CUDA Capability Major/Minor version number:    6.1
  Total amount of global memory:                 2003 MBytes (2099904512 bytes)
  ( 3) Multiprocessors, (128) CUDA Cores/MP:     384 CUDA Cores
  GPU Max Clock rate:                            1582 MHz (1.58 GHz)
  Memory Clock rate:                             3004 Mhz
  Memory Bus Width:                              64-bit
  L2 Cache Size:                                 524288 bytes
  Maximum Texture Dimension Size (x,y,z)         1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)
  Maximum Layered 1D Texture Size, (num) layers  1D=(32768), 2048 layers
  Maximum Layered 2D Texture Size, (num) layers  2D=(32768, 32768), 2048 layers
  Total amount of constant memory:               65536 bytes
  Total amount of shared memory per block:       49152 bytes
  Total number of registers available per block: 65536
  Warp size:                                     32
  Maximum number of threads per multiprocessor:  2048
  Maximum number of threads per block:           1024
  Max dimension size of a thread block (x,y,z): (1024, 1024, 64)
  Max dimension size of a grid size    (x,y,z): (2147483647, 65535, 65535)
  Maximum memory pitch:                          2147483647 bytes
  Texture alignment:                             512 bytes
  Concurrent copy and kernel execution:          Yes with 2 copy engine(s)
  Run time limit on kernels:                     Yes
  Integrated GPU sharing Host Memory:            No
  Support host page-locked memory mapping:       Yes
  Alignment requirement for Surfaces:            Yes
  Device has ECC support:                        Disabled
  Device supports Unified Addressing (UVA):      Yes
  Device supports Compute Preemption:            Yes
  Supports Cooperative Kernel Launch:            Yes
  Supports MultiDevice Co-op Kernel Launch:      Yes
  Device PCI Domain ID / Bus ID / location ID:   0 / 1 / 0
  Compute Mode:
     < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 10.2, CUDA Runtime Version = 10.2, NumDevs = 1
Result = PASS


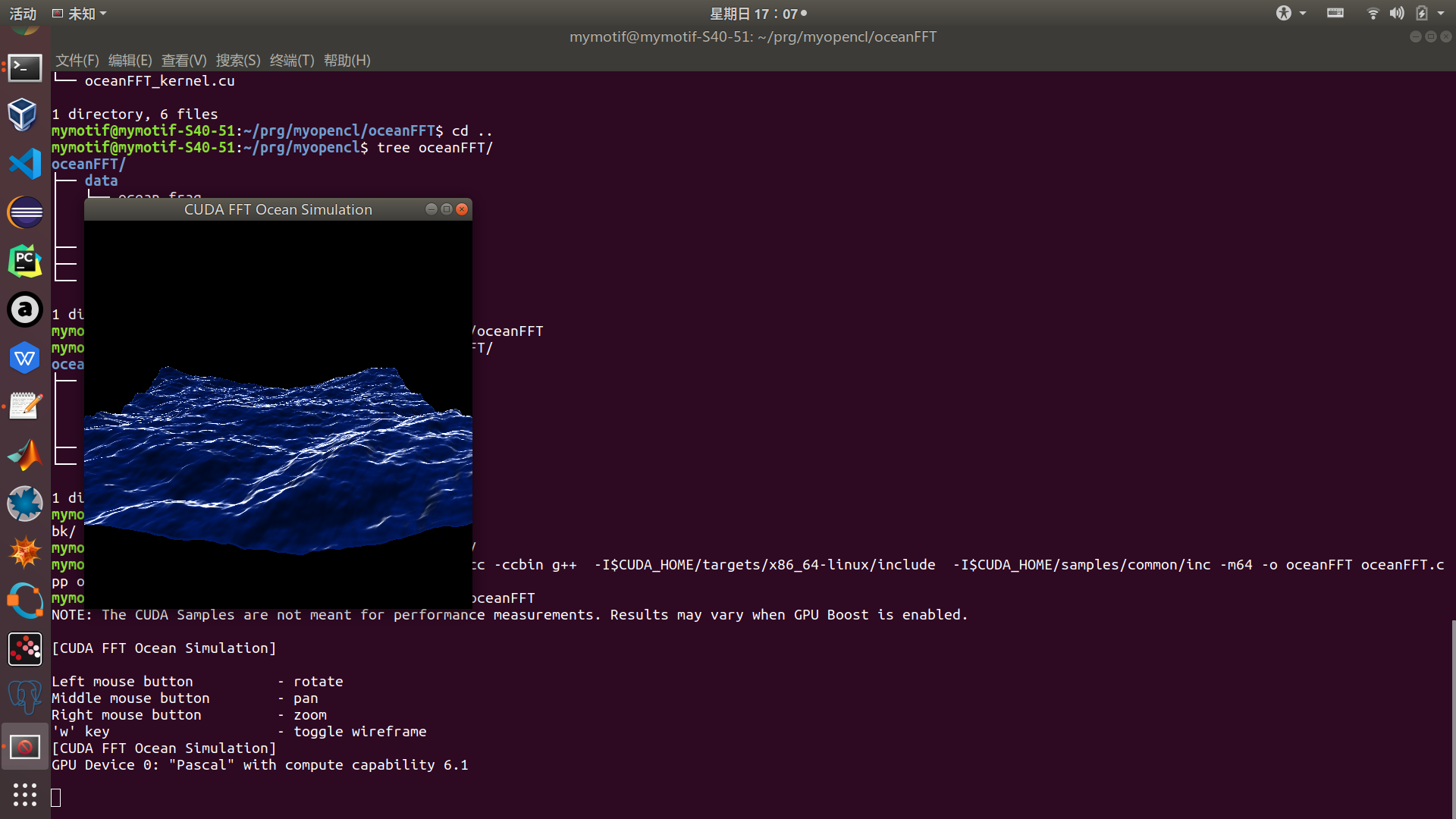

5. Simulating ocean waves with FFT (official sample: /usr/local/cuda/samples/5_Simulations/oceanFFT/)

The required files (trimmed down):

~/prg/mycuda$ tree oceanFFT/
oceanFFT/
├── data
│   ├── ocean.frag
│   ├── ocean.vert
│   └── reference.ppm
├── oceanFFT.cpp
└── oceanFFT_kernel.cu

1 directory, 5 files
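For reference, the initial spectrum that generate_h0() and phillips() in oceanFFT.cpp compute can be written out as below. This is only a restatement of that code in the notation of Tessendorf's notes (cited in the source header), not additional functionality. The symbols map to the code's variables as follows: N = meshSize, L_p = patchSize, V = windSpeed, θ_w = windDir, A = A, g = g.

$$
\mathbf{k} = \frac{2\pi}{L_p}\left(x - \frac{N}{2},\; y - \frac{N}{2}\right), \qquad x, y = 0, 1, \dots, N
$$

$$
L = \frac{V^2}{g}, \qquad
P_h(\mathbf{k}) = A\,\frac{\exp\left(-1/(kL)^2\right)}{k^4}\,\left(\hat{\mathbf{k}} \cdot \hat{\mathbf{w}}\right)^2, \qquad k = |\mathbf{k}|, \quad \hat{\mathbf{w}} = (\cos\theta_w, \sin\theta_w)
$$

$$
\tilde{h}_0(\mathbf{k}) = \frac{1}{\sqrt{2}}\left(\xi_r + i\,\xi_i\right)\sqrt{P_h(\mathbf{k})}, \qquad \xi_r, \xi_i \sim \mathcal{N}(0, 1)
$$

In the code, waves travelling against the wind (k̂·ŵ < 0) have P_h multiplied by dirDepend (0.07), the k = 0 term is forced to zero, and ξ_r, ξ_i come from gauss(), which uses the Box-Muller transform sqrt(-2 ln u1) cos(2π u2).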

Code:

oceanFFT.cpp

/*

* Copyright 1993-2015 NVIDIA Corporation. All rights reserved.

*

* Please refer to the NVIDIA end user license agreement (EULA) associated

* with this source code for terms and conditions that govern your use of

* this software. Any use, reproduction, disclosure, or distribution of

* this software and related documentation outside the terms of the EULA

* is strictly prohibited.

*

*/

/*

FFT-based Ocean simulation

based on original code by Yury Uralsky and Calvin Lin

This sample demonstrates how to use CUFFT to synthesize and

render an ocean surface in real-time.

See Jerry Tessendorf's Siggraph course notes for more details:

http://tessendorf.org/reports.html

It also serves as an example of how to generate multiple vertex

buffer streams from CUDA and render them using GLSL shaders.

$ nvcc -ccbin g++ -I$CUDA_HOME/targets/x86_64-linux/include -I$CUDA_HOME/samples/common/inc -m64 -o oceanFFT oceanFFT.cpp oceanFFT_kernel.cu -lGL -lGLU -lglut -lcufft

*/

#if defined(WIN32) || defined(_WIN32) || defined(WIN64) || defined(_WIN64)

# define WIN32_LEAN_AND_MEAN

# define NOMINMAX

# include <windows.h>

#endif

// includes

#include <stdlib.h>

#include <stdio.h>

#include <string.h>

#include <math.h>

#include <helper_gl.h>

#include <cuda_runtime.h>

#include <cuda_gl_interop.h>

#include <cufft.h>

#include <helper_cuda.h>

#include <helper_functions.h>

#include <math_constants.h>

#if defined(__APPLE__) || defined(MACOSX)

#pragma clang diagnostic ignored "-Wdeprecated-declarations"

#include <GLUT/glut.h>

#else

#include <GL/freeglut.h>

#endif

#include <rendercheck_gl.h>

const char *sSDKsample = "CUDA FFT Ocean Simulation";

#define MAX_EPSILON 0.10f

#define THRESHOLD 0.15f

#define REFRESH_DELAY 10 //ms

////////////////////////////////////////////////////////////////////////////////

// constants

unsigned int windowW = 512, windowH = 512;

const unsigned int meshSize = 256;

const unsigned int spectrumW = meshSize + 4;

const unsigned int spectrumH = meshSize + 1;

const int frameCompare = 4;

// OpenGL vertex buffers

GLuint posVertexBuffer;

GLuint heightVertexBuffer, slopeVertexBuffer;

struct cudaGraphicsResource *cuda_posVB_resource, *cuda_heightVB_resource, *cuda_slopeVB_resource; // handles OpenGL-CUDA exchange

GLuint indexBuffer;

GLuint shaderProg;

char *vertShaderPath = 0, *fragShaderPath = 0;

// mouse controls

int mouseOldX, mouseOldY;

int mouseButtons = 0;

float rotateX = 20.0f, rotateY = 0.0f;

float translateX = 0.0f, translateY = 0.0f, translateZ = -2.0f;

bool animate = true;

bool drawPoints = false;

bool wireFrame = false;

bool g_hasDouble = false;

// FFT data

cufftHandle fftPlan;

float2 *d_h0 = 0; // heightfield at time 0

float2 *h_h0 = 0;

float2 *d_ht = 0; // heightfield at time t

float2 *d_slope = 0;

// pointers to device object

float *g_hptr = NULL;

float2 *g_sptr = NULL;

// simulation parameters

const float g = 9.81f; // gravitational constant

const float A = 1e-7f; // wave scale factor

const float patchSize = 100; // patch size

float windSpeed = 100.0f;

float windDir = CUDART_PI_F/3.0f;

float dirDepend = 0.07f;

StopWatchInterface *timer = NULL;

float animTime = 0.0f;

float prevTime = 0.0f;

float animationRate = -0.001f;

// Auto-Verification Code

const int frameCheckNumber = 4;

int fpsCount = 0; // FPS count for averaging

int fpsLimit = 1; // FPS limit for sampling

unsigned int frameCount = 0;

unsigned int g_TotalErrors = 0;

////////////////////////////////////////////////////////////////////////////////

// kernels

//#include <oceanFFT_kernel.cu>

extern "C"

void cudaGenerateSpectrumKernel(float2 *d_h0,

float2 *d_ht,

unsigned int in_width,

unsigned int out_width,

unsigned int out_height,

float animTime,

float patchSize);

extern "C"

void cudaUpdateHeightmapKernel(float *d_heightMap,

float2 *d_ht,

unsigned int width,

unsigned int height,

bool autoTest);

extern "C"

void cudaCalculateSlopeKernel(float *h, float2 *slopeOut,

unsigned int width, unsigned int height);

////////////////////////////////////////////////////////////////////////////////

// forward declarations

void runAutoTest(int argc, char **argv);

void runGraphicsTest(int argc, char **argv);

// GL functionality

bool initGL(int *argc, char **argv);

void createVBO(GLuint *vbo, int size);

void deleteVBO(GLuint *vbo);

void createMeshIndexBuffer(GLuint *id, int w, int h);

void createMeshPositionVBO(GLuint *id, int w, int h);

GLuint loadGLSLProgram(const char *vertFileName, const char *fragFileName);

// rendering callbacks

void display();

void keyboard(unsigned char key, int x, int y);

void mouse(int button, int state, int x, int y);

void motion(int x, int y);

void reshape(int w, int h);

void timerEvent(int value);

// Cuda functionality

void runCuda();

void runCudaTest(char *exec_path);

void generate_h0(float2 *h0);

////////////////////////////////////////////////////////////////////////////////

// Program main

////////////////////////////////////////////////////////////////////////////////

int main(int argc, char **argv)

{

printf("NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.\n\n");

// check for command line arguments

if (checkCmdLineFlag(argc, (const char **)argv, "qatest"))

{

animate = false;

fpsLimit = frameCheckNumber;

runAutoTest(argc, argv);

}

else

{

printf("[%s]\n\n"

"Left mouse button - rotate\n"

"Middle mouse button - pan\n"

"Right mouse button - zoom\n"

"'w' key - toggle wireframe\n", sSDKsample);

runGraphicsTest(argc, argv);

}

exit(EXIT_SUCCESS);

}

////////////////////////////////////////////////////////////////////////////////

//! Run test

////////////////////////////////////////////////////////////////////////////////

void runAutoTest(int argc, char **argv)

{

printf("%s Starting...\n\n", argv[0]);

// Cuda init

int dev = findCudaDevice(argc, (const char **)argv);

cudaDeviceProp deviceProp;

checkCudaErrors(cudaGetDeviceProperties(&deviceProp, dev));

printf("Compute capability %d.%d\n", deviceProp.major, deviceProp.minor);

// create FFT plan

checkCudaErrors(cufftPlan2d(&fftPlan, meshSize, meshSize, CUFFT_C2C));

// allocate memory

int spectrumSize = spectrumW*spectrumH*sizeof(float2);

checkCudaErrors(cudaMalloc((void **)&d_h0, spectrumSize));

h_h0 = (float2 *) malloc(spectrumSize);

generate_h0(h_h0);

checkCudaErrors(cudaMemcpy(d_h0, h_h0, spectrumSize, cudaMemcpyHostToDevice));

int outputSize = meshSize*meshSize*sizeof(float2);

checkCudaErrors(cudaMalloc((void **)&d_ht, outputSize));

checkCudaErrors(cudaMalloc((void **)&d_slope, outputSize));

sdkCreateTimer(&timer);

sdkStartTimer(&timer);

prevTime = sdkGetTimerValue(&timer);

runCudaTest(argv[0]);

checkCudaErrors(cudaFree(d_ht));

checkCudaErrors(cudaFree(d_slope));

checkCudaErrors(cudaFree(d_h0));

checkCudaErrors(cufftDestroy(fftPlan));

free(h_h0);

exit(g_TotalErrors==0 ? EXIT_SUCCESS : EXIT_FAILURE);

}

////////////////////////////////////////////////////////////////////////////////

//! Run test

////////////////////////////////////////////////////////////////////////////////

void runGraphicsTest(int argc, char **argv)

{

#if defined(__linux__)

setenv ("DISPLAY", ":0", 0);

#endif

printf("[%s] ", sSDKsample);

printf("\n");

if (checkCmdLineFlag(argc, (const char **)argv, "device"))

{

printf("[%s]\n", argv[0]);

printf(" Does not explicitly support -device=n in OpenGL mode\n");

printf(" To use -device=n, the sample must be running w/o OpenGL\n\n");

printf(" > %s -device=n -qatest\n", argv[0]);

printf("exiting...\n");

exit(EXIT_SUCCESS);

}

// First initialize OpenGL context, so we can properly set the GL for CUDA.

// This is necessary in order to achieve optimal performance with OpenGL/CUDA interop.

if (false == initGL(&argc, argv))

{

return;

}

findCudaDevice(argc, (const char **)argv);

// create FFT plan

checkCudaErrors(cufftPlan2d(&fftPlan, meshSize, meshSize, CUFFT_C2C));

// allocate memory

int spectrumSize = spectrumW*spectrumH*sizeof(float2);

checkCudaErrors(cudaMalloc((void **)&d_h0, spectrumSize));

h_h0 = (float2 *) malloc(spectrumSize);

generate_h0(h_h0);

checkCudaErrors(cudaMemcpy(d_h0, h_h0, spectrumSize, cudaMemcpyHostToDevice));

int outputSize = meshSize*meshSize*sizeof(float2);

checkCudaErrors(cudaMalloc((void **)&d_ht, outputSize));

checkCudaErrors(cudaMalloc((void **)&d_slope, outputSize));

sdkCreateTimer(&timer);

sdkStartTimer(&timer);

prevTime = sdkGetTimerValue(&timer);

// create vertex buffers and register with CUDA

createVBO(&heightVertexBuffer, meshSize*meshSize*sizeof(float));

checkCudaErrors(cudaGraphicsGLRegisterBuffer(&cuda_heightVB_resource, heightVertexBuffer, cudaGraphicsMapFlagsWriteDiscard));

createVBO(&slopeVertexBuffer, outputSize);

checkCudaErrors(cudaGraphicsGLRegisterBuffer(&cuda_slopeVB_resource, slopeVertexBuffer, cudaGraphicsMapFlagsWriteDiscard));

// create vertex and index buffer for mesh

createMeshPositionVBO(&posVertexBuffer, meshSize, meshSize);

createMeshIndexBuffer(&indexBuffer, meshSize, meshSize);

runCuda();

// register callbacks

glutDisplayFunc(display);

glutKeyboardFunc(keyboard);

glutMouseFunc(mouse);

glutMotionFunc(motion);

glutReshapeFunc(reshape);

glutTimerFunc(REFRESH_DELAY, timerEvent, 0);

// start rendering mainloop

glutMainLoop();

}

float urand()

{

return rand() / (float)RAND_MAX;

}

// Generates Gaussian random number with mean 0 and standard deviation 1.

float gauss()

{

float u1 = urand();

float u2 = urand();

if (u1 < 1e-6f)

{

u1 = 1e-6f;

}

return sqrtf(-2 * logf(u1)) * cosf(2*CUDART_PI_F * u2);

}

// Phillips spectrum

// (Kx, Ky) - normalized wave vector

// Vdir - wind angle in radians

// V - wind speed

// A - constant

float phillips(float Kx, float Ky, float Vdir, float V, float A, float dir_depend)

{

float k_squared = Kx * Kx + Ky * Ky;

if (k_squared == 0.0f)

{

return 0.0f;

}

// largest possible wave from constant wind of velocity v

float L = V * V / g;

float k_x = Kx / sqrtf(k_squared);

float k_y = Ky / sqrtf(k_squared);

float w_dot_k = k_x * cosf(Vdir) + k_y * sinf(Vdir);

float phillips = A * expf(-1.0f / (k_squared * L * L)) / (k_squared * k_squared) * w_dot_k * w_dot_k;

// filter out waves moving opposite to wind

if (w_dot_k < 0.0f)

{

phillips *= dir_depend;

}

// damp out waves with very small length w << l

//float w = L / 10000;

//phillips *= expf(-k_squared * w * w);

return phillips;

}

// Generate base heightfield in frequency space

void generate_h0(float2 *h0)

{

for (unsigned int y = 0; y<=meshSize; y++)

{

for (unsigned int x = 0; x<=meshSize; x++)

{

float kx = (-(int)meshSize / 2.0f + x) * (2.0f * CUDART_PI_F / patchSize);

float ky = (-(int)meshSize / 2.0f + y) * (2.0f * CUDART_PI_F / patchSize);

float P = sqrtf(phillips(kx, ky, windDir, windSpeed, A, dirDepend));

if (kx == 0.0f && ky == 0.0f)

{

P = 0.0f;

}

//float Er = urand()*2.0f-1.0f;

//float Ei = urand()*2.0f-1.0f;

float Er = gauss();

float Ei = gauss();

float h0_re = Er * P * CUDART_SQRT_HALF_F;

float h0_im = Ei * P * CUDART_SQRT_HALF_F;

int i = y*spectrumW+x;

h0[i].x = h0_re;

h0[i].y = h0_im;

}

}

}

////////////////////////////////////////////////////////////////////////////////

//! Run the Cuda kernels

////////////////////////////////////////////////////////////////////////////////

void runCuda()

{

size_t num_bytes;

// generate wave spectrum in frequency domain

cudaGenerateSpectrumKernel(d_h0, d_ht, spectrumW, meshSize, meshSize, animTime, patchSize);

// execute inverse FFT to convert to spatial domain

checkCudaErrors(cufftExecC2C(fftPlan, d_ht, d_ht, CUFFT_INVERSE));

// update heightmap values in vertex buffer

checkCudaErrors(cudaGraphicsMapResources(1, &cuda_heightVB_resource, 0));

checkCudaErrors(cudaGraphicsResourceGetMappedPointer((void **)&g_hptr, &num_bytes, cuda_heightVB_resource));

cudaUpdateHeightmapKernel(g_hptr, d_ht, meshSize, meshSize, false);

// calculate slope for shading

checkCudaErrors(cudaGraphicsMapResources(1, &cuda_slopeVB_resource, 0));

checkCudaErrors(cudaGraphicsResourceGetMappedPointer((void **)&g_sptr, &num_bytes, cuda_slopeVB_resource));

cudaCalculateSlopeKernel(g_hptr, g_sptr, meshSize, meshSize);

checkCudaErrors(cudaGraphicsUnmapResources(1, &cuda_heightVB_resource, 0));

checkCudaErrors(cudaGraphicsUnmapResources(1, &cuda_slopeVB_resource, 0));

}

void runCudaTest(char *exec_path)

{

checkCudaErrors(cudaMalloc((void **)&g_hptr, meshSize*meshSize*sizeof(float)));

checkCudaErrors(cudaMalloc((void **)&g_sptr, meshSize*meshSize*sizeof(float2)));

// generate wave spectrum in frequency domain

cudaGenerateSpectrumKernel(d_h0, d_ht, spectrumW, meshSize, meshSize, animTime, patchSize);

// execute inverse FFT to convert to spatial domain

checkCudaErrors(cufftExecC2C(fftPlan, d_ht, d_ht, CUFFT_INVERSE));

// update heightmap values

cudaUpdateHeightmapKernel(g_hptr, d_ht, meshSize, meshSize, true);

{

float *hptr = (float *)malloc(meshSize*meshSize*sizeof(float));

cudaMemcpy((void *)hptr, (void *)g_hptr, meshSize*meshSize*sizeof(float), cudaMemcpyDeviceToHost);

sdkDumpBin((void *)hptr, meshSize*meshSize*sizeof(float), "spatialDomain.bin");

if (!sdkCompareBin2BinFloat("spatialDomain.bin", "ref_spatialDomain.bin", meshSize*meshSize,

MAX_EPSILON, THRESHOLD, exec_path))

{

g_TotalErrors++;

}

free(hptr);

}

// calculate slope for shading

cudaCalculateSlopeKernel(g_hptr, g_sptr, meshSize, meshSize);

{

float2 *sptr = (float2 *)malloc(meshSize*meshSize*sizeof(float2));

cudaMemcpy((void *)sptr, (void *)g_sptr, meshSize*meshSize*sizeof(float2), cudaMemcpyDeviceToHost);

sdkDumpBin(sptr, meshSize*meshSize*sizeof(float2), "slopeShading.bin");

if (!sdkCompareBin2BinFloat("slopeShading.bin", "ref_slopeShading.bin", meshSize*meshSize*2,

MAX_EPSILON, THRESHOLD, exec_path))

{

g_TotalErrors++;

}

free(sptr);

}

checkCudaErrors(cudaFree(g_hptr));

checkCudaErrors(cudaFree(g_sptr));

}

//void computeFPS()

//{

// frameCount++;

// fpsCount++;

//

// if (fpsCount == fpsLimit) {

// fpsCount = 0;

// }

//}

////////////////////////////////////////////////////////////////////////////////

//! Display callback

////////////////////////////////////////////////////////////////////////////////

void display()

{

// run CUDA kernel to generate vertex positions

if (animate)

{

runCuda();

}

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

// set view matrix

glMatrixMode(GL_MODELVIEW);

glLoadIdentity();

glTranslatef(translateX, translateY, translateZ);

glRotatef(rotateX, 1.0, 0.0, 0.0);

glRotatef(rotateY, 0.0, 1.0, 0.0);

// render from the vbo

glBindBuffer(GL_ARRAY_BUFFER, posVertexBuffer);

glVertexPointer(4, GL_FLOAT, 0, 0);

glEnableClientState(GL_VERTEX_ARRAY);

glBindBuffer(GL_ARRAY_BUFFER, heightVertexBuffer);

glClientActiveTexture(GL_TEXTURE0);

glTexCoordPointer(1, GL_FLOAT, 0, 0);

glEnableClientState(GL_TEXTURE_COORD_ARRAY);

glBindBuffer(GL_ARRAY_BUFFER, slopeVertexBuffer);

glClientActiveTexture(GL_TEXTURE1);

glTexCoordPointer(2, GL_FLOAT, 0, 0);

glEnableClientState(GL_TEXTURE_COORD_ARRAY);

glUseProgram(shaderProg);

// Set default uniform variables parameters for the vertex shader

GLuint uniHeightScale, uniChopiness, uniSize;

uniHeightScale = glGetUniformLocation(shaderProg, "heightScale");

glUniform1f(uniHeightScale, 0.5f);

uniChopiness = glGetUniformLocation(shaderProg, "chopiness");

glUniform1f(uniChopiness, 1.0f);

uniSize = glGetUniformLocation(shaderProg, "size");

glUniform2f(uniSize, (float) meshSize, (float) meshSize);

// Set default uniform variables parameters for the pixel shader

GLuint uniDeepColor, uniShallowColor, uniSkyColor, uniLightDir;

uniDeepColor = glGetUniformLocation(shaderProg, "deepColor");

glUniform4f(uniDeepColor, 0.0f, 0.1f, 0.4f, 1.0f);

uniShallowColor = glGetUniformLocation(shaderProg, "shallowColor");

glUniform4f(uniShallowColor, 0.1f, 0.3f, 0.3f, 1.0f);

uniSkyColor = glGetUniformLocation(shaderProg, "skyColor");

glUniform4f(uniSkyColor, 1.0f, 1.0f, 1.0f, 1.0f);

uniLightDir = glGetUniformLocation(shaderProg, "lightDir");

glUniform3f(uniLightDir, 0.0f, 1.0f, 0.0f);

// end of uniform settings

glColor3f(1.0, 1.0, 1.0);

if (drawPoints)

{

glDrawArrays(GL_POINTS, 0, meshSize * meshSize);

}

else

{

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indexBuffer);

glPolygonMode(GL_FRONT_AND_BACK, wireFrame ? GL_LINE : GL_FILL);

glDrawElements(GL_TRIANGLE_STRIP, ((meshSize*2)+2)*(meshSize-1), GL_UNSIGNED_INT, 0);

glPolygonMode(GL_FRONT_AND_BACK, GL_FILL);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, 0);

}

glDisableClientState(GL_VERTEX_ARRAY);

glClientActiveTexture(GL_TEXTURE0);

glDisableClientState(GL_TEXTURE_COORD_ARRAY);

glClientActiveTexture(GL_TEXTURE1);

glDisableClientState(GL_TEXTURE_COORD_ARRAY);

glUseProgram(0);

glutSwapBuffers();

//computeFPS();

}

void timerEvent(int value)

{

float time = sdkGetTimerValue(&timer);

if (animate)

{

animTime += (time - prevTime) * animationRate;

}

glutPostRedisplay();

prevTime = time;

glutTimerFunc(REFRESH_DELAY, timerEvent, 0);

}

void cleanup()

{

sdkDeleteTimer(&timer);

checkCudaErrors(cudaGraphicsUnregisterResource(cuda_heightVB_resource));

checkCudaErrors(cudaGraphicsUnregisterResource(cuda_slopeVB_resource));

deleteVBO(&posVertexBuffer);

deleteVBO(&heightVertexBuffer);

deleteVBO(&slopeVertexBuffer);

checkCudaErrors(cudaFree(d_h0));

checkCudaErrors(cudaFree(d_slope));

checkCudaErrors(cudaFree(d_ht));

free(h_h0);

cufftDestroy(fftPlan);

}

////////////////////////////////////////////////////////////////////////////////

//! Keyboard events handler

////////////////////////////////////////////////////////////////////////////////

void keyboard(unsigned char key, int /*x*/, int /*y*/)

{

switch (key)

{

case (27) :

cleanup();

exit(EXIT_SUCCESS);

case 'w':

wireFrame = !wireFrame;

break;

case 'p':

drawPoints = !drawPoints;

break;

case ' ':

animate = !animate;

break;

}

}

////////////////////////////////////////////////////////////////////////////////

//! Mouse event handlers

////////////////////////////////////////////////////////////////////////////////

void mouse(int button, int state, int x, int y)

{

if (state == GLUT_DOWN)

{

mouseButtons |= 1<<button;

}

else if (state == GLUT_UP)

{

mouseButtons = 0;

}

mouseOldX = x;

mouseOldY = y;

glutPostRedisplay();

}

void motion(int x, int y)

{

float dx, dy;

dx = (float)(x - mouseOldX);

dy = (float)(y - mouseOldY);

if (mouseButtons == 1)

{

rotateX += dy * 0.2f;

rotateY += dx * 0.2f;

}

else if (mouseButtons == 2)

{

translateX += dx * 0.01f;

translateY -= dy * 0.01f;

}

else if (mouseButtons == 4)

{

translateZ += dy * 0.01f;

}

mouseOldX = x;

mouseOldY = y;

}

void reshape(int w, int h)

{

glViewport(0, 0, w, h);

glMatrixMode(GL_PROJECTION);

glLoadIdentity();

gluPerspective(60.0, (double) w / (double) h, 0.1, 10.0);

windowW = w;

windowH = h;

}

////////////////////////////////////////////////////////////////////////////////

//! Initialize GL

////////////////////////////////////////////////////////////////////////////////

bool initGL(int *argc, char **argv)

{

// Create GL context

glutInit(argc, argv);

glutInitDisplayMode(GLUT_RGBA | GLUT_DOUBLE | GLUT_DEPTH);

glutInitWindowSize(windowW, windowH);

glutCreateWindow("CUDA FFT Ocean Simulation");

vertShaderPath = sdkFindFilePath("ocean.vert", argv[0]);

fragShaderPath = sdkFindFilePath("ocean.frag", argv[0]);

if (vertShaderPath == NULL || fragShaderPath == NULL)

{

fprintf(stderr, "Error unable to find GLSL vertex and fragment shaders!\n");

exit(EXIT_FAILURE);

}

// initialize necessary OpenGL extensions

if (! isGLVersionSupported(2,0))

{

fprintf(stderr, "ERROR: Support for necessary OpenGL extensions missing.\n");

fflush(stderr);

return false;

}

if (!areGLExtensionsSupported("GL_ARB_vertex_buffer_object GL_ARB_pixel_buffer_object"))

{

fprintf(stderr, "Error: failed to get minimal extensions for demo\n");

fprintf(stderr, "This sample requires:\n");

fprintf(stderr, " OpenGL version 1.5\n");

fprintf(stderr, " GL_ARB_vertex_buffer_object\n");

fprintf(stderr, " GL_ARB_pixel_buffer_object\n");

cleanup();

exit(EXIT_FAILURE);

}

// default initialization

glClearColor(0.0, 0.0, 0.0, 1.0);

glEnable(GL_DEPTH_TEST);

// load shader

shaderProg = loadGLSLProgram(vertShaderPath, fragShaderPath);

SDK_CHECK_ERROR_GL();

return true;

}

////////////////////////////////////////////////////////////////////////////////

//! Create VBO

////////////////////////////////////////////////////////////////////////////////

void createVBO(GLuint *vbo, int size)

{

// create buffer object

glGenBuffers(1, vbo);

glBindBuffer(GL_ARRAY_BUFFER, *vbo);

glBufferData(GL_ARRAY_BUFFER, size, 0, GL_DYNAMIC_DRAW);

glBindBuffer(GL_ARRAY_BUFFER, 0);

SDK_CHECK_ERROR_GL();

}

////////////////////////////////////////////////////////////////////////////////

//! Delete VBO

////////////////////////////////////////////////////////////////////////////////

void deleteVBO(GLuint *vbo)

{

glDeleteBuffers(1, vbo);

*vbo = 0;

}

// create index buffer for rendering quad mesh

void createMeshIndexBuffer(GLuint *id, int w, int h)

{

int size = ((w*2)+2)*(h-1)*sizeof(GLuint);

// create index buffer

glGenBuffers(1, id);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, *id);

glBufferData(GL_ELEMENT_ARRAY_BUFFER, size, 0, GL_STATIC_DRAW);

// fill with indices for rendering mesh as triangle strips

GLuint *indices = (GLuint *) glMapBuffer(GL_ELEMENT_ARRAY_BUFFER, GL_WRITE_ONLY);

if (!indices)

{

return;

}

for (int y=0; y<h-1; y++)

{

for (int x=0; x<w; x++)

{

*indices++ = y*w+x;

*indices++ = (y+1)*w+x;

}

// start new strip with degenerate triangle

*indices++ = (y+1)*w+(w-1);

*indices++ = (y+1)*w;

}

glUnmapBuffer(GL_ELEMENT_ARRAY_BUFFER);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, 0);

}

// create fixed vertex buffer to store mesh vertices

void createMeshPositionVBO(GLuint *id, int w, int h)

{

createVBO(id, w*h*4*sizeof(float));

glBindBuffer(GL_ARRAY_BUFFER, *id);

float *pos = (float *) glMapBuffer(GL_ARRAY_BUFFER, GL_WRITE_ONLY);

if (!pos)

{

return;

}

for (int y=0; y<h; y++)

{

for (int x=0; x<w; x++)

{

float u = x / (float)(w-1);

float v = y / (float)(h-1);

*pos++ = u*2.0f-1.0f;

*pos++ = 0.0f;

*pos++ = v*2.0f-1.0f;

*pos++ = 1.0f;

}

}

glUnmapBuffer(GL_ARRAY_BUFFER);

glBindBuffer(GL_ARRAY_BUFFER, 0);

}

// Attach shader to a program

int attachShader(GLuint prg, GLenum type, const char *name)

{

GLuint shader;

FILE *fp;

int size, compiled;

char *src;

fp = fopen(name, "rb");

if (!fp)

{

return 0;

}

fseek(fp, 0, SEEK_END);

size = ftell(fp);

src = (char *)malloc(size);

fseek(fp, 0, SEEK_SET);

fread(src, sizeof(char), size, fp);

fclose(fp);

shader = glCreateShader(type);

glShaderSource(shader, 1, (const char **)&src, (const GLint *)&size);

// glShaderSource copies the source string, so the buffer can be freed here (this also avoids leaking it on the error path)

free(src);

glCompileShader(shader);

glGetShaderiv(shader, GL_COMPILE_STATUS, (GLint *)&compiled);

if (!compiled)

{

char log[2048];

int len;

glGetShaderInfoLog(shader, 2048, (GLsizei *)&len, log);

printf("Info log: %s\n", log);

glDeleteShader(shader);

return 0;

}

glAttachShader(prg, shader);

glDeleteShader(shader);

return 1;

}

// Create shader program from vertex shader and fragment shader files

GLuint loadGLSLProgram(const char *vertFileName, const char *fragFileName)

{

GLint linked;

GLuint program;

program = glCreateProgram();

if (!attachShader(program, GL_VERTEX_SHADER, vertFileName))

{

glDeleteProgram(program);

fprintf(stderr, "Couldn't attach vertex shader from file %s\n", vertFileName);

return 0;

}

if (!attachShader(program, GL_FRAGMENT_SHADER, fragFileName))

{

glDeleteProgram(program);

fprintf(stderr, "Couldn't attach fragment shader from file %s\n", fragFileName);

return 0;

}

glLinkProgram(program);

glGetProgramiv(program, GL_LINK_STATUS, &linked);

if (!linked)

{

char temp[256];

// read the link log before deleting the program object

glGetProgramInfoLog(program, 256, 0, temp);

fprintf(stderr, "Failed to link program: %s\n", temp);

glDeleteProgram(program);

return 0;

}

return program;

}
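createMeshIndexBuffer() above lays the whole grid out as one long GL_TRIANGLE_STRIP. The host-only sketch below reproduces the same index pattern in a std::vector (buildStripIndices is a hypothetical name, not part of the sample, and it uses unsigned int in place of GLuint) so you can check that the index count matches the ((meshSize*2)+2)*(meshSize-1) passed to glDrawElements in display(). Each row of quads contributes 2*w indices plus two repeated indices that form zero-area (degenerate) triangles joining it to the next row; since every row adds an even number of indices, the strip's alternating winding stays consistent from row to row.

```cpp
#include <cassert>
#include <vector>

// Sketch: CPU version of the index layout written by createMeshIndexBuffer().
std::vector<unsigned int> buildStripIndices(int w, int h)
{
    std::vector<unsigned int> indices;
    indices.reserve(((w * 2) + 2) * (h - 1));
    for (int y = 0; y < h - 1; y++) {
        for (int x = 0; x < w; x++) {
            indices.push_back(y * w + x);        // vertex on row y
            indices.push_back((y + 1) * w + x);  // vertex directly below it on row y+1
        }
        // degenerate triangles: repeat the last vertex of this strip
        // and the first vertex of row y+1 to jump to the next row
        indices.push_back((y + 1) * w + (w - 1));
        indices.push_back((y + 1) * w);
    }
    return indices;
}
```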

oceanFFT_kernel.cu

/*

* Copyright 1993-2015 NVIDIA Corporation. All rights reserved.

*

* Please refer to the NVIDIA end user license agreement (EULA) associated

* with this source code for terms and conditions that govern your use of

* this software. Any use, reproduction, disclosure, or distribution of

* this software and related documentation outside the terms of the EULA

* is strictly prohibited.

*

*/

///////////////////////////////////////////////////////////////////////////////

#include <cufft.h>

#include <math_constants.h>

//Round a / b to nearest higher integer value

int cuda_iDivUp(int a, int b)

{

return (a + (b - 1)) / b;

}

// complex math functions

__device__

float2 conjugate(float2 arg)

{

return make_float2(arg.x, -arg.y);

}

__device__

float2 complex_exp(float arg)

{

return make_float2(cosf(arg), sinf(arg));

}

__device__

float2 complex_add(float2 a, float2 b)

{

return make_float2(a.x + b.x, a.y + b.y);

}

__device__

float2 complex_mult(float2 ab, float2 cd)

{

return make_float2(ab.x * cd.x - ab.y * cd.y, ab.x * cd.y + ab.y * cd.x);

}

// generate wave heightfield at time t based on initial heightfield and dispersion relationship

__global__ void generateSpectrumKernel(float2 *h0,

float2 *ht,

unsigned int in_width,

unsigned int out_width,

unsigned int out_height,

float t,

float patchSize)

{

unsigned int x = blockIdx.x*blockDim.x + threadIdx.x;

unsigned int y = blockIdx.y*blockDim.y + threadIdx.y;

unsigned int in_index = y*in_width+x;

unsigned int in_mindex = (out_height - y)*in_width + (out_width - x); // mirrored

unsigned int out_index = y*out_width+x;

// calculate wave vector

float2 k;

k.x = (-(int)out_width / 2.0f + x) * (2.0f * CUDART_PI_F / patchSize);

k.y = (-(int)out_width / 2.0f + y) * (2.0f * CUDART_PI_F / patchSize);

// calculate dispersion w(k)

float k_len = sqrtf(k.x*k.x + k.y*k.y);

float w = sqrtf(9.81f * k_len);

if ((x < out_width) && (y < out_height))

{

float2 h0_k = h0[in_index];

float2 h0_mk = h0[in_mindex];

// output frequency-space complex values

ht[out_index] = complex_add(complex_mult(h0_k, complex_exp(w * t)), complex_mult(conjugate(h0_mk), complex_exp(-w * t)));

//ht[out_index] = h0_k;

}

}

// update height map values based on output of FFT

__global__ void updateHeightmapKernel(float *heightMap,

float2 *ht,

unsigned int width)

{

unsigned int x = blockIdx.x*blockDim.x + threadIdx.x;

unsigned int y = blockIdx.y*blockDim.y + threadIdx.y;

unsigned int i = y*width+x;

// cos(pi * (m1 + m2))

float sign_correction = ((x + y) & 0x01) ? -1.0f : 1.0f;

heightMap[i] = ht[i].x * sign_correction;

}

// update height map values based on output of FFT

__global__ void updateHeightmapKernel_y(float *heightMap,

float2 *ht,

unsigned int width)

{

unsigned int x = blockIdx.x*blockDim.x + threadIdx.x;

unsigned int y = blockIdx.y*blockDim.y + threadIdx.y;

unsigned int i = y*width+x;

// cos(pi * (m1 + m2))

float sign_correction = ((x + y) & 0x01) ? -1.0f : 1.0f;

heightMap[i] = ht[i].y * sign_correction;

}

// generate slope by partial differences in spatial domain

__global__ void calculateSlopeKernel(float *h, float2 *slopeOut, unsigned int width, unsigned int height)

{

unsigned int x = blockIdx.x*blockDim.x + threadIdx.x;

unsigned int y = blockIdx.y*blockDim.y + threadIdx.y;

unsigned int i = y*width+x;

float2 slope = make_float2(0.0f, 0.0f);

if ((x > 0) && (y > 0) && (x < width-1) && (y < height-1))

{

slope.x = h[i+1] - h[i-1];

slope.y = h[i+width] - h[i-width];

}

slopeOut[i] = slope;

}

// wrapper functions

extern "C"

void cudaGenerateSpectrumKernel(float2 *d_h0,

float2 *d_ht,

unsigned int in_width,

unsigned int out_width,

unsigned int out_height,

float animTime,

float patchSize)

{

dim3 block(8, 8, 1);

dim3 grid(cuda_iDivUp(out_width, block.x), cuda_iDivUp(out_height, block.y), 1);

generateSpectrumKernel<<<grid, block>>>(d_h0, d_ht, in_width, out_width, out_height, animTime, patchSize);

}

extern "C"

void cudaUpdateHeightmapKernel(float *d_heightMap,

float2 *d_ht,

unsigned int width,

unsigned int height,

bool autoTest)

{

dim3 block(8, 8, 1);

dim3 grid(cuda_iDivUp(width, block.x), cuda_iDivUp(height, block.y), 1);

if (autoTest)

{

updateHeightmapKernel_y<<<grid, block>>>(d_heightMap, d_ht, width);

}

else

{

updateHeightmapKernel<<<grid, block>>>(d_heightMap, d_ht, width);

}

}

extern "C"

void cudaCalculateSlopeKernel(float *hptr, float2 *slopeOut,

unsigned int width, unsigned int height)

{

dim3 block(8, 8, 1);

dim3 grid2(cuda_iDivUp(width, block.x), cuda_iDivUp(height, block.y), 1);

calculateSlopeKernel<<<grid2, block>>>(hptr, slopeOut, width, height);

}
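For each wave vector k, generateSpectrumKernel uses the deep-water dispersion relation ω(k) = sqrt(g|k|) and combines h0(k) with h0(-k) as h(k,t) = h0(k)·e^{iωt} + conj(h0(-k))·e^{-iωt}. The sketch below restates that per-element math on the host with std::complex<float> (spectrumAt is a hypothetical helper, not from the sample). A useful sanity check is that h(-k,t) = conj(h(k,t)): this Hermitian symmetry is the property that makes the inverse transform of the spectrum real-valued.

```cpp
#include <cassert>
#include <cmath>
#include <complex>

using cplx = std::complex<float>;

// Sketch: host-side version of the per-element math in generateSpectrumKernel.
// h0k = h0(k), h0mk = h0(-k), (kx, ky) = wave vector, t = time.
cplx spectrumAt(cplx h0k, cplx h0mk, float kx, float ky, float t)
{
    const float g = 9.81f;                       // gravity, same constant as the kernel
    float kLen = std::sqrt(kx * kx + ky * ky);
    float w = std::sqrt(g * kLen);               // dispersion w(k) = sqrt(g * |k|)
    cplx ePos = std::polar(1.0f, w * t);         // e^{+iwt}, like complex_exp(w * t)
    cplx eNeg = std::polar(1.0f, -w * t);        // e^{-iwt}, like complex_exp(-w * t)
    return h0k * ePos + std::conj(h0mk) * eNeg;  // h(k, t)
}
```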

data/ocean.vert

// GLSL vertex shader

varying vec3 eyeSpacePos;

varying vec3 worldSpaceNormal;

varying vec3 eyeSpaceNormal;

uniform float heightScale; // = 0.5;

uniform float chopiness; // = 1.0;

uniform vec2 size; // = vec2(256.0, 256.0);

void main()

{

float height = gl_MultiTexCoord0.x;

vec2 slope = gl_MultiTexCoord1.xy;

// calculate surface normal from slope for shading

vec3 normal = normalize(cross( vec3(0.0, slope.y*heightScale, 2.0 / size.x), vec3(2.0 / size.y, slope.x*heightScale, 0.0)));

worldSpaceNormal = normal;

// calculate position and transform to homogeneous clip space

vec4 pos = vec4(gl_Vertex.x, height * heightScale, gl_Vertex.z, 1.0);

gl_Position = gl_ModelViewProjectionMatrix * pos;

eyeSpacePos = (gl_ModelViewMatrix * pos).xyz;

eyeSpaceNormal = (gl_NormalMatrix * normal).xyz;

}
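The vertex shader builds the surface normal from the two slope components by crossing two tangent-like vectors, vec3(0, slope.y*heightScale, 2/size.x) and vec3(2/size.y, slope.x*heightScale, 0). The CPU sketch below mirrors those exact expressions (Vec3, cross, normalize and normalFromSlope are hypothetical helpers written for illustration) so the result can be checked outside GLSL: a flat patch gives the straight-up normal (0, 1, 0), and a height that rises along +x tilts the normal toward -x.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 cross(Vec3 a, Vec3 b)
{
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

Vec3 normalize(Vec3 v)
{
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

// Sketch: same vector expressions as ocean.vert.
// slopeX/slopeY come from calculateSlopeKernel; sizeX/sizeY match the "size" uniform.
Vec3 normalFromSlope(float slopeX, float slopeY, float heightScale, float sizeX, float sizeY)
{
    Vec3 alongZ = { 0.0f, slopeY * heightScale, 2.0f / sizeX };
    Vec3 alongX = { 2.0f / sizeY, slopeX * heightScale, 0.0f };
    return normalize(cross(alongZ, alongX));
}
```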

data/ocean.frag

// GLSL fragment shader

varying vec3 eyeSpacePos;

varying vec3 worldSpaceNormal;

varying vec3 eyeSpaceNormal;

uniform vec4 deepColor;

uniform vec4 shallowColor;

uniform vec4 skyColor;

uniform vec3 lightDir;

void main()

{

vec3 eyeVector = normalize(eyeSpacePos);

vec3 eyeSpaceNormalVector = normalize(eyeSpaceNormal);

vec3 worldSpaceNormalVector = normalize(worldSpaceNormal);

float facing = max(0.0, dot(eyeSpaceNormalVector, -eyeVector));

float fresnel = pow(1.0 - facing, 5.0); // Fresnel approximation

float diffuse = max(0.0, dot(worldSpaceNormalVector, lightDir));

// vec4 waterColor = mix(shallowColor, deepColor, facing);

vec4 waterColor = deepColor;

// gl_FragColor = gl_Color;

// gl_FragColor = vec4(fresnel);

// gl_FragColor = vec4(diffuse);

// gl_FragColor = waterColor;

// gl_FragColor = waterColor*diffuse;

gl_FragColor = waterColor*diffuse + skyColor*fresnel;

}
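The fragment shader's "Fresnel approximation" is the (1 - cos θ)^5 term of Schlick's approximation with F0 = 0, where cos θ is the clamped dot product between the eye-space normal and the view direction; the final color is waterColor*diffuse + skyColor*fresnel. The scalar sketch below reproduces that arithmetic on the CPU (Color and shadeWater are hypothetical names; the two dot products are passed in precomputed): looking straight down gives pure water color, while grazing angles give pure sky color.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Color { float r, g, b, a; };

// Sketch: scalar version of gl_FragColor = waterColor*diffuse + skyColor*fresnel.
// facingDot  = dot(eyeSpaceNormal, -eyeVector)
// diffuseDot = dot(worldSpaceNormal, lightDir)
Color shadeWater(float facingDot, float diffuseDot, Color water, Color sky)
{
    float facing = std::max(0.0f, facingDot);
    float fresnel = std::pow(1.0f - facing, 5.0f);  // Schlick term with F0 = 0
    float diffuse = std::max(0.0f, diffuseDot);
    return { water.r * diffuse + sky.r * fresnel,
             water.g * diffuse + sky.g * fresnel,
             water.b * diffuse + sky.b * fresnel,
             water.a * diffuse + sky.a * fresnel };
}
```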

data/reference.ppm

Compile and run:

$ nvcc -ccbin g++ -I$CUDA_HOME/targets/x86_64-linux/include -I$CUDA_HOME/samples/common/inc -m64 -o oceanFFT oceanFFT.cpp oceanFFT_kernel.cu -lGL -lGLU -lglut -lcufft

$ ./oceanFFT

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

[CUDA FFT Ocean Simulation]

Left mouse button - rotate

Middle mouse button - pan

Right mouse button - zoom

'w' key - toggle wireframe

[CUDA FFT Ocean Simulation]

GPU Device 0: "Pascal" with compute capability 6.1
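A note on why updateHeightmapKernel multiplies each FFT output by cos(π(m1+m2)) = (-1)^(x+y): generateSpectrumKernel stores frequency (k - N/2) in array slot k (see k.x = (-(int)out_width / 2.0f + x) * ...), while cuFFT's inverse transform treats slot k as frequency k. Shifting every frequency by N/2 multiplies output sample n by e^{-iπn} = (-1)^n. The 1-D, double-precision sketch below checks that identity numerically with naive O(N²) DFTs (all function names are hypothetical; the unnormalized e^{+i...} convention matches CUFFT_INVERSE). In 2-D the two per-axis factors multiply to (-1)^(x+y).

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <vector>

using cd = std::complex<double>;

// Plain unnormalized inverse DFT: treats slot k as frequency k.
std::vector<cd> inverseDftSlots(const std::vector<cd>& H)
{
    const int N = (int)H.size();
    const double PI = std::acos(-1.0);
    std::vector<cd> out(N);
    for (int n = 0; n < N; ++n)
        for (int k = 0; k < N; ++k)
            out[n] += H[k] * std::polar(1.0, 2.0 * PI * k * n / N);
    return out;
}

// Reference: inverse DFT using the true centered frequency (k - N/2) of slot k.
std::vector<cd> inverseDftCentered(const std::vector<cd>& H)
{
    const int N = (int)H.size();  // assumed even, like meshSize
    const double PI = std::acos(-1.0);
    std::vector<cd> out(N);
    for (int n = 0; n < N; ++n)
        for (int k = 0; k < N; ++k)
            out[n] += H[k] * std::polar(1.0, 2.0 * PI * (k - N / 2) * n / N);
    return out;
}

// Same correction as the kernel, in 1-D: multiply sample n by (-1)^n.
std::vector<cd> signCorrect(std::vector<cd> x)
{
    for (size_t n = 0; n < x.size(); ++n)
        if (n & 1) x[n] = -x[n];
    return x;
}
```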

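6. Install cuDNN:

Download the matching .deb packages from the official site: https://developer.nvidia.com/rdp/cudnn-download

You need to register an account before downloading. For Ubuntu 18.04 + CUDA 10.2 there are three packages: libcudnn7_7.6.5.32-1+cuda10.2_amd64.deb, libcudnn7-doc_7.6.5.32-1+cuda10.2_amd64.deb and libcudnn7-dev_7.6.5.32-1+cuda10.2_amd64.deb. Then install them: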

sudo dpkg -i *.deb
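After installing, it is worth confirming that the header you compile against and the library loaded at run time report the same version; mnistCUDNN prints both (cudnnGetVersion() and CUDNN_VERSION). In cuDNN 7 the number is encoded as MAJOR*1000 + MINOR*100 + PATCHLEVEL, so 7605 means 7.6.5. The sketch below only decodes that integer (decodeCudnnVersion is a hypothetical helper); the commented lines indicate where the real values would come from once cudnn.h is available.

```cpp
#include <cassert>

struct CudnnVersion { int major, minor, patch; };

// Sketch: decode a cuDNN 7 version number, assuming MAJOR*1000 + MINOR*100 + PATCHLEVEL.
CudnnVersion decodeCudnnVersion(int v)
{
    return { v / 1000, (v % 1000) / 100, v % 100 };
}

// With cuDNN installed, the two inputs would be:
//   #include <cudnn.h>
//   size_t runtime = cudnnGetVersion();  // version of the loaded libcudnn.so
//   int header = CUDNN_VERSION;          // version of the cudnn.h used at compile time
```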

Test:

$ cat cudnntest1.cu

/*

nvcc -ccbin g++ -m64 -o cudnntest1 cudnntest1.cu -lcudnn

*/

#include <iomanip>

#include <iostream>

#include <cstdlib>

#include <vector>

#include <stdio.h>

#include <cuda.h>

#include <cudnn_v7.h>

#define CUDA_CALL(f) { \

cudaError_t err = (f); \

if (err != cudaSuccess) { \

std::cout \

<< " Error occurred: " << err << std::endl; \

std::exit(1); \

} \

}

#define CUDNN_CALL(f) { \

cudnnStatus_t err = (f); \

if (err != CUDNN_STATUS_SUCCESS) { \

std::cout \

<< " Error occurred: " << err << std::endl; \

std::exit(1); \

} \

}

__global__ void dev_const(float *px, float k) {

int tid = threadIdx.x + blockIdx.x * blockDim.x;

px[tid] = k;

}

__global__ void dev_iota(float *px) {

int tid = threadIdx.x + blockIdx.x * blockDim.x;

px[tid] = tid;

}

void print(const float *data, int n, int c, int h, int w) {

std::vector<float> buffer(1 << 20);

CUDA_CALL(cudaMemcpy(

buffer.data(), data,

n * c * h * w * sizeof(float),

cudaMemcpyDeviceToHost));

int a = 0;

for (int i = 0; i < n; ++i) {

for (int j = 0; j < c; ++j) {

std::cout << "n=" << i << ", c=" << j << ":" << std::endl;

for (int k = 0; k < h; ++k) {

for (int l = 0; l < w; ++l) {

std::cout << std::setw(4) << std::right << buffer[a];

++a;

}

std::cout << std::endl;

}

}

}

std::cout << std::endl;

}

int main() {

cudnnHandle_t cudnn;

CUDNN_CALL(cudnnCreate(&cudnn));

// input

const int in_n = 1;

const int in_c = 1;

const int in_h = 5;

const int in_w = 5;

std::cout << "in_n: " << in_n << std::endl;

std::cout << "in_c: " << in_c << std::endl;

std::cout << "in_h: " << in_h << std::endl;

std::cout << "in_w: " << in_w << std::endl;

std::cout << std::endl;

cudnnTensorDescriptor_t in_desc;

CUDNN_CALL(cudnnCreateTensorDescriptor(&in_desc));

CUDNN_CALL(cudnnSetTensor4dDescriptor(

in_desc, CUDNN_TENSOR_NCHW, CUDNN_DATA_FLOAT,

in_n, in_c, in_h, in_w));

float *in_data;

CUDA_CALL(cudaMalloc(

&in_data, in_n * in_c * in_h * in_w * sizeof(float)));

// filter

const int filt_k = 1;

const int filt_c = 1;

const int filt_h = 2;

const int filt_w = 2;

std::cout << "filt_k: " << filt_k << std::endl;

std::cout << "filt_c: " << filt_c << std::endl;

std::cout << "filt_h: " << filt_h << std::endl;

std::cout << "filt_w: " << filt_w << std::endl;

std::cout << std::endl;

cudnnFilterDescriptor_t filt_desc;

CUDNN_CALL(cudnnCreateFilterDescriptor(&filt_desc));

CUDNN_CALL(cudnnSetFilter4dDescriptor(

filt_desc, CUDNN_DATA_FLOAT, CUDNN_TENSOR_NCHW,

filt_k, filt_c, filt_h, filt_w));

float *filt_data;

CUDA_CALL(cudaMalloc(

&filt_data, filt_k * filt_c * filt_h * filt_w * sizeof(float)));

// convolution

const int pad_h = 1;

const int pad_w = 1;

const int str_h = 1;

const int str_w = 1;

const int dil_h = 1;

const int dil_w = 1;

std::cout << "pad_h: " << pad_h << std::endl;

std::cout << "pad_w: " << pad_w << std::endl;

std::cout << "str_h: " << str_h << std::endl;

std::cout << "str_w: " << str_w << std::endl;

std::cout << "dil_h: " << dil_h << std::endl;

std::cout << "dil_w: " << dil_w << std::endl;

std::cout << std::endl;

cudnnConvolutionDescriptor_t conv_desc;

CUDNN_CALL(cudnnCreateConvolutionDescriptor(&conv_desc));

CUDNN_CALL(cudnnSetConvolution2dDescriptor(

conv_desc,

pad_h, pad_w, str_h, str_w, dil_h, dil_w,

CUDNN_CONVOLUTION, CUDNN_DATA_FLOAT));

// output

int out_n;

int out_c;

int out_h;

int out_w;

CUDNN_CALL(cudnnGetConvolution2dForwardOutputDim(

conv_desc, in_desc, filt_desc,

&out_n, &out_c, &out_h, &out_w));

std::cout << "out_n: " << out_n << std::endl;

std::cout << "out_c: " << out_c << std::endl;

std::cout << "out_h: " << out_h << std::endl;

std::cout << "out_w: " << out_w << std::endl;

std::cout << std::endl;

cudnnTensorDescriptor_t out_desc;

CUDNN_CALL(cudnnCreateTensorDescriptor(&out_desc));

CUDNN_CALL(cudnnSetTensor4dDescriptor(

out_desc, CUDNN_TENSOR_NCHW, CUDNN_DATA_FLOAT,

out_n, out_c, out_h, out_w));

float *out_data;

CUDA_CALL(cudaMalloc(

&out_data, out_n * out_c * out_h * out_w * sizeof(float)));

// algorithm

cudnnConvolutionFwdAlgo_t algo;

CUDNN_CALL(cudnnGetConvolutionForwardAlgorithm(

cudnn,

in_desc, filt_desc, conv_desc, out_desc,

CUDNN_CONVOLUTION_FWD_PREFER_FASTEST, 0, &algo));

std::cout << "Convolution algorithm: " << algo << std::endl;

std::cout << std::endl;

// workspace

size_t ws_size;

CUDNN_CALL(cudnnGetConvolutionForwardWorkspaceSize(

cudnn, in_desc, filt_desc, conv_desc, out_desc, algo, &ws_size));

float *ws_data;

CUDA_CALL(cudaMalloc(&ws_data, ws_size));

std::cout << "Workspace size: " << ws_size << std::endl;

std::cout << std::endl;

// perform

float alpha = 1.f;

float beta = 0.f;

dev_iota<<<in_w * in_h, in_n * in_c>>>(in_data);

dev_const<<<filt_w * filt_h, filt_k * filt_c>>>(filt_data, 1.f);

CUDNN_CALL(cudnnConvolutionForward(

cudnn,

&alpha, in_desc, in_data, filt_desc, filt_data,

conv_desc, algo, ws_data, ws_size,

&beta, out_desc, out_data));

// results

std::cout << "in_data:" << std::endl;

print(in_data, in_n, in_c, in_h, in_w);

std::cout << "filt_data:" << std::endl;

print(filt_data, filt_k, filt_c, filt_h, filt_w);

std::cout << "out_data:" << std::endl;

print(out_data, out_n, out_c, out_h, out_w);

// finalizing

CUDA_CALL(cudaFree(ws_data));

CUDA_CALL(cudaFree(out_data));

CUDNN_CALL(cudnnDestroyTensorDescriptor(out_desc));

CUDNN_CALL(cudnnDestroyConvolutionDescriptor(conv_desc));

CUDA_CALL(cudaFree(filt_data));

CUDNN_CALL(cudnnDestroyFilterDescriptor(filt_desc));

CUDA_CALL(cudaFree(in_data));

CUDNN_CALL(cudnnDestroyTensorDescriptor(in_desc));

CUDNN_CALL(cudnnDestroy(cudnn));

return 0;

}

$ nvcc -ccbin g++ -m64 -o cudnntest1 cudnntest1.cu -lcudnn

$ ./cudnntest1

in_n: 1

in_c: 1

in_h: 5

in_w: 5

filt_k: 1

filt_c: 1

filt_h: 2

filt_w: 2

pad_h: 1

pad_w: 1

str_h: 1

str_w: 1

dil_h: 1

dil_w: 1

out_n: 1

out_c: 1

out_h: 6

out_w: 6

Convolution algorithm: 1

Workspace size: 224

in_data:

n=0, c=0:

0 1 2 3 4

5 6 7 8 9

10 11 12 13 14

15 16 17 18 19

20 21 22 23 24

filt_data:

n=0, c=0:

1 1

1 1

out_data:

n=0, c=0:

0 1 3 5 7 4

5 12 16 20 24 13

15 32 36 40 44 23

25 52 56 60 64 33

35 72 76 80 84 43

20 41 43 45 47 24
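The output above can be checked on the CPU. The output size follows the formula documented for cudnnGetConvolution2dForwardOutputDim, out = 1 + (in + 2*pad - ((filter - 1)*dilation + 1)) / stride, which gives 1 + (5 + 2 - 2)/1 = 6. The sketch below is a naive single-channel reference (N = C = K = 1, dilation 1; function names are hypothetical). With flip = true it flips the kernel as CUDNN_CONVOLUTION does; for the all-ones 2x2 filter used here flipping makes no difference, so it also matches CUDNN_CROSS_CORRELATION.

```cpp
#include <cassert>
#include <vector>

// Output size per spatial dimension (formula from the cuDNN docs).
int convOutDim(int in, int filter, int pad, int stride, int dilation)
{
    return 1 + (in + 2 * pad - ((filter - 1) * dilation + 1)) / stride;
}

// Sketch: naive 2-D convolution with zero padding, one image, one channel, one filter.
std::vector<float> conv2dRef(const std::vector<float>& in, int ih, int iw,
                             const std::vector<float>& filt, int fh, int fw,
                             int pad, int stride, bool flip, int& oh, int& ow)
{
    oh = convOutDim(ih, fh, pad, stride, 1);
    ow = convOutDim(iw, fw, pad, stride, 1);
    std::vector<float> out(oh * ow, 0.0f);
    for (int y = 0; y < oh; ++y)
        for (int x = 0; x < ow; ++x) {
            float acc = 0.0f;
            for (int i = 0; i < fh; ++i)
                for (int j = 0; j < fw; ++j) {
                    int iy = y * stride - pad + i;
                    int ix = x * stride - pad + j;
                    if (iy < 0 || iy >= ih || ix < 0 || ix >= iw) continue;  // padded zero
                    int fi = flip ? fh - 1 - i : i;  // CUDNN_CONVOLUTION flips the kernel
                    int fj = flip ? fw - 1 - j : j;
                    acc += in[iy * iw + ix] * filt[fi * fw + fj];
                }
            out[y * ow + x] = acc;
        }
    return out;
}
```

Feeding it the same 0..24 input (what dev_iota writes) and an all-ones filter reproduces the out_data table row by row.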

Official sample mnistCUDNN:

$ dpkg -L libcudnn7-doc

/.

/usr

/usr/share

/usr/share/doc

/usr/share/doc/libcudnn7-doc

/usr/share/doc/libcudnn7-doc/changelog.Debian.gz

/usr/share/doc/libcudnn7-doc/copyright

/usr/share/doc/libcudnn7-doc/cuDNN-Developer-Guide.pdf

/usr/share/doc/libcudnn7-doc/cuDNN-SLA.pdf

/usr/share/lintian

/usr/share/lintian/overrides

/usr/share/lintian/overrides/libcudnn7-doc

/usr/src

/usr/src/cudnn_samples_v7

/usr/src/cudnn_samples_v7/RNN

/usr/src/cudnn_samples_v7/RNN/Makefile

/usr/src/cudnn_samples_v7/RNN/RNN_example.cu

/usr/src/cudnn_samples_v7/RNN/compare.py

.............

$ cp -Rf /usr/src/cudnn_samples_v7 .

$ cd cudnn_samples_v7/mnistCUDNN/

~/cudnn_samples_v7/mnistCUDNN$ make

Linking agains cublasLt = true

CUDA VERSION: 10020

TARGET ARCH: x86_64

HOST_ARCH: x86_64

TARGET OS: linux

SMS: 30 35 50 53 60 61 62 70 72 75

/usr/local/cuda/bin/nvcc -ccbin g++ -I/usr/local/cuda/include -I/usr/local/cuda/include -IFreeImage/include -m64 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_53,code=sm_53 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_62,code=sm_62 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_72,code=sm_72 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_75,code=compute_75 -o fp16_dev.o -c fp16_dev.cu

g++ -I/usr/local/cuda/include -I/usr/local/cuda/include -IFreeImage/include -o fp16_emu.o -c fp16_emu.cpp

g++ -I/usr/local/cuda/include -I/usr/local/cuda/include -IFreeImage/include -o mnistCUDNN.o -c mnistCUDNN.cpp

/usr/local/cuda/bin/nvcc -ccbin g++ -m64 -gencode arch=compute_30,code=sm_30 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_53,code=sm_53 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_62,code=sm_62 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_72,code=sm_72 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_75,code=compute_75 -o mnistCUDNN fp16_dev.o fp16_emu.o mnistCUDNN.o -I/usr/local/cuda/include -I/usr/local/cuda/include -IFreeImage/include -L/usr/local/cuda/lib64 -L/usr/local/cuda/lib64 -lcublasLt -LFreeImage/lib/linux/x86_64 -LFreeImage/lib/linux -lcudart -lcublas -lcudnn -lfreeimage -lstdc++ -lm

~/cudnn_samples_v7/mnistCUDNN$ ./mnistCUDNN

cudnnGetVersion() : 7605 , CUDNN_VERSION from cudnn.h : 7605 (7.6.5)

Host compiler version : GCC 8.4.0

There are 1 CUDA capable devices on your machine :

device 0 : sms 3 Capabilities 6.1, SmClock 1582.0 Mhz, MemSize (Mb) 2002, MemClock 3004.0 Mhz, Ecc=0, boardGroupID=0

Using device 0

Testing single precision

Loading image data/one_28x28.pgm

Performing forward propagation ...

Testing cudnnGetConvolutionForwardAlgorithm ...

Fastest algorithm is Algo 1

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 0.015360 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 0.030720 time requiring 3464 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 0.033792 time requiring 57600 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: 0.151552 time requiring 207360 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 0.157664 time requiring 203008 memory

Resulting weights from Softmax:

0.0000000 0.9999399 0.0000000 0.0000000 0.0000561 0.0000000 0.0000012 0.0000017 0.0000010 0.0000000

Loading image data/three_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000000 0.0000000 0.9999288 0.0000000 0.0000711 0.0000000 0.0000000 0.0000000 0.0000000

Loading image data/five_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000008 0.0000000 0.0000002 0.0000000 0.9999820 0.0000154 0.0000000 0.0000012 0.0000006

Result of classification: 1 3 5

Test passed!

Testing half precision (math in single precision)

Loading image data/one_28x28.pgm

Performing forward propagation ...

Testing cudnnGetConvolutionForwardAlgorithm ...

Fastest algorithm is Algo 1

Testing cudnnFindConvolutionForwardAlgorithm ...

^^^^ CUDNN_STATUS_SUCCESS for Algo 0: 0.015360 time requiring 0 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 2: 0.038912 time requiring 28800 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 1: 0.131072 time requiring 3464 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 5: 0.147456 time requiring 203008 memory

^^^^ CUDNN_STATUS_SUCCESS for Algo 4: 0.260096 time requiring 207360 memory

Resulting weights from Softmax:

0.0000001 1.0000000 0.0000001 0.0000000 0.0000563 0.0000001 0.0000012 0.0000017 0.0000010 0.0000001

Loading image data/three_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000000 0.0000000 1.0000000 0.0000000 0.0000714 0.0000000 0.0000000 0.0000000 0.0000000

Loading image data/five_28x28.pgm

Performing forward propagation ...

Resulting weights from Softmax:

0.0000000 0.0000008 0.0000000 0.0000002 0.0000000 1.0000000 0.0000154 0.0000000 0.0000012 0.0000006

Result of classification: 1 3 5

Test passed!
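The "Result of classification: 1 3 5" line corresponds, for each image, to the index of the largest of the ten softmax weights printed above it. The sketch below shows a numerically stable softmax (subtracting the largest logit before exp()) and the argmax step; the function names are hypothetical, and how mnistCUDNN implements these internally is not shown here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Sketch: numerically stable softmax over one vector of logits.
std::vector<float> softmax(const std::vector<float>& logits)
{
    float m = *std::max_element(logits.begin(), logits.end());
    std::vector<float> p(logits.size());
    float sum = 0.0f;
    for (size_t i = 0; i < logits.size(); ++i) {
        p[i] = std::exp(logits[i] - m);  // shift by the max to avoid overflow
        sum += p[i];
    }
    for (float& v : p) v /= sum;
    return p;
}

// Index of the largest probability, i.e. the predicted digit 0-9.
int argmaxClass(const std::vector<float>& probs)
{
    return (int)(std::max_element(probs.begin(), probs.end()) - probs.begin());
}
```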

Example of a convolution with cuDNN (cuDNN + OpenCV):

/*

nvcc -ccbin g++ -m64 -o cudnnOpencvt1 cudnnOpencvt1.cu -lcudnn `pkg-config --libs opencv`

$ rm cudnnOpencvt1 cudnn-out.png

Original article: https://blog.csdn.net/qq_39790992/article/details/90486348

*/

#include <cudnn.h>

#include <cassert>

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace cv;

using namespace std;

#define checkCUDNN(expression) \

{ \

cudnnStatus_t status = (expression); \

if (status != CUDNN_STATUS_SUCCESS) { \

std::cerr << "Error on line " << __LINE__ << ": " \

<< cudnnGetErrorString(status) << std::endl; \

std::exit(EXIT_FAILURE); \

} \

}

cv::Mat load_image(const char* image_path) {

cv::Mat image = cv::imread(image_path, cv::IMREAD_COLOR); // cv::IMREAD_COLOR also compiles with OpenCV 4, where CV_LOAD_IMAGE_COLOR is gone

image.convertTo(image, CV_32FC3);

cv::normalize(image, image, 0, 1, cv::NORM_MINMAX);

return image;

}

void save_image(const char* output_filename,

float* buffer,

int height,

int width) {

cv::Mat output_image(height, width, CV_32FC3, buffer);

// Make negative values zero.

cv::threshold(output_image,

output_image,

/*threshold=*/0,

/*maxval=*/0,

cv::THRESH_TOZERO);

cv::normalize(output_image, output_image, 0.0, 255.0, cv::NORM_MINMAX);

output_image.convertTo(output_image, CV_8UC3);

cv::imwrite(output_filename, output_image);

}

int main(int argc, const char* argv[]) {

int gpu_id = 0;

std::cerr << "GPU: " << gpu_id << std::endl;

bool with_sigmoid = false;

std::cerr << "With sigmoid: " << std::boolalpha << with_sigmoid << std::endl;

cv::Mat image = load_image("1.jpg");

cudaSetDevice(gpu_id);

cudnnHandle_t cudnn;

cudnnCreate(&cudnn);

// Descriptor for the input tensor

cudnnTensorDescriptor_t input_descriptor;

checkCUDNN(cudnnCreateTensorDescriptor(&input_descriptor));

checkCUDNN(cudnnSetTensor4dDescriptor(input_descriptor,

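/*format=*/CUDNN_TENSOR_NHWC, // note: NHWC. TensorFlow prefers to store tensors in NHWC (the channel is the fastest-varying dimension, i.e. interleaved BGR), while some other frameworks prefer channels first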

/*dataType=*/CUDNN_DATA_FLOAT,

/*batch_size=*/1,

/*channels=*/3,

/*image_height=*/image.rows,

/*image_width=*/image.cols));

// Descriptor for the convolution kernel (shape, format)

cudnnFilterDescriptor_t kernel_descriptor;

checkCUDNN(cudnnCreateFilterDescriptor(&kernel_descriptor));

checkCUDNN(cudnnSetFilter4dDescriptor(kernel_descriptor,

/*dataType=*/CUDNN_DATA_FLOAT,

/*format=*/CUDNN_TENSOR_NCHW, // note: NCHW here

/*out_channels=*/3,

/*in_channels=*/3,

/*kernel_height=*/3,

/*kernel_width=*/3));

// Descriptor for the convolution operation (stride, padding, etc.)

cudnnConvolutionDescriptor_t convolution_descriptor;

checkCUDNN(cudnnCreateConvolutionDescriptor(&convolution_descriptor));

checkCUDNN(cudnnSetConvolution2dDescriptor(convolution_descriptor,

/*pad_height=*/1,

/*pad_width=*/1,

/*vertical_stride=*/1,

/*horizontal_stride=*/1,

/*dilation_height=*/1,

/*dilation_width=*/1,

/*mode=*/CUDNN_CROSS_CORRELATION, // CUDNN_CONVOLUTION

/*computeType=*/CUDNN_DATA_FLOAT));

// Compute the dimensions of the image after the convolution

int batch_size{ 0 }, channels{ 0 }, height{ 0 }, width{ 0 };

checkCUDNN(cudnnGetConvolution2dForwardOutputDim(convolution_descriptor,

input_descriptor,

kernel_descriptor,

&batch_size,

&channels,

&height,

&width));

std::cerr << "Output Image: " << height << " x " << width << " x " << channels

<< std::endl;

// Descriptor for the convolution output tensor

cudnnTensorDescriptor_t output_descriptor;

checkCUDNN(cudnnCreateTensorDescriptor(&output_descriptor));

checkCUDNN(cudnnSetTensor4dDescriptor(output_descriptor,

/*format=*/CUDNN_TENSOR_NHWC,

/*dataType=*/CUDNN_DATA_FLOAT,

/*batch_size=*/1,

/*channels=*/3,

/*image_height=*/image.rows,

/*image_width=*/image.cols));

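// Choose the convolution algorithm, for example:

// CUDNN_CONVOLUTION_FWD_ALGO_GEMM: models the convolution as an explicit matrix multiplication,

// CUDNN_CONVOLUTION_FWD_ALGO_FFT: performs the convolution with a fast Fourier transform (FFT), or

// CUDNN_CONVOLUTION_FWD_ALGO_WINOGRAD: performs the convolution with the Winograd algorithm.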

cudnnConvolutionFwdAlgo_t convolution_algorithm;

checkCUDNN(

cudnnGetConvolutionForwardAlgorithm(cudnn,

input_descriptor,

kernel_descriptor,

convolution_descriptor,

output_descriptor,

CUDNN_CONVOLUTION_FWD_PREFER_FASTEST, // or CUDNN_CONVOLUTION_FWD_SPECIFY_WORKSPACE_LIMIT (when memory is limited; then set memoryLimitInBytes to a non-zero value)

/*memoryLimitInBytes=*/0,

&convolution_algorithm));

// Query how much workspace memory cuDNN needs for this operation

size_t workspace_bytes{ 0 };

checkCUDNN(cudnnGetConvolutionForwardWorkspaceSize(cudnn,

input_descriptor,

kernel_descriptor,

convolution_descriptor,

output_descriptor,

convolution_algorithm,

&workspace_bytes));

std::cerr << "Workspace size: " << (workspace_bytes / 1048576.0) << "MB"

<< std::endl;

assert(workspace_bytes > 0);

// *************************************************************************
// Allocate the workspace; its size comes from cudnnGetConvolutionForwardWorkspaceSize
void* d_workspace{ nullptr };
if (workspace_bytes > 0) {
  cudaMalloc(&d_workspace, workspace_bytes);
}

// Buffer size from the dimensions returned by cudnnGetConvolution2dForwardOutputDim.
// With a 3x3 filter, padding 1 and stride 1 the output is the same size as the input,
// so one byte count serves both buffers.
int image_bytes = batch_size * channels * height * width * sizeof(float);

float* d_input{ nullptr };
cudaMalloc(&d_input, image_bytes);
cudaMemcpy(d_input, image.ptr<float>(0), image_bytes, cudaMemcpyHostToDevice);

float* d_output{ nullptr };
cudaMalloc(&d_output, image_bytes);
cudaMemset(d_output, 0, image_bytes);

// *************************************************************************
// clang-format off
const float kernel_template[3][3] = {
  { 1,  1, 1 },
  { 1, -8, 1 },
  { 1,  1, 1 }
};
// clang-format on

// Copy the same 3x3 template into every (output channel, input channel) pair
float h_kernel[3][3][3][3]; // NCHW: [out_channel][in_channel][row][column]
for (int kernel = 0; kernel < 3; ++kernel) {
  for (int channel = 0; channel < 3; ++channel) {
    for (int row = 0; row < 3; ++row) {
      for (int column = 0; column < 3; ++column) {
        h_kernel[kernel][channel][row][column] = kernel_template[row][column];
      }
    }
  }
}

float* d_kernel{ nullptr };
cudaMalloc(&d_kernel, sizeof(h_kernel));
cudaMemcpy(d_kernel, h_kernel, sizeof(h_kernel), cudaMemcpyHostToDevice);

// *************************************************************************
const float alpha = 1.0f, beta = 0.0f;

// The actual work: the forward convolution
checkCUDNN(cudnnConvolutionForward(cudnn,
                                   &alpha,
                                   input_descriptor,
                                   d_input,
                                   kernel_descriptor,
                                   d_kernel,
                                   convolution_descriptor,
                                   convolution_algorithm,
                                   d_workspace, // may be nullptr if the chosen algorithm needs no extra workspace
                                   workspace_bytes,
                                   &beta,
                                   output_descriptor,
                                   d_output));

if (with_sigmoid) {
  // Describe the activation
  cudnnActivationDescriptor_t activation_descriptor;
  checkCUDNN(cudnnCreateActivationDescriptor(&activation_descriptor));
  checkCUDNN(cudnnSetActivationDescriptor(activation_descriptor,
                                          CUDNN_ACTIVATION_SIGMOID,
                                          CUDNN_PROPAGATE_NAN,
                                          /*relu_coef=*/0));
  // Forward sigmoid activation, applied in place on d_output
  checkCUDNN(cudnnActivationForward(cudnn,
                                    activation_descriptor,
                                    &alpha,
                                    output_descriptor,
                                    d_output,
                                    &beta,
                                    output_descriptor,
                                    d_output));
  cudnnDestroyActivationDescriptor(activation_descriptor);
}

// Copy the result back; image_bytes is a byte count, so allocate image_bytes / sizeof(float) floats
float* h_output = new float[image_bytes / sizeof(float)];
cudaMemcpy(h_output, d_output, image_bytes, cudaMemcpyDeviceToHost);
save_image("./cudnn-out.png", h_output, height, width);
delete[] h_output;

cudaFree(d_kernel);
cudaFree(d_input);
cudaFree(d_output);
cudaFree(d_workspace);

// Destroy the descriptors and the cuDNN handle
cudnnDestroyTensorDescriptor(input_descriptor);
cudnnDestroyTensorDescriptor(output_descriptor);
cudnnDestroyFilterDescriptor(kernel_descriptor);
cudnnDestroyConvolutionDescriptor(convolution_descriptor);
cudnnDestroy(cudnn);

return 0;
}

Build and run:

$ nvcc -ccbin g++ -m64 -o cudnnOpencvt1 cudnnOpencvt1.cu -lcudnn `pkg-config --libs opencv`

$ ./cudnnOpencvt1

GPU: 0

With sigmoid: false

Output Image: 966 x 725 x 3

Workspace size: 4.00744MB

Input file: ./1.jpg

Output: ./cudnn-out.png

VII. Configuring Theano to use cuDNN

$ cat .theanorc

[global]

floatX=float32

device = cuda

#device=gpu

optimizer=fast_compile

# Keep the next line only if cuDNN was installed successfully; otherwise remove it.

optimizer_including=cudnn

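[gpuarray]

# lib.cnmem only applies to the old device=gpu backend; with device=cuda, gpuarray.preallocate sets the fraction of GPU memory to preallocate

preallocate=0.8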

[blas]

ldflags=-lopenblas

[nvcc]

flags=--machine=64

fastmath=True

[cuda]

root=/usr/local/cuda-10.2/

Test program testing_theano.py, taken from: http://deeplearning.net/software/theano/tutorial/using_gpu.html

from theano import function, config, shared, tensor
import numpy
import time

vlen = 10 * 30 * 768  # 10 x #cores x # threads per core
iters = 1000

rng = numpy.random.RandomState(22)
x = shared(numpy.asarray(rng.rand(vlen), config.floatX))
f = function([], tensor.exp(x))
print(f.maker.fgraph.toposort())
t0 = time.time()
for i in range(iters):
    r = f()
t1 = time.time()
print("Looping %d times took %f seconds" % (iters, t1 - t0))
print("Result is %s" % (r,))
if numpy.any([isinstance(x.op, tensor.Elemwise) and
              ('Gpu' not in type(x.op).__name__)
              for x in f.maker.fgraph.toposort()]):
    print('Used the cpu')
else:
    print('Used the gpu')

Source: oschina

Link: https://my.oschina.net/u/2245781/blog/4335343