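1. Why use a proxy pool

- Many websites have dedicated anti-crawler measures, so a crawler may run into problems such as having its IP banned.

- A large number of free proxies are published on the Internet, and these resources are worth using.

- With periodic checking and maintenance, you can keep a set of usable proxies available.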

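2. Requirements for the proxy pool

- Crawl from multiple sites, check proxies asynchronously

- Filter periodically, update continuously

- Provide an API so proxies are easy to retrieve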

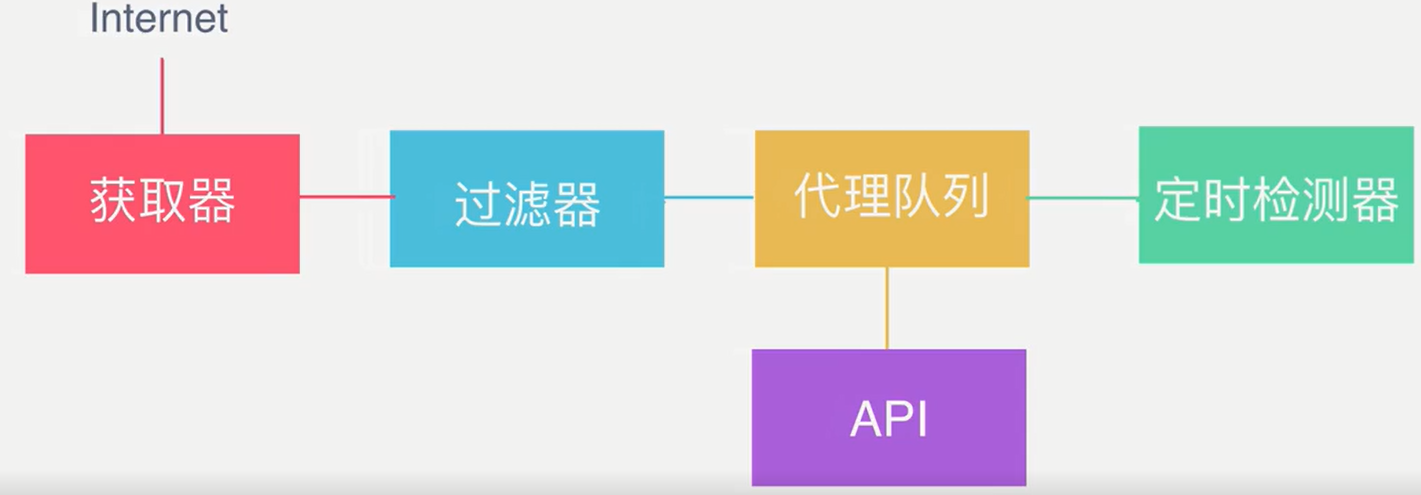

3.代理池架构

4. Download the proxy pool code from GitHub

https://github.com/Germey/ProxyPool

Install Python

Python 3.5 or later is required.

Install Redis

After installing Redis, start the Redis server.

Configure the proxy pool

```shell
cd proxypool
```

In the proxypool directory, edit settings.py. PASSWORD is the Redis password; if Redis has no password, set it to None.

Install the dependencies

```shell
pip3 install -r requirements.txt
```

Start the proxy pool and the API

```shell
python3 run.py
```

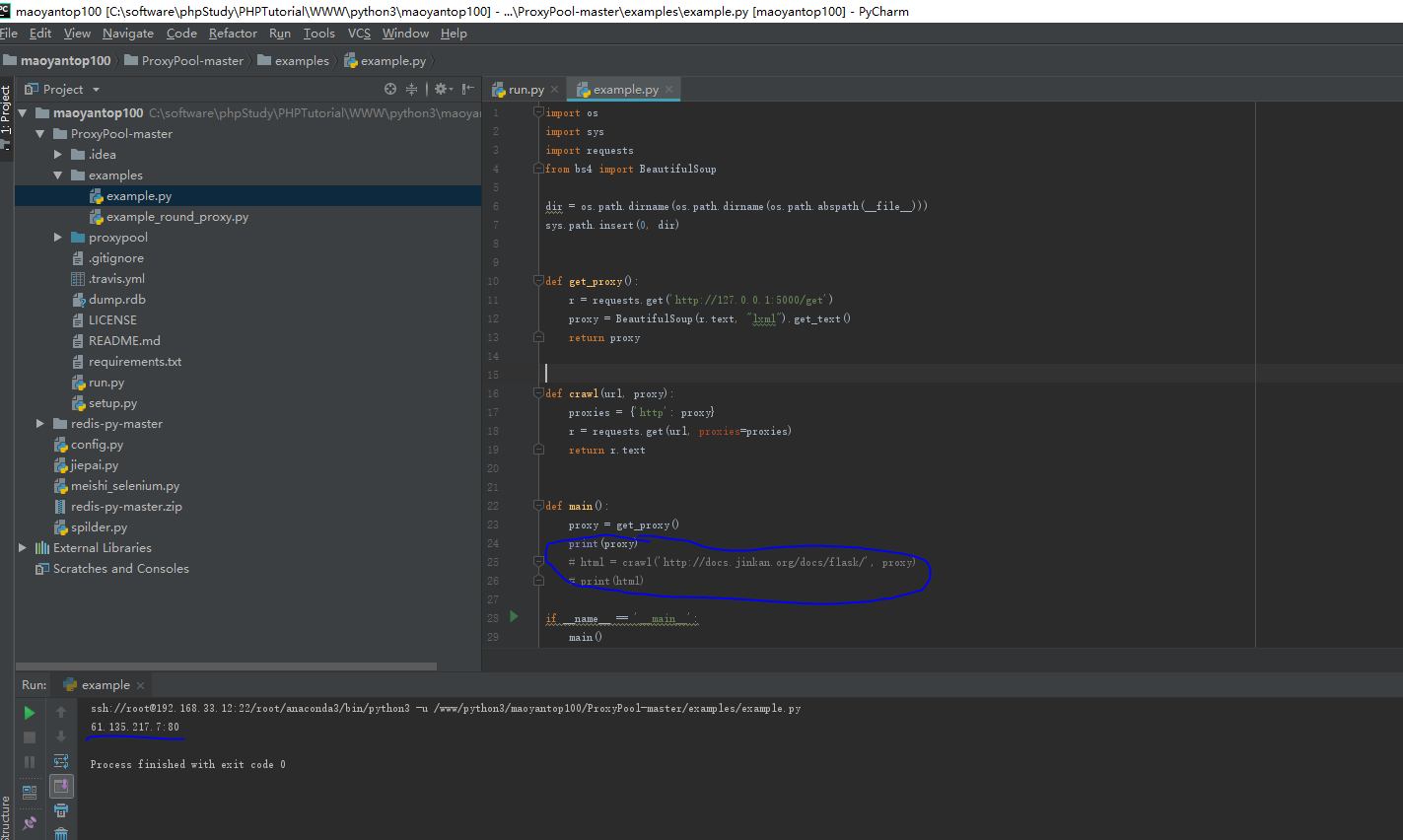

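Getting a proxy

With requests, a proxy can be fetched from the pool's API like this:

```python
import requests
# requests raises its own ConnectionError, which is NOT a subclass of the built-in one
from requests.exceptions import ConnectionError

PROXY_POOL_URL = 'http://localhost:5000/get'

def get_proxy():
    """Return one 'ip:port' string from the proxy pool API, or None on failure."""
    try:
        response = requests.get(PROXY_POOL_URL)
        if response.status_code == 200:
            return response.text
        return None
    except ConnectionError:
        return None
```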
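The string returned by `get_proxy()` is a bare `ip:port`; to send traffic through it, it has to be wrapped in a proxies mapping. A minimal standard-library sketch of that step, using `urllib` instead of requests; the helper names `proxy_handler_config` and `fetch_via_pool` are hypothetical. The same dict shape also works as the `proxies=` argument of `requests.get`. Whether a given free proxy can also tunnel HTTPS varies from proxy to proxy.

```python
import urllib.request

PROXY_POOL_URL = 'http://localhost:5000/get'  # same API endpoint as above


def proxy_handler_config(proxy):
    """Turn an 'ip:port' string from the pool into a proxies mapping (urllib/requests style)."""
    address = 'http://' + proxy.strip()
    return {'http': address, 'https': address}


def fetch_via_pool(url, timeout=10):
    """Fetch url through a proxy taken from the pool; return the body text or None on failure."""
    try:
        with urllib.request.urlopen(PROXY_POOL_URL, timeout=timeout) as resp:
            proxy = resp.read().decode().strip()
        if not proxy:
            return None
        handler = urllib.request.ProxyHandler(proxy_handler_config(proxy))
        opener = urllib.request.build_opener(handler)
        with opener.open(url, timeout=timeout) as resp:
            return resp.read().decode('utf-8', errors='replace')
    except OSError:  # URLError, refused connections and timeouts are all OSError subclasses
        return None
```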

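Module overview

getter.py

Crawler module

class proxypool.getter.FreeProxyGetter

Crawler class that scrapes proxies from proxy-listing websites; users can override it and add their own crawl rules.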
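The traceback later in this post shows `get_raw_proxies(callback)` running `eval("self.{}()".format(callback))`, with callbacks named `crawl_ip181` and `crawl_kuaidaili`. One way such a class can discover its rules automatically is a metaclass that records every method whose name starts with `crawl_`. The sketch below assumes that design; `ProxyMetaclass`, `__CrawlFunc__`, `crawl_demo` and `MyGetter` are illustrative names, and it uses `getattr` instead of `eval`.

```python
class ProxyMetaclass(type):
    """Record the names of all crawl_* methods (including inherited ones) on the class."""

    def __new__(mcs, name, bases, attrs):
        crawl_funcs = [key for key in attrs if key.startswith("crawl_")]
        for base in bases:
            for func in getattr(base, "__CrawlFunc__", []):
                if func not in crawl_funcs:
                    crawl_funcs.append(func)
        attrs["__CrawlFunc__"] = crawl_funcs
        attrs["__CrawlFuncCount__"] = len(crawl_funcs)
        return super().__new__(mcs, name, bases, attrs)


class FreeProxyGetter(metaclass=ProxyMetaclass):
    def get_raw_proxies(self, callback):
        """Run the crawl_* method named by callback and collect the 'ip:port' strings it yields."""
        proxies = []
        for proxy in getattr(self, callback)():  # getattr instead of eval()
            print("Getting", proxy, "from", callback)
            proxies.append(proxy)
        return proxies

    def crawl_demo(self):
        # Hypothetical rule: a real one would download and parse a proxy-listing page
        yield from ["10.0.0.1:8080", "10.0.0.2:3128"]


class MyGetter(FreeProxyGetter):
    def crawl_extra(self):
        # A user-supplied rule, picked up automatically by the metaclass
        yield "10.0.0.3:80"
```

Adding a rule then only means defining another `crawl_*` generator in a subclass; the scheduler can iterate over `__CrawlFunc__` without being told about it.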

schedule.py

Scheduler module

class proxypool.schedule.ValidityTester

Asynchronous tester that checks whether the given proxies are usable.
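The point of an asynchronous tester is to check many proxies at once instead of one after another. The core idea can be sketched with asyncio alone: one check per proxy, all run concurrently, a semaphore to cap how many run at the same time, and a timeout counted as failure. The `probe` coroutine is a hypothetical injection point so the sketch runs without network access (the project itself builds on aiohttp, as the `import aiohttp` error further down shows); `asyncio.run` needs Python 3.7+.

```python
import asyncio


async def check_proxies(proxies, probe, timeout=5.0, concurrency=50):
    """Run probe(proxy) for every proxy concurrently; return those that succeed within timeout."""
    proxies = list(proxies)
    semaphore = asyncio.Semaphore(concurrency)  # cap simultaneous checks

    async def check(proxy):
        async with semaphore:
            try:
                return bool(await asyncio.wait_for(probe(proxy), timeout))
            except (asyncio.TimeoutError, OSError):
                return False

    results = await asyncio.gather(*(check(p) for p in proxies))
    return [p for p, ok in zip(proxies, results) if ok]


async def fake_probe(proxy):
    # Hypothetical probe: a real one would fetch a test URL through the proxy (e.g. with aiohttp)
    await asyncio.sleep(0.01 if proxy.endswith(":8080") else 10)
    return True


if __name__ == "__main__":
    print(asyncio.run(check_proxies(["a:8080", "b:3128"], fake_probe, timeout=0.5)))
```

Because the slow proxy is cancelled at the timeout, the whole batch finishes in roughly `timeout` seconds rather than the sum of all check times.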

class proxypool.schedule.PoolAdder

Proxy adder: triggers the crawler module to top up the pool, and stops once the number of proxies in the pool reaches the threshold.
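The adder's behaviour can be summarised as: run the crawlers one by one, keep only proxies that pass validation, stop as soon as the pool reaches the upper threshold, and raise ResourceDepletionError (described under error.py) if nothing at all could be added. A sketch of that loop with a plain list as the pool; the constructor arguments and `is_over_threshold` are hypothetical, not the project's real signatures.

```python
class ResourceDepletionError(Exception):
    """Stand-in for proxypool.error.ResourceDepletionError."""


class PoolAdder:
    def __init__(self, upper_threshold, pool, crawlers, validate):
        self._threshold = upper_threshold  # stop once the pool holds this many proxies
        self._pool = pool                  # a plain list here; Redis in the real project
        self._crawlers = crawlers          # callables, each returning a list of 'ip:port'
        self._validate = validate          # callable: list of proxies -> usable ones

    def is_over_threshold(self):
        return len(self._pool) >= self._threshold

    def add_to_queue(self):
        """Run crawlers in turn until the pool is full; return how many proxies were added."""
        added = 0
        for crawl in self._crawlers:
            if self.is_over_threshold():
                break
            for proxy in self._validate(crawl()):
                if self.is_over_threshold():
                    break
                if proxy not in self._pool:
                    self._pool.append(proxy)
                    added += 1
        if added == 0 and not self.is_over_threshold():
            raise ResourceDepletionError('no usable proxy from any source')
        return added
```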

class proxypool.schedule.Schedule

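Entry point of the proxy pool. Its `run` method starts two processes: one adds proxies to the pool, the other keeps the pool's contents up to date.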
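The run log later in this post prints `Refreshing ip` and `Waiting for adding`, and its traceback shows `check_pool` running in `Process-2`, which fits the two-process design described above. A skeleton of that structure with multiprocessing; the loop bodies are placeholders, and the 60 s / 20 s cycle lengths and the `periodic` helper are assumptions, not values read from setting.py.

```python
import time
from multiprocessing import Process


def periodic(task, cycle_seconds, rounds=None):
    """Call task() every cycle_seconds; loop forever when rounds is None."""
    done = 0
    while rounds is None or done < rounds:
        task()
        done += 1
        if rounds is None or done < rounds:
            time.sleep(cycle_seconds)
    return done


def valid_proxy_loop():
    # Placeholder: re-test the proxies already in the pool and drop dead ones
    periodic(lambda: print('Refreshing ip'), cycle_seconds=60)


def check_pool_loop():
    # Placeholder: top the pool up (e.g. with PoolAdder) when it runs low
    periodic(lambda: print('Waiting for adding'), cycle_seconds=20)


class Schedule:
    def run(self):
        """Start both workers as separate processes and return them."""
        workers = [Process(target=valid_proxy_loop), Process(target=check_pool_loop)]
        for worker in workers:
            worker.start()
        return workers
```

Call `Schedule().run()` under an `if __name__ == '__main__':` guard; multiprocessing requires it on platforms that spawn child processes instead of forking them (Windows, and macOS by default since Python 3.8).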

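db.py

Redis database connection module

class proxypool.db.RedisClient

Database operations class: maintains the connection to Redis and performs create, read, update and delete operations on the database.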
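To see what such a client has to provide without running Redis, here is an in-memory stand-in. It assumes the pool is kept as a Redis list (new proxies pushed on the right, batches for re-testing taken from the left); both that layout and the method names `put`, `get`, `pop` and `queue_len` are assumptions, so check db.py for the real ones. Newer versions of the project may store proxies differently.

```python
from collections import deque


class PoolEmptyError(Exception):
    """Stand-in for proxypool.error.PoolEmptyError."""


class InMemoryClient:
    """Mimics a Redis list used as the proxy queue."""

    def __init__(self):
        self._queue = deque()

    def put(self, proxy):
        self._queue.append(proxy)  # like RPUSH

    def get(self, count=1):
        """Remove and return up to count proxies from the left (like LRANGE + LTRIM)."""
        return [self._queue.popleft() for _ in range(min(count, len(self._queue)))]

    def pop(self):
        """Remove and return the newest proxy (like RPOP); raise if the pool is empty."""
        if not self._queue:
            raise PoolEmptyError('proxy pool is empty')
        return self._queue.pop()

    @property
    def queue_len(self):
        return len(self._queue)  # like LLEN
```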

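error.py

Exception module

class proxypool.error.ResourceDepletionError

Resource depletion exception: raised when no usable proxies can be obtained from any of the crawled sites.

class proxypool.error.PoolEmptyError

Pool empty exception: raised when the proxy pool has been empty for a long time.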

api.py

API module: starts a web server, implemented with Flask, that serves proxies to clients.
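The API side only has to answer `GET /get` with one proxy as plain text, which is exactly what `get_proxy()` above reads. The project does this with Flask; the sketch below does the same with the standard library's `http.server` so it runs without extra packages. The in-memory `POOL` list and the `serve` helper are hypothetical stand-ins for the Redis-backed pool.

```python
import random
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

POOL = ['10.0.0.1:8080', '10.0.0.2:3128']  # hypothetical pool contents (Redis in the real project)


class ProxyAPIHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == '/get' and POOL:
            status, body = 200, random.choice(POOL)
        else:
            status, body = 404, 'not found'
        data = body.encode()
        self.send_response(status)
        self.send_header('Content-Type', 'text/plain')
        self.send_header('Content-Length', str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def serve(host='127.0.0.1', port=5000):
    """Start the API on a background thread and return the server (call shutdown() to stop)."""
    server = HTTPServer((host, port), ProxyAPIHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```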

utils.py

Utility toolbox (helper functions)

setting.py

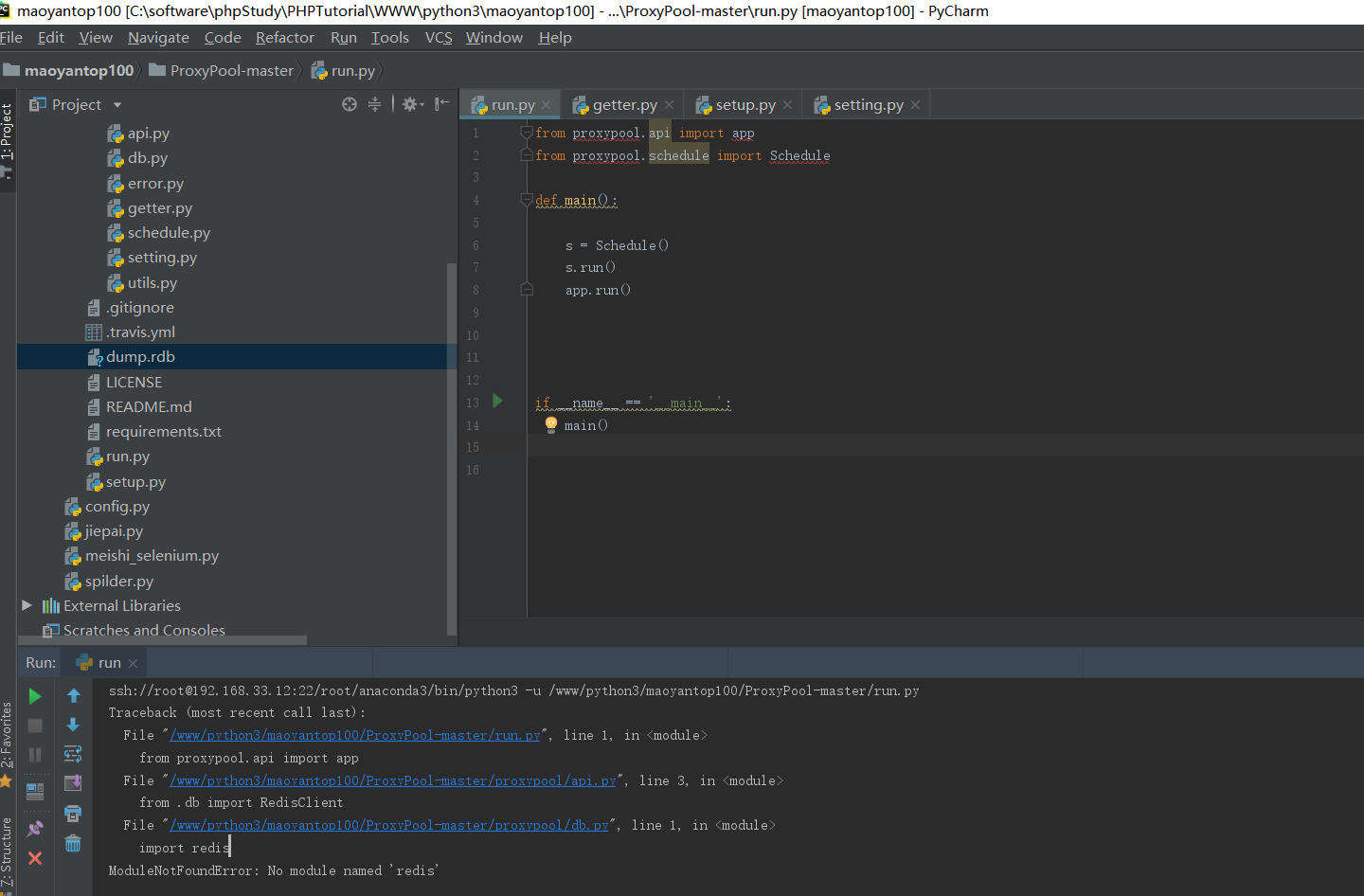

设置5.配置代理池维护的代码,后执行报错

import redis

ModuleNotFoundError: No module named 'redis' 错误原因:Python默认是不支持Redis的,当引用redis时就会报错:

错误原因:Python默认是不支持Redis的,当引用redis时就会报错:

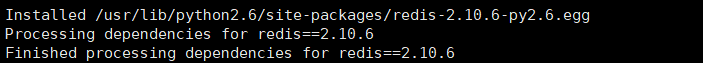

解决方法:为Python安装Redis库

登陆https://github.com/andymccurdy/redis-py下载安装包

# 安装解压缩命令

yum install -y unzip zip

# 解压

unzip redis-py-master.zip -d /usr/local/redis

# 进入解压文件目录

cd /usr/local/redis/redis-py-master

# 安装redis库

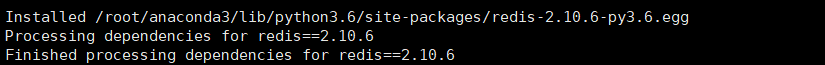

sudo python setup.py install

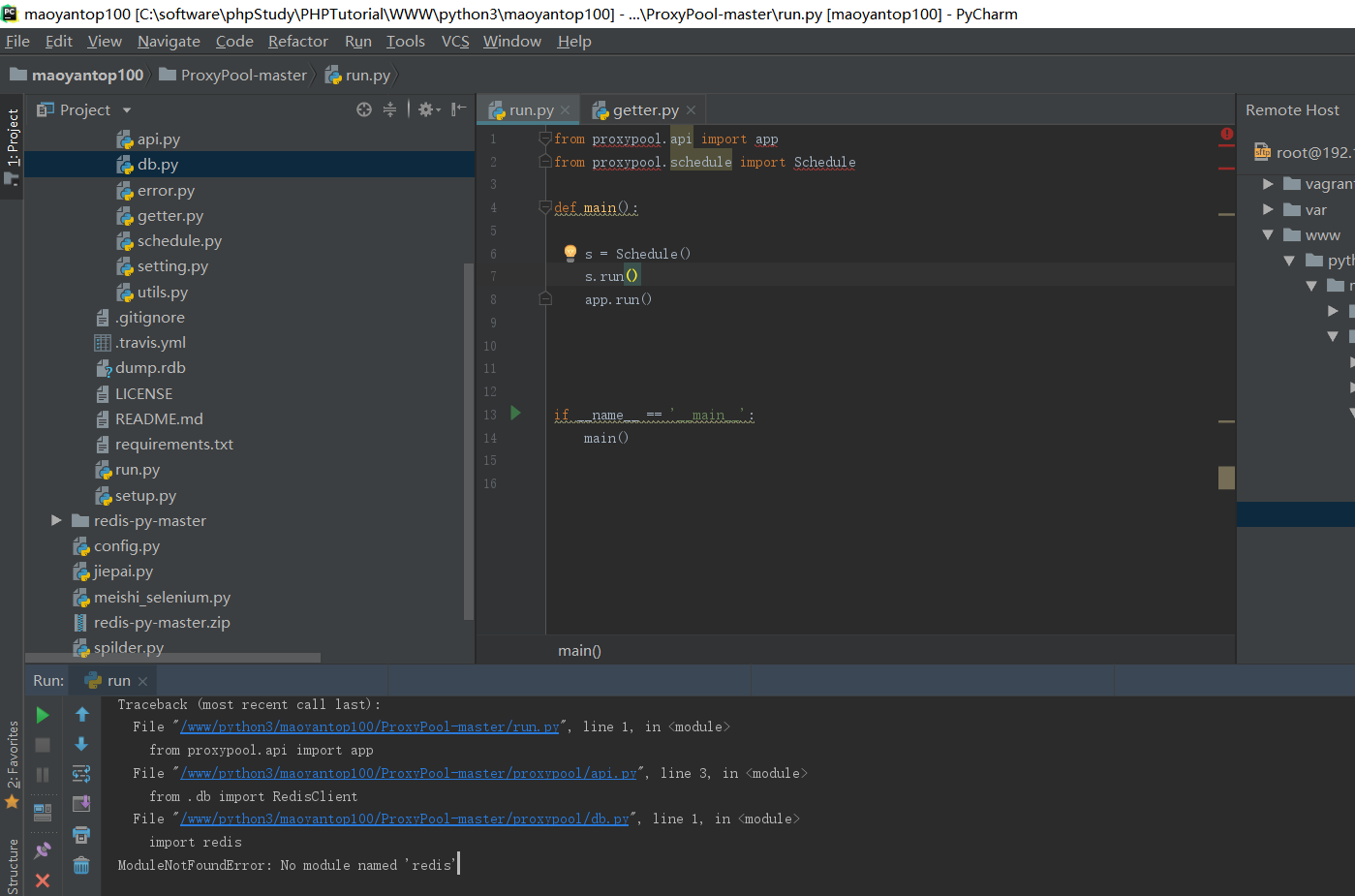

Running it again still fails:

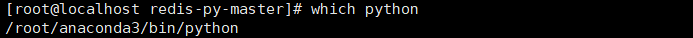

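It turned out that the redis library had been installed into a different Python interpreter than the one running the project. Install it again, this time explicitly with that interpreter:

```shell
sudo /root/anaconda3/bin/python setup.py install
```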
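A quick way to catch this kind of mismatch is to ask the interpreter itself which executable it is and which of the project's third-party modules it can import. A small sketch; the module list is an assumption collected from the imports that appear in this post.

```python
import importlib.util
import sys

# Third-party modules that appear in this post (import errors, api.py, the get_proxy example)
REQUIRED = ("redis", "aiohttp", "fake_useragent", "flask", "requests")


def missing_modules(names=REQUIRED):
    """Return the module names this interpreter cannot import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Interpreter:", sys.executable)
    print("Missing modules:", missing_modules() or "none")
```

Run it with the same interpreter that runs run.py (here `/root/anaconda3/bin/python`). Installing with that interpreter's pip, e.g. `/root/anaconda3/bin/python -m pip install redis aiohttp fake-useragent`, puts the packages into the right environment and avoids the mismatch above.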

Run it again:

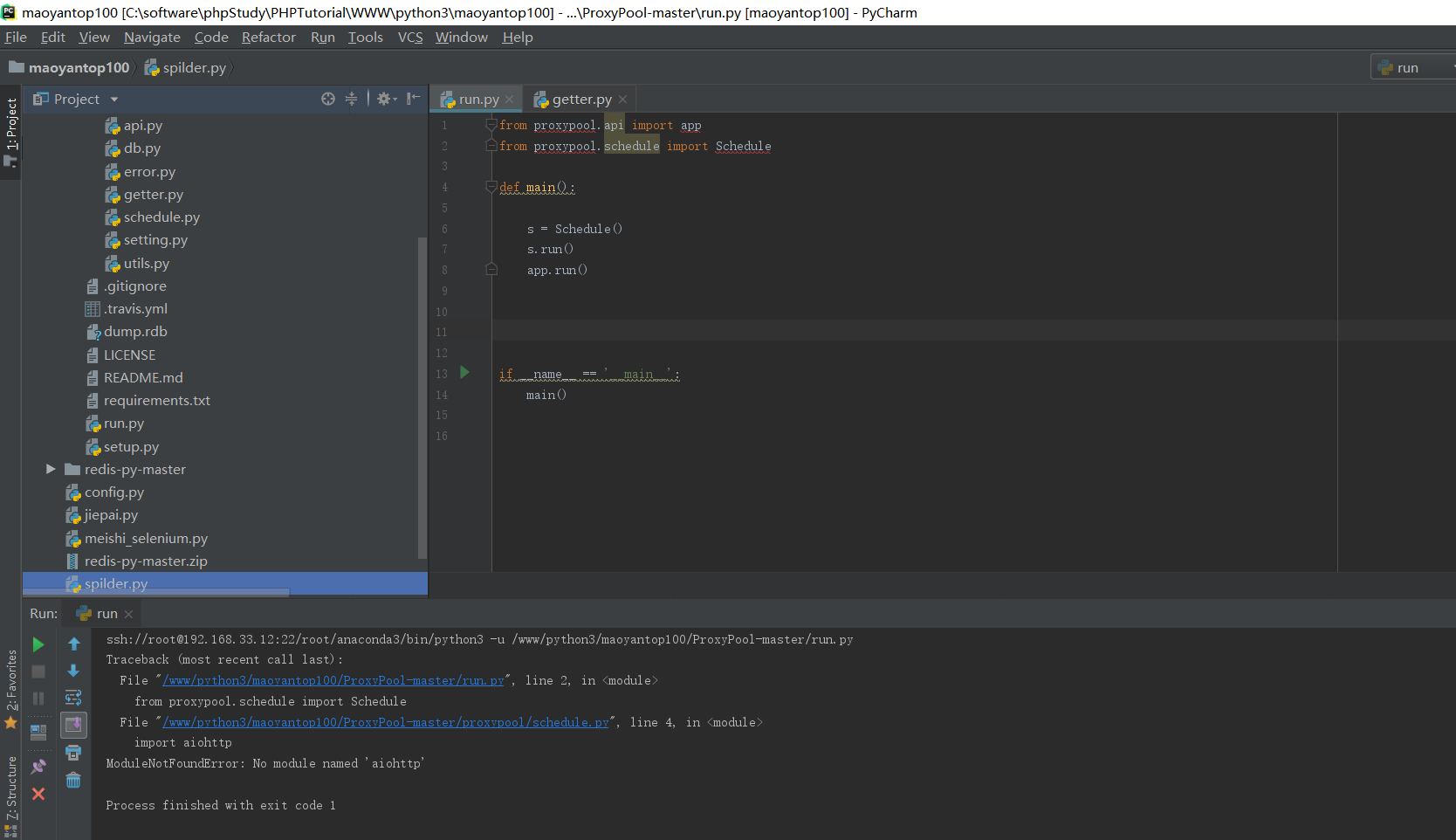

可以看到,刚刚上面的那个报错信息已经消失,但又出现了新的报错信息

import aiohttp

ModuleNotFoundError: No module named 'aiohttp'解决方法:

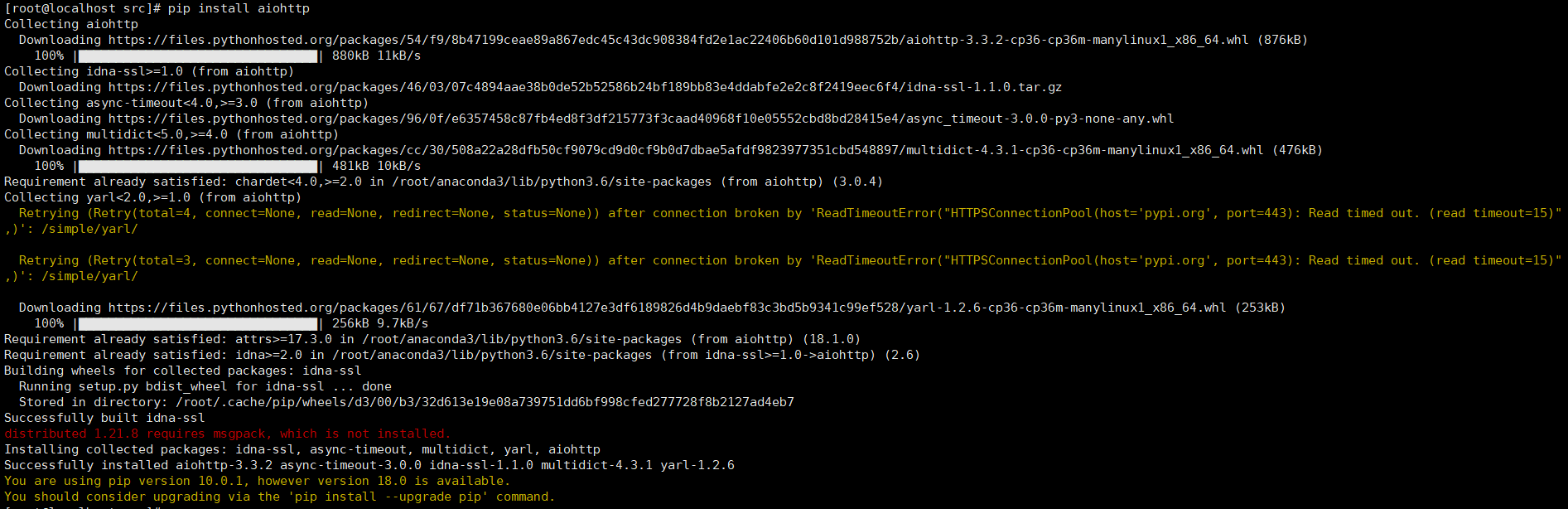

pip install aiohttp

再次执行,报错:

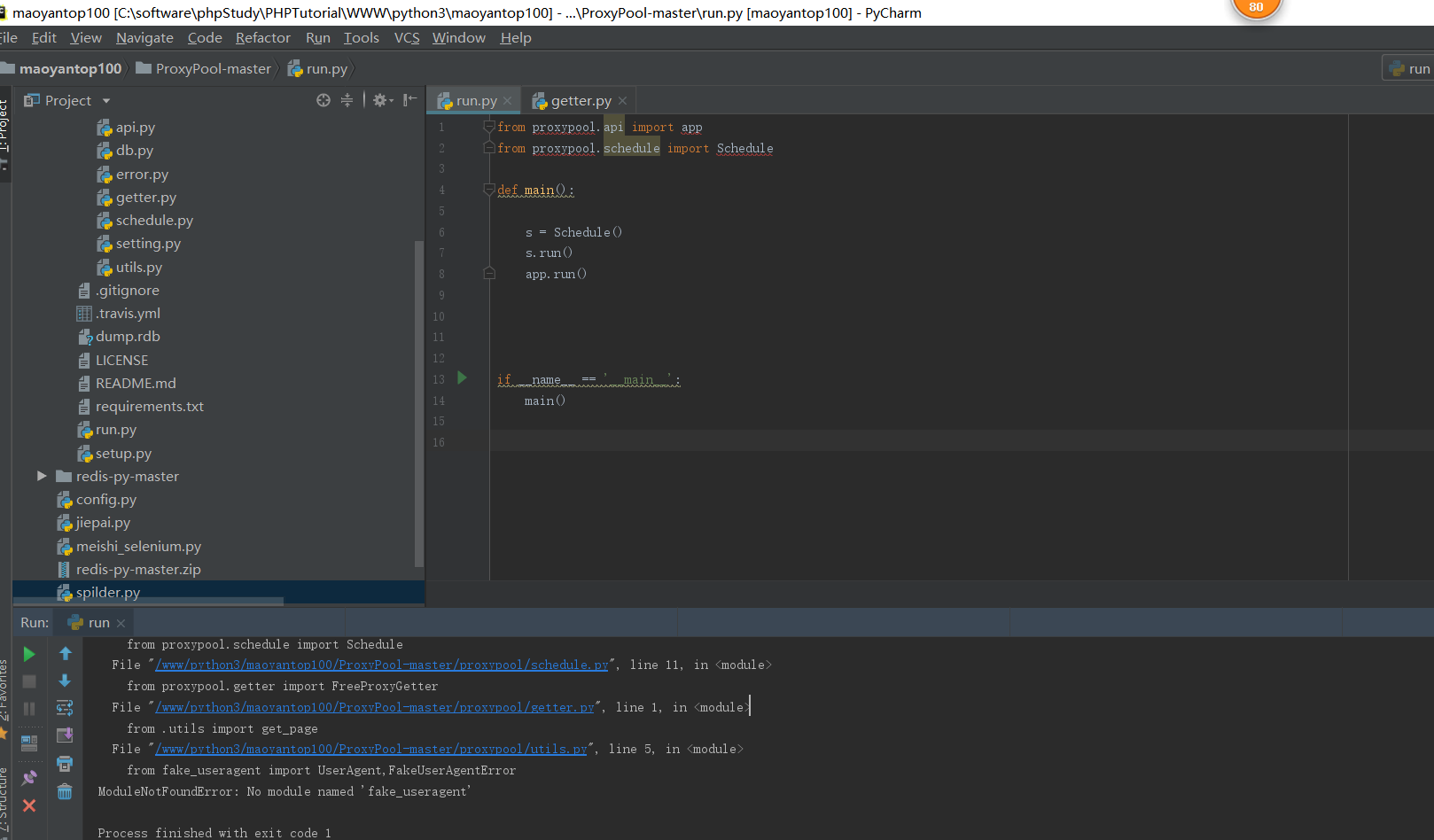

from fake_useragent import UserAgent,FakeUserAgentError

ModuleNotFoundError: No module named 'fake_useragent'解决方法:

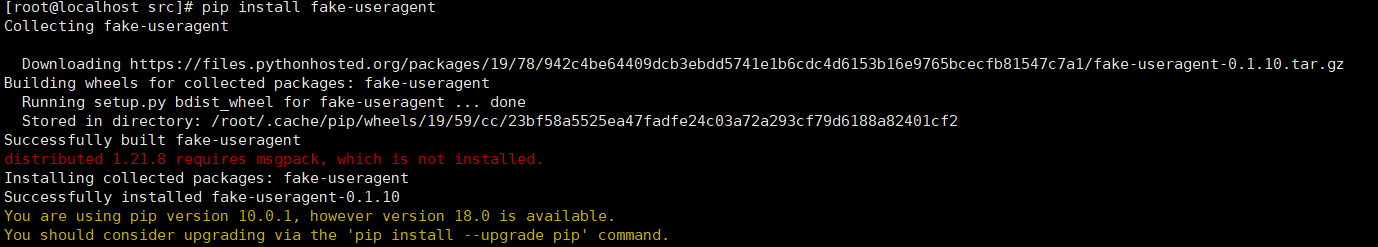

pip install fake-useragent

再次执行,报错:

Traceback (most recent call last):

File "/home/henry/dev/myproject/flaskr/flaskr.py", line 23, in <module>

app.run()

File "/home/henry/.local/lib/python3.5/site-packages/flask/app.py", line 841, in run

run_simple(host, port, self, **options)

File "/home/henry/.local/lib/python3.5/site-packages/werkzeug/serving.py", line 739, in run_simple

inner()

File "/home/henry/.local/lib/python3.5/site-packages/werkzeug/serving.py", line 699, in inner

fd=fd)

File "/home/henry/.local/lib/python3.5/site-packages/werkzeug/serving.py", line 593, in make_server

passthrough_errors, ssl_context, fd=fd)

File "/home/henry/.local/lib/python3.5/site-packages/werkzeug/serving.py", line 504, in __init__

HTTPServer.__init__(self, (host, int(port)), handler)

File "/usr/lib/python3.5/socketserver.py", line 440, in __init__

self.server_bind()

File "/usr/lib/python3.5/http/server.py", line 138, in server_bind

socketserver.TCPServer.server_bind(self)

File "/usr/lib/python3.5/socketserver.py", line 454, in server_bind

self.socket.bind(self.server_address)

OSError: [Errno 98] Address already in use

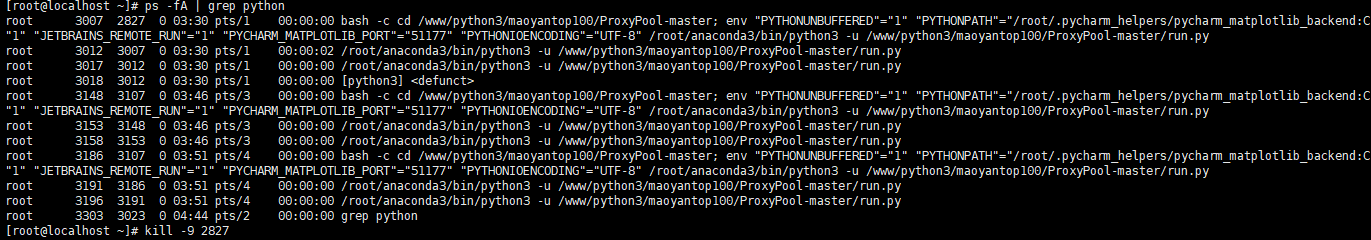

[Finished in 1.9s]临时解决办法:

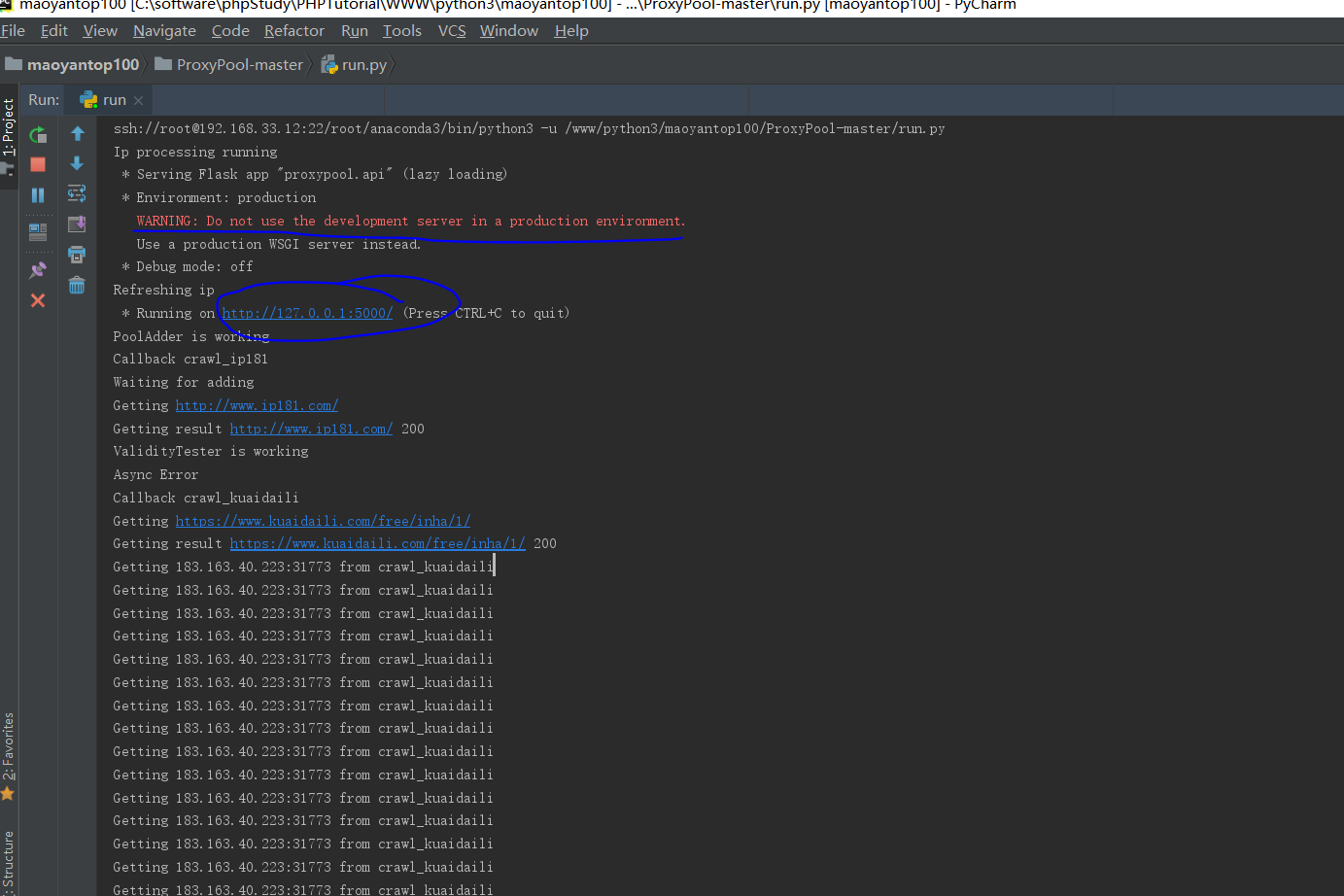
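To check from Python whether the API port is free before starting the pool, a tiny helper can simply try to bind it. A standard-library sketch; `port_in_use` is a hypothetical helper, and port 5000 is the one the API listens on in this setup. On Linux, `lsof -i :5000` shows which process holds the port.

```python
import socket


def port_in_use(port, host="127.0.0.1"):
    """Return True if host:port cannot be bound, i.e. some process already holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # EADDRINUSE (errno 98 on Linux), as in the Flask traceback;
            # note that EACCES on ports below 1024 also lands here
            return True
    return False


if __name__ == "__main__":
    print("port 5000 in use:", port_in_use(5000))
```

If the port is taken, either stop the process holding it or run the API on a different port.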

Running it again produces the following error:

```text
ssh://root@192.168.33.12:22/root/anaconda3/bin/python3 -u /www/python3/maoyantop100/ProxyPool-master/run.py
Ip processing running
* Serving Flask app "proxypool.api" (lazy loading)
* Environment: production
WARNING: Do not use the development server in a production environment.
Use a production WSGI server instead.
* Debug mode: off
Refreshing ip
* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)
Waiting for adding
PoolAdder is working
Callback crawl_ip181
Getting http://www.ip181.com/
Getting result http://www.ip181.com/ 200
ValidityTester is working
Async Error
Callback crawl_kuaidaili
Getting https://www.kuaidaili.com/free/inha/1/
Getting result https://www.kuaidaili.com/free/inha/1/ 200
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting 183.163.40.223:31773 from crawl_kuaidaili
Getting https://www.kuaidaili.com/free/inha/2/
Getting result https://www.kuaidaili.com/free/inha/2/ 503
Process Process-2:
Traceback (most recent call last):
  File "/root/anaconda3/lib/python3.6/multiprocessing/process.py", line 258, in _bootstrap
    self.run()
  File "/root/anaconda3/lib/python3.6/multiprocessing/process.py", line 93, in run
    self._target(*self._args, **self._kwargs)
  File "/www/python3/maoyantop100/ProxyPool-master/proxypool/schedule.py", line 130, in check_pool
    adder.add_to_queue()
  File "/www/python3/maoyantop100/ProxyPool-master/proxypool/schedule.py", line 87, in add_to_queue
    raw_proxies = self._crawler.get_raw_proxies(callback)
  File "/www/python3/maoyantop100/ProxyPool-master/proxypool/getter.py", line 28, in get_raw_proxies
    for proxy in eval("self.{}()".format(callback)):
  File "/www/python3/maoyantop100/ProxyPool-master/proxypool/getter.py", line 51, in crawl_kuaidaili
    re_ip_adress = ip_adress.findall(html)
TypeError: expected string or bytes-like object
Refreshing ip
Waiting for adding
```

The request for page 2 returned 503, so `html` was not a string (presumably `None`), and `findall` raised the `TypeError`.

Fix: in getter.py, line 51 (in `crawl_kuaidaili`), change `re_ip_adress = ip_adress.findall(html)` to `re_ip_adress = ip_adress.findall(str(html))`.
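Wrapping the argument in `str()` works because `str(None)` is the string `'None'`, in which the regex finds nothing, so a failed page simply contributes no proxies. An explicit guard states that intent more clearly. A sketch, assuming the IP and port sit in `<td data-title="IP">` and `<td data-title="PORT">` cells; that pattern is an assumption about kuaidaili's markup at the time, not copied from getter.py, and `parse_kuaidaili` is a hypothetical helper.

```python
import re

# Assumed markup: IP and port in adjacent <td data-title="IP"> / <td data-title="PORT"> cells
IP_PORT = re.compile(r'<td data-title="IP">(.*?)</td>\s*<td data-title="PORT">(\w+)</td>')


def parse_kuaidaili(html):
    """Yield 'ip:port' strings; yield nothing if the page could not be fetched."""
    if not html:  # e.g. None after the 503 response seen in the log
        return
    for ip, port in IP_PORT.findall(html):
        yield ip + ':' + port
```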

Run it again:

At this point, all of the errors above have been resolved.

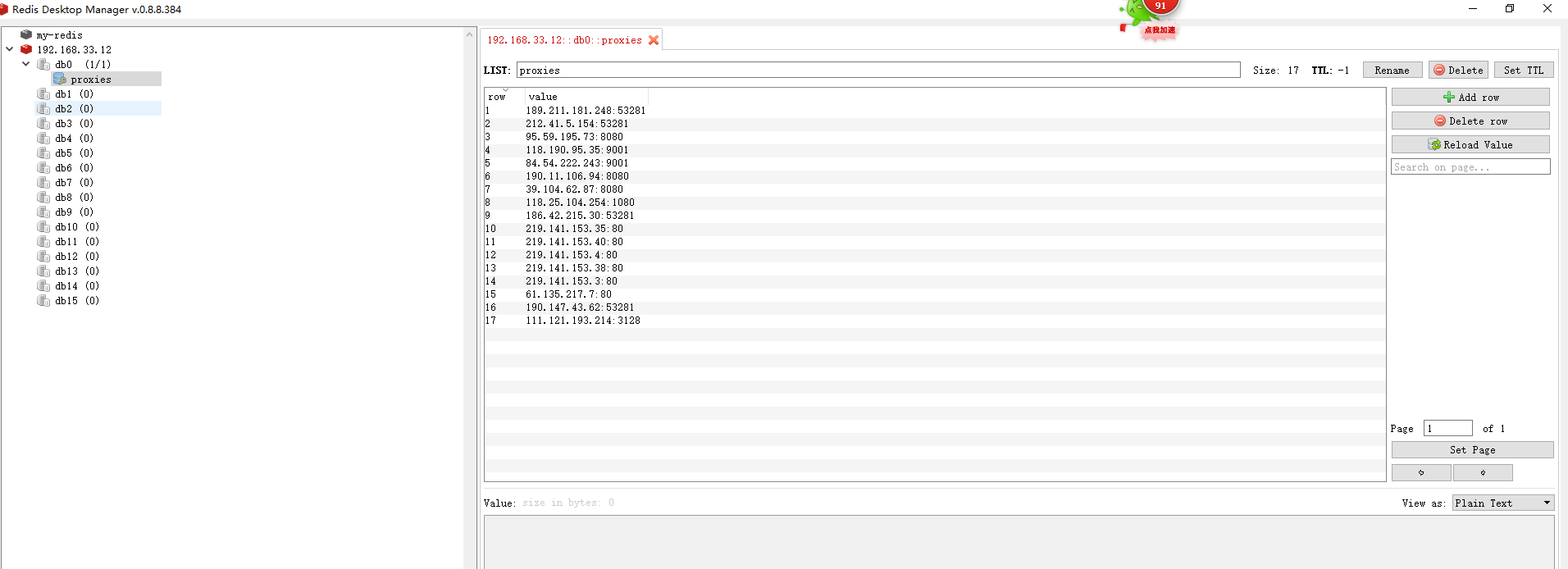

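Check the valid free proxies that have been collected in the Redis database.

Visiting the API endpoint (http://localhost:5000/get) returns a random usable proxy.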

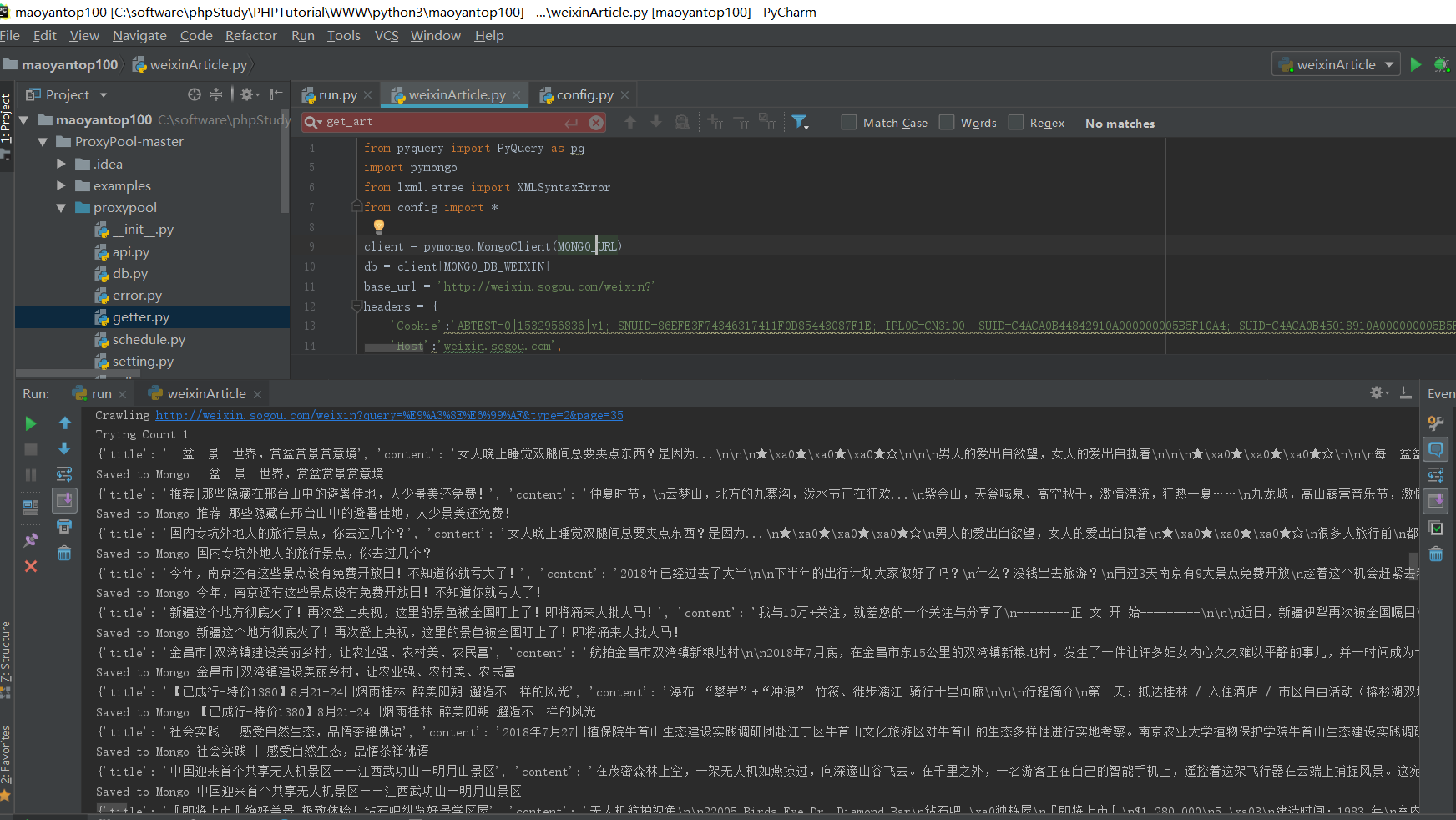

Complete reference code (a Sogou WeChat article search crawler that takes proxies from the pool):

```python
import requests
from urllib.parse import urlencode
from requests.exceptions import ConnectionError
from pyquery import PyQuery as pq
import pymongo
from lxml.etree import XMLSyntaxError
# Names used below that come from config.py: MONGO_URL, MONGO_DB_WEIXIN, PROXY_POOL_URL, MAX_COUNT, KEYWORD
from config import *

client = pymongo.MongoClient(MONGO_URL)
db = client[MONGO_DB_WEIXIN]

base_url = 'http://weixin.sogou.com/weixin?'

headers = {
    'Cookie': 'ABTEST=0|1532956836|v1; SNUID=86EFE3F74346317411F0D85443087F1E; IPLOC=CN3100; SUID=C4ACA0B44842910A000000005B5F10A4; SUID=C4ACA0B45018910A000000005B5F10A4; weixinIndexVisited=1; SUV=00CF640BB4A0ACC45B5F10A581EB0750; sct=1; JSESSIONID=aaa5-uR_KeqyY51fCIHsw; ppinf=5|1532957080|1534166680|dHJ1c3Q6MToxfGNsaWVudGlkOjQ6MjAxN3x1bmlxbmFtZToxODolRTklODIlQjklRTYlOUYlQUZ8Y3J0OjEwOjE1MzI5NTcwODB8cmVmbmljazoxODolRTklODIlQjklRTYlOUYlQUZ8dXNlcmlkOjQ0Om85dDJsdUJ4alZpSjlNNDczeEphazBteWRkeE1Ad2VpeGluLnNvaHUuY29tfA; pprdig=XiDXOUL6rc8Ehi5XsOUYk-BVIFnPjZrNpwSjN3OknS0KjPtL7-KA8pqp9rKFEWK7YIBYgcZYkB5zhQ3teTjyIEllimmEiMUBBxbe_-O8DMu6ovVCimv7V1ejJQI_vWh-Q2b1UvYM_6Pei5mh9HBEYeqi-oNVJb-U4VAcC-BiiXo; sgid=03-34235539-AVtfEZhssmpJkiaRxNjPAr1k; ppmdig=1532957080000000f835369148a0058ef4c8400357ffc265',
    'Host': 'weixin.sogou.com',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:61.0) Gecko/20100101 Firefox/61.0'
}

# The proxy currently in use ('ip:port'); None means requests go out directly
proxy = None
```

```python
# 3. Requests to the URL may trigger anti-crawler measures; when that happens, switch to a proxy
def get_proxy():
    try:
        response = requests.get(PROXY_POOL_URL)
        if response.status_code == 200:
            return response.text
        return None
    except ConnectionError:
        return None
```

```python
# 2. Request the URL and return the HTML of the search results (index) page
def get_html(url, count=1):
    print('Crawling', url)
    print('Trying Count', count)
    global proxy
    if count >= MAX_COUNT:
        print('Tried Too Many Counts')
        return None
    try:
        if proxy:
            proxies = {
                'http': 'http://' + proxy
            }
            response = requests.get(url, allow_redirects=False, headers=headers, proxies=proxies)
        else:
            response = requests.get(url, allow_redirects=False, headers=headers)
        if response.status_code == 200:
            return response.text
        if response.status_code == 302:
            # A 302 here is treated as a sign of anti-crawler blocking: switch proxy and retry
            print('302')
            proxy = get_proxy()
            if proxy:
                print('Using Proxy', proxy)
                count += 1
                return get_html(url, count)
            else:
                print('Get Proxy Failed')
                return None
    except ConnectionError as e:
        print('Error Occurred', e.args)
        proxy = get_proxy()
        count += 1
        return get_html(url, count)
```

Sample output from a run (pages 1 and 2 get a 302 on every attempt and give up with "Tried Too Many Counts"; from page 3 on, each page needs only one attempt):

```text
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=1
Trying Count 1
302
Using Proxy 190.11.106.94:8080
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=1
Trying Count 2
302
Using Proxy 213.128.7.72:53281
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=1
Trying Count 3
302
Using Proxy 190.147.43.62:53281
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=1
Trying Count 4
302
Using Proxy 39.104.62.87:8080
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=1
Trying Count 5
Tried Too Many Counts
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=2
Trying Count 1
302
Using Proxy 95.59.195.73:8080
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=2
Trying Count 2
302
Using Proxy 111.121.193.214:3128
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=2
Trying Count 3
302
Using Proxy 118.190.95.35:9001
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=2
Trying Count 4
302
Using Proxy 61.135.217.7:80
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=2
Trying Count 5
Tried Too Many Counts
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=3
Trying Count 1
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=4
Trying Count 1
[... the same two lines repeat for each page from page=5 to page=99 ...]
Crawling http://weixin.sogou.com/weixin?query=%E9%A3%8E%E6%99%AF&type=2&page=100
Trying Count 1
```

```python
# 1. Build the URL and search WeChat articles by keyword
def get_index(keyword, page):
    data = {
        'query': keyword,
        'type': 2,
        'page': page
    }
    queries = urlencode(data)
    url = base_url + queries
    html = get_html(url)
    return html
```

```python
# 4. Parse the index page HTML and yield the URLs of the article detail pages
def parse_index(html):
    doc = pq(html)
    items = doc('.news-box .news-list li .txt-box h3 a').items()
    for item in items:
        yield item.attr('href')
```

```python
# 5. Request an article detail page URL and return its HTML
def get_detail(url):
    try:
        response = requests.get(url)
        if response.status_code == 200:
            return response.text
        return None
    except ConnectionError:
        return None
```

```python
# 6. Parse the detail page HTML: article title, official account name, publish date, content, etc.
def parse_detail(html):
    try:
        doc = pq(html)
        title = doc('.rich_media_title').text()
        content = doc('.rich_media_content').text()
        date = doc('#post-date').text()
        nickname = doc('#js_profile_qrcode > div > strong').text()
        wechat = doc('#js_profile_qrcode > div > p:nth-child(3) > span').text()
        return {
            'title': title,
            'content': content,
            'date': date,
            'nickname': nickname,
            'wechat': wechat
        }
    except XMLSyntaxError:
        return None
```

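```python
# Save to MongoDB (upsert keyed on the article title)
def save_to_mongo(data):
    # Collection.update() was deprecated in PyMongo 3 and removed in PyMongo 4;
    # update_one(..., upsert=True) is the equivalent call
    result = db['articles'].update_one({'title': data['title']}, {'$set': data}, upsert=True)
    if result.acknowledged:
        print('Saved to Mongo', data['title'])
    else:
        print('Saved to Mongo Failed', data['title'])
```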

```python
# IV. Debugging: the main driver (search, parse and save pages 1 to 100)
def main():
    for page in range(1, 101):
        html = get_index(KEYWORD, page)
        # print(html)
        if html:
            article_urls = parse_index(html)
            # print(article_urls)
            # http://mp.weixin.qq.com/s?src=11&timestamp=1532962678&ver=1030&signature=y0i61ogz4QZEkNu-BrqFNFPnKwRh7qkdb7OpPVZjO2WEPPaZMv*w2USW1uosLJUJF6O4VXRw4DSLlwpCBtLjEW7fncV6idpY5xChzALf47rn8-PauyK5rgHvQTFs0ePy&new=1
            for article_url in article_urls:
                # print(article_url)
                article_html = get_detail(article_url)
                if article_html:
                    article_data = parse_detail(article_html)
                    print(article_data)
                    if article_data:
                        save_to_mongo(article_data)


if __name__ == '__main__':
    main()
```

Sample output of `print(article_data)` for one article (a travel piece on the first ten "most beautiful roads" in Guangdong; the `content` field holds the full article text and is abbreviated here):

```text
{
    'title': '广东首批十条最美公路出炉!茂名周边也有,自驾约起!',
    'content': '由广东省旅游局、广东省交通运输厅\n联合重磅发布了\n十条首批广东最美旅游公路\n条条惊艳!希望自驾骑行的别错过!...',
    'date': '',
    'nickname': '茂名建鸿传媒网',
    'wechat': ''
}
```
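`get_html` above retries by calling itself and keeps the current proxy in a global variable. The same retry policy can be written as a loop that hands back the proxy to reuse next time, which avoids both recursion and global state. This is a sketch of an alternative structure, not the author's code: the HTTP call and the pool lookup are passed in as hypothetical `fetch` and `get_proxy` callables so it can run without network access.

```python
def get_html_iterative(url, fetch, get_proxy, max_count=5, proxy=None):
    """Loop-based variant of get_html: switch to a new proxy on 302 or connection errors.

    fetch(url, proxy) -> (status_code, text) and get_proxy() -> 'ip:port' or None are
    supplied by the caller. Returns (html_or_None, proxy_to_reuse_next_time).
    """
    for count in range(1, max_count):  # same attempts as get_html: count 1 .. MAX_COUNT-1
        print('Crawling', url)
        print('Trying Count', count)
        try:
            status, text = fetch(url, proxy)
        except ConnectionError:  # with requests, catch requests.exceptions.ConnectionError
            proxy = get_proxy()
            continue
        if status == 200:
            return text, proxy
        if status != 302:
            return None, proxy
        print('302')
        proxy = get_proxy()
        if not proxy:
            print('Get Proxy Failed')
            return None, proxy
        print('Using Proxy', proxy)
    print('Tried Too Many Counts')
    return None, proxy
```

In the script, `fetch` could wrap `requests.get(url, allow_redirects=False, headers=headers, proxies=...)` and return `(response.status_code, response.text)`.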

Reference post:

OSError: [Errno 98] Address already in use (keywords: flask/bug)

Source: oschina

Link: https://my.oschina.net/u/4274927/blog/3886012