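There are many ways to migrate a Ceph cluster. The simplest is to add one new node and remove one old node at a time. That approach is not safe, though: if something goes wrong halfway through the data migration, moving the data back to the old nodes is slow and laborious, and may cause new problems of its own. This post describes a safer migration scheme.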

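1 Design

1.1 Prepare the hardware

Hardware preparation covers installing the operating system, disabling the firewall, inserting the disks, assigning network addresses, and installing the Ceph packages.

1.2 Migration plan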

Before the migration:

| Host | IP | Components |
| --- | --- | --- |
| ceph-admin | 172.18.0.131 | mon, osd |
| ceph-node1 | 172.18.0.132 | mon, osd |
| ceph-node2 | 172.18.0.133 | mon, osd |

After the migration:

| Host | IP | Components |
| --- | --- | --- |
| ceph-admin | 172.18.0.131 | mon |
| ceph-node1 | 172.18.0.132 | mon |
| ceph-node2 | 172.18.0.133 | mon |
| transfer01 | 172.18.0.135 | osd |
| transfer02 | 172.18.0.34 | osd |
| transfer03 | 172.18.0.51 | osd |

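2 Migration principle

The migration relies on Ceph's CRUSH pseudo-random placement. Put simply, when the replica count is increased, the data already in the original bucket does not move; an additional copy is written to the new bucket. When the replica count is reduced, only the data in the bucket being dropped is deleted, and the data in the other buckets does not move.

2.1 Build a separate bucket tree (new_root)

2.2 Select 3 replicas from the old bucket (default)

2.3 Increase the pool's replica count to 6

2.4 Select another 3 replicas from the new bucket (new_root), then wait for the data held by the 3 replicas in the old bucket to be copied to the 3 replicas in the new bucket, giving 6 replicas in total

2.5 Select 3 replicas from the new bucket (new_root) only (that is, give up the 3 replicas in the old bucket), then lower the pool's replica count from 6 to 3 (the 3 replicas in the old bucket are deleted automatically and the 3 replicas in the new bucket are kept, which gives a seamless and safe migration)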

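Advantages:

1 Safe. Until the final moment, when the replica count is changed and the old bucket's data is deleted automatically, the data in the old bucket is never moved, so an error during the migration cannot lose or corrupt data.

2 Time-saving. Every step is reversible. If anything goes wrong along the way, changing the crush rule and the replica count is enough to roll back, which avoids spending a lot of time and effort on backing out in the middle of a migration.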

| Step | Command | Rollback command | Change to cluster data | Risk |
| --- | --- | --- | --- | --- |
| Set rule 1 | ceph osd pool set [pool-name] crush_rule replicated_rule_1 | ceph osd pool set [pool-name] crush_rule replicated_rule | No change | None |
| Change pool replica count | ceph osd pool set [pool-name] size 6 | ceph osd pool set [pool-name] size 3 | No change | None |
| Set rule 2 | ceph osd pool set [pool-name] crush_rule replicated_rule_2 | ceph osd pool set [pool-name] crush_rule replicated_rule_1 | Data is copied from the old bucket tree to the new bucket tree | Very low |
| Set rule 3 | ceph osd pool set [pool-name] crush_rule replicated_rule_3 | ceph osd pool set [pool-name] crush_rule replicated_rule_2 | Data is deleted from the old bucket tree | Very low |
| Change pool replica count | ceph osd pool set [pool-name] size 3 | None | No change | None |

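Until rule 3 is set, the old data has not moved at all, so business traffic is unaffected and there is no risk of data loss or corruption. By the time the rule 3 step is executed, the rule 2 step has already copied a complete set of the data into the new bucket, so the whole process is safe and seamless.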
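The step table can also be kept as data next to the commands that use it. The following is a minimal dry-run sketch that only prints the forward and rollback commands for one pool; the helper name `migration_plan` is an assumption made for illustration, and the rule names are the ones used in the table.

```shell
#!/bin/sh
# Dry-run sketch: print the forward and rollback commands for one pool.
# migration_plan is a hypothetical helper name; nothing is executed here.
migration_plan() {
    pool=$1
    # Format: step|forward command|rollback command, in the order of the table.
    cat <<EOF
1|ceph osd pool set $pool crush_rule replicated_rule_1|ceph osd pool set $pool crush_rule replicated_rule
2|ceph osd pool set $pool size 6|ceph osd pool set $pool size 3
3|ceph osd pool set $pool crush_rule replicated_rule_2|ceph osd pool set $pool crush_rule replicated_rule_1
4|ceph osd pool set $pool crush_rule replicated_rule_3|ceph osd pool set $pool crush_rule replicated_rule_2
5|ceph osd pool set $pool size 3|none
EOF
}

# Print only the forward commands for the rbd pool.
migration_plan rbd | cut -d'|' -f2
```

Selecting field 3 instead of field 2 prints the matching rollback command for each step.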

3 Migration procedure

3.1 Prepare the base environment

# Prepare the disk

[root@transfer01 ~]# lsblk|grep vda

vda 252:0 0 100G 0 disk

# Update the yum repositories

[root@transfer01 yum.repos.d]# ll|grep ceph.repo

-rw-r--r--. 1 root root 614 Apr 1 14:34 ceph.repo

[root@transfer01 yum.repos.d]# ll|grep epel.repo

-rw-r--r--. 1 root root 921 Apr 1 14:34 epel.repo

# Install the Ceph packages

[root@transfer01 yum.repos.d]# yum install ceph net-tools vim -y

# Disable the firewall

[root@transfer01 yum.repos.d]# systemctl stop firewalld && systemctl disable firewalld

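3.2 Initialize the OSDs

# Set the cluster parameter osd_crush_update_on_start. This is the most critical step and must not be forgotten; otherwise the migration fails

Add osd_crush_update_on_start = false to ceph.conf, so that OSD changes do not cause changes to the CRUSH map.

After adding the parameter, restart the mon service so that it takes effect. (You can run ceph --show-config | grep osd_crush_update_on_start to verify that the parameter has changed.)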
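Editing ceph.conf by hand on several nodes is easy to get wrong, so the change can be scripted. The sketch below is one possible way to do it, not the author's method: the helper name `set_crush_update_off` is hypothetical, and placing the option directly under the `[global]` header is an assumption, since the text only says to add it to ceph.conf. The demo runs against a temporary file rather than the real configuration.

```shell
#!/bin/sh
# Sketch: force osd_crush_update_on_start = false in a ceph.conf-style file.
# set_crush_update_off is a hypothetical helper; it assumes a [global] section exists.
set_crush_update_off() {
    conf=$1
    tmp=$(mktemp) || return 1
    awk '
        # Drop any existing value, written with underscores or with spaces.
        /^[ \t]*osd[_ ]crush[_ ]update[_ ]on[_ ]start[ \t]*=/ { next }
        { print }
        # Re-add the option right after the [global] header.
        /^\[global\]/ { print "osd_crush_update_on_start = false" }
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    status=$?
    rm -f "$tmp"
    return $status
}

# Demo on a throwaway file, not on /etc/ceph/ceph.conf.
demo=$(mktemp)
printf '[global]\nfsid = e3a671b9-9bf2-4f25-9c04-af79b5cffc7a\n' > "$demo"
set_crush_update_off "$demo"
cat "$demo"
rm -f "$demo"
```

Running the helper twice leaves a single copy of the option, so it can be applied repeatedly without piling up duplicate lines.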

# Initialize the OSDs

ceph-deploy osd create transfer01 --data /dev/vda

ceph-deploy osd create transfer02 --data /dev/vda

ceph-deploy osd create transfer03 --data /dev/vda

# After initialization the OSD tree looks like this

[root@ceph-admin ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-9 0 root new_root

-1 0.14699 root default

-3 0.04900 host ceph-admin

0 hdd 0.04900 osd.0 up 1.00000 1.00000

-5 0.04900 host ceph-node1

1 hdd 0.04900 osd.1 up 1.00000 1.00000

-7 0.04900 host ceph-node2

2 hdd 0.04900 osd.2 up 1.00000 1.00000

3 hdd 0 osd.3 up 1.00000 1.00000

4 hdd 0 osd.4 up 1.00000 1.00000

5 hdd 0 osd.5 up 1.00000 1.00000

3.3 Build the new bucket tree

# Add the root and host buckets of the new bucket tree

[root@ceph-admin ceph]# ceph osd crush add-bucket new_root root

[root@ceph-admin ceph]# ceph osd crush add-bucket transfer01 host

added bucket transfer01 type host to crush map

[root@ceph-admin ceph]# ceph osd crush add-bucket transfer02 host

added bucket transfer02 type host to crush map

[root@ceph-admin ceph]# ceph osd crush add-bucket transfer03 host

added bucket transfer03 type host to crush map

[root@ceph-admin ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-13 0 host transfer03

-12 0 host transfer02

-11 0 host transfer01

-9 0 root new_root

-1 0.14699 root default

-3 0.04900 host ceph-admin

0 hdd 0.04900 osd.0 up 1.00000 1.00000

-5 0.04900 host ceph-node1

1 hdd 0.04900 osd.1 up 1.00000 1.00000

-7 0.04900 host ceph-node2

2 hdd 0.04900 osd.2 up 1.00000 1.00000

3 hdd 0 osd.3 up 1.00000 1.00000

4 hdd 0 osd.4 up 1.00000 1.00000

5 hdd 0 osd.5 up 1.00000 1.00000

# Move the host-level buckets under the root-level bucket

[root@ceph-admin ceph]# ceph osd crush move transfer01 root=new_root

moved item id -11 name 'transfer01' to location {root=new_root} in crush map

[root@ceph-admin ceph]# ceph osd crush move transfer02 root=new_root

moved item id -12 name 'transfer02' to location {root=new_root} in crush map

[root@ceph-admin ceph]# ceph osd crush move transfer03 root=new_root

moved item id -13 name 'transfer03' to location {root=new_root} in crush map

[root@ceph-admin ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-9 0 root new_root

-11 0 host transfer01

-12 0 host transfer02

-13 0 host transfer03

-1 0.14699 root default

-3 0.04900 host ceph-admin

0 hdd 0.04900 osd.0 up 1.00000 1.00000

-5 0.04900 host ceph-node1

1 hdd 0.04900 osd.1 up 1.00000 1.00000

-7 0.04900 host ceph-node2

2 hdd 0.04900 osd.2 up 1.00000 1.00000

3 hdd 0 osd.3 up 1.00000 1.00000

4 hdd 0 osd.4 up 1.00000 1.00000

5 hdd 0 osd.5 up 1.00000 1.00000

# Add the OSDs under the host-level buckets, which completes the new bucket tree

[root@ceph-admin ceph]# ceph osd crush add osd.3 0.049 host=transfer01

add item id 3 name 'osd.3' weight 0.049 at location {host=transfer01} to crush map

[root@ceph-admin ceph]# ceph osd crush add osd.4 0.049 host=transfer02

add item id 4 name 'osd.4' weight 0.049 at location {host=transfer02} to crush map

[root@ceph-admin ceph]# ceph osd crush add osd.5 0.049 host=transfer03

add item id 5 name 'osd.5' weight 0.049 at location {host=transfer03} to crush map
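The commands in this subsection repeat the same three operations for every host, so they can be generated from a short list. The sketch below is a dry run that only prints the commands; `new_tree_cmds` and its host:osd:weight argument format are assumptions made for illustration. It emits the commands host by host rather than in the grouped order used above, which builds the same tree.

```shell
#!/bin/sh
# Dry-run sketch: print the CRUSH commands that build a separate root.
# new_tree_cmds is a hypothetical helper; arguments after the root name
# are host:osd:weight triples.
new_tree_cmds() {
    root=$1
    shift
    echo "ceph osd crush add-bucket $root root"
    for spec in "$@"; do
        host=${spec%%:*}      # text before the first colon
        rest=${spec#*:}       # text after the first colon
        osd=${rest%%:*}
        weight=${rest#*:}
        echo "ceph osd crush add-bucket $host host"
        echo "ceph osd crush move $host root=$root"
        echo "ceph osd crush add $osd $weight host=$host"
    done
}

new_tree_cmds new_root transfer01:osd.3:0.049 transfer02:osd.4:0.049 transfer03:osd.5:0.049
```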

[root@ceph-admin ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-9 0.14699 root new_root

-11 0.04900 host transfer01

3 hdd 0.04900 osd.3 up 1.00000 1.00000

-12 0.04900 host transfer02

4 hdd 0.04900 osd.4 up 1.00000 1.00000

-13 0.04900 host transfer03

5 hdd 0.04900 osd.5 up 1.00000 1.00000

-1 0.14699 root default

-3 0.04900 host ceph-admin

0 hdd 0.04900 osd.0 up 1.00000 1.00000

-5 0.04900 host ceph-node1

1 hdd 0.04900 osd.1 up 1.00000 1.00000

-7 0.04900 host ceph-node2

2 hdd 0.04900 osd.2 up 1.00000 1.00000

3.4 Edit and update the crushmap

# Get the crushmap

[root@ceph-admin opt]# ceph osd getcrushmap -o /opt/map2

59

# Decompile it into a readable text file with crushtool

[root@ceph-admin opt]# crushtool -d /opt/map2 -o /opt/map2.txt

# Edit /opt/map2.txt

vim /opt/map2.txt

# begin crush map

tunable choose_local_tries 0

tunable choose_local_fallback_tries 0

tunable choose_total_tries 50

tunable chooseleaf_descend_once 1

tunable chooseleaf_vary_r 1

tunable chooseleaf_stable 1

tunable straw_calc_version 1

tunable allowed_bucket_algs 54

# devices

device 0 osd.0 class hdd

device 1 osd.1 class hdd

device 2 osd.2 class hdd

#### Add the newly initialized OSD devices

device 3 osd.3 class hdd

device 4 osd.4 class hdd

device 5 osd.5 class hdd

### Add the host buckets

……

host transfer01 {

id -11 # do not change unnecessarily

id -16 class hdd # do not change unnecessarily

# weight 0.049

alg straw2

hash 0 # rjenkins1

item osd.3 weight 0.049

}

host transfer02 {

id -12 # do not change unnecessarily

id -15 class hdd # do not change unnecessarily

# weight 0.049

alg straw2

hash 0 # rjenkins1

item osd.4 weight 0.049

}

host transfer03 {

id -13 # do not change unnecessarily

id -14 class hdd # do not change unnecessarily

# weight 0.049

alg straw2

hash 0 # rjenkins1

item osd.5 weight 0.049

}

### Add the new, separate bucket tree new_root

root new_root {

id -9 # do not change unnecessarily

id -10 class hdd # do not change unnecessarily

# weight 0.147

alg straw2

hash 0 # rjenkins1

item transfer01 weight 0.049

item transfer02 weight 0.049

item transfer03 weight 0.049

}

### Add 3 new rules

# Rule 1: select 3 replicas from the old bucket tree (3 replicas is already the default, so nothing changes in the cluster)

rule replicated_rule_1 {

id 1

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 3 type host

step emit

}

# Rule 2: select 3 replicas from the old bucket tree and 3 replicas from the new bucket tree

rule replicated_rule_2 {

id 2

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 3 type host

step emit

step take new_root

step chooseleaf firstn 3 type host

step emit

}

# Rule 3: select 3 replicas from the new bucket tree (which amounts to abandoning the old bucket tree)

rule replicated_rule_3 {

id 3

type replicated

min_size 1

max_size 10

step take new_root

step chooseleaf firstn 3 type host

step emit

}

# Compile the edited crushmap text file /opt/map2.txt into map2.bin

[root@ceph-admin opt]# crushtool -c /opt/map2.txt -o /opt/map2.bin
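Before the compiled map is injected, it can be checked offline with crushtool's test mode, which simulates placements for a rule and replica count without touching the cluster. The sketch below is an optional extra check, not part of the original procedure; `check_rule` and `count_bad_mappings` are hypothetical helper names, and the rule ids and replica counts come from the three rules defined above.

```shell
#!/bin/sh
# Sketch: check a compiled CRUSH map offline before injecting it.
# check_rule and count_bad_mappings are hypothetical helper names.

# Run crushtool's simulation for one rule id and replica count.
check_rule() {
    crushtool -i "$1" --test --rule "$2" --num-rep "$3" --show-bad-mappings
}

# Count the "bad mapping" lines that crushtool prints when a rule
# cannot place the requested number of replicas.
count_bad_mappings() {
    awk '/^bad mapping/ { n++ } END { print n + 0 }'
}

# Only runs where crushtool and the compiled map from this article exist.
if command -v crushtool >/dev/null 2>&1 && [ -f /opt/map2.bin ]; then
    for spec in 1:3 2:6 3:3; do
        rule=${spec%%:*}
        rep=${spec#*:}
        bad=$(check_rule /opt/map2.bin "$rule" "$rep" | count_bad_mappings)
        echo "rule $rule with $rep replicas: $bad bad mappings"
    done
fi
```

A count of 0 for each rule suggests that the rule can place all of its replicas with the current tree.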

# Inject the new crushmap

[root@ceph-admin opt]# ceph osd setcrushmap -i /opt/map2.bin

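60

3.5 Start the migration

# Switch the pool's crush_rule to our new rule 1. The rbd pool is used for the demonstration; in a real environment every pool must be handled the same way

# The rbd pool currently uses the default crush_rule 0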

[root@ceph-admin ~]# ceph osd dump|grep rbd|grep crush_rule

pool 1 'rbd' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 last_change 398 flags hashpspool stripe_width 0

# Set crush_rule to rule 1 (replicated_rule_1) from the edited crushmap

[root@ceph-admin ~]# ceph osd pool set rbd crush_rule replicated_rule_1

set pool 1 crush_rule to replicated_rule_1

# The default rule and rule 1 are equivalent, so data placement does not change

[root@ceph-admin ~]# ceph -s

cluster:

id: e3a671b9-9bf2-4f25-9c04-af79b5cffc7a

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node1,ceph-node2

mgr: ceph-admin(active), standbys: ceph-node1, ceph-node2

mds: cephfs-1/1/1 up {0=ceph-admin=up:active}, 2 up:standby

osd: 6 osds: 6 up, 6 in

rgw: 3 daemons active

data:

pools: 9 pools, 144 pgs

objects: 347 objects, 5.79MiB

usage: 6.32GiB used, 444GiB / 450GiB avail

pgs: 144 active+clean
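The walkthrough changes only the rbd pool, while a real cluster needs the same step applied to every pool. A minimal dry-run sketch of that loop follows; `pool_cmds` is a hypothetical helper, and `cephfs_data` is only an example pool name, not one taken from this cluster.

```shell
#!/bin/sh
# Dry-run sketch: emit the same pool setting for every pool name read from stdin.
# pool_cmds is a hypothetical helper; it prints commands instead of running them.
pool_cmds() {
    key=$1
    value=$2
    while IFS= read -r pool; do
        [ -n "$pool" ] || continue   # skip empty lines
        echo "ceph osd pool set $pool $key $value"
    done
}

# Example with two pool names; cephfs_data is a made-up example.
printf 'rbd\ncephfs_data\n' | pool_cmds crush_rule replicated_rule_1
```

On a cluster the pool names could be fed in from `ceph osd pool ls`, and the printed commands reviewed before they are run.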

# Set the pool's replica count to 6

[root@ceph-admin ~]# ceph osd pool set rbd size 6

set pool 1 size to 6

# Rule 1 selects only 3 replicas from the old bucket tree, but the pool now requires 6 replicas, which cannot be satisfied, so the PGs are active+undersized

[root@ceph-admin ~]# ceph -s

cluster:

id: e3a671b9-9bf2-4f25-9c04-af79b5cffc7a

health: HEALTH_WARN

Degraded data redundancy: 32 pgs undersized

services:

mon: 3 daemons, quorum ceph-admin,ceph-node1,ceph-node2

mgr: ceph-admin(active), standbys: ceph-node1, ceph-node2

mds: cephfs-1/1/1 up {0=ceph-admin=up:active}, 2 up:standby

osd: 6 osds: 6 up, 6 in

rgw: 3 daemons active

data:

pools: 9 pools, 144 pgs

objects: 347 objects, 5.79MiB

usage: 6.33GiB used, 444GiB / 450GiB avail

pgs: 112 active+clean

32 active+undersized

# Set crush_rule to rule 2; data copying starts under rule 2

[root@ceph-admin ~]# ceph osd pool set rbd crush_rule replicated_rule_2

set pool 1 crush_rule to replicated_rule_2

# The data starts being copied (migrated)

[root@ceph-admin ~]# ceph -s

cluster:

id: e3a671b9-9bf2-4f25-9c04-af79b5cffc7a

health: HEALTH_WARN

Reduced data availability: 13 pgs peering

services:

mon: 3 daemons, quorum ceph-admin,ceph-node1,ceph-node2

mgr: ceph-admin(active), standbys: ceph-node1, ceph-node2

mds: cephfs-1/1/1 up {0=ceph-admin=up:active}, 2 up:standby

osd: 6 osds: 6 up, 6 in

rgw: 3 daemons active

data:

pools: 9 pools, 144 pgs

objects: 347 objects, 5.79MiB

usage: 6.33GiB used, 444GiB / 450GiB avail

pgs: 9.028% pgs not active

131 active+clean

13 peering

# Once copying finishes, the old and new bucket trees each hold 3 complete replicas, 6 in total, and the cluster is back to active+clean

[root@ceph-admin ~]# ceph -s

cluster:

id: e3a671b9-9bf2-4f25-9c04-af79b5cffc7a

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node1,ceph-node2

mgr: ceph-admin(active), standbys: ceph-node1, ceph-node2

mds: cephfs-1/1/1 up {0=ceph-admin=up:active}, 2 up:standby

osd: 6 osds: 6 up, 6 in

rgw: 3 daemons active

data:

pools: 9 pools, 144 pgs

objects: 347 objects, 5.79MiB

usage: 6.33GiB used, 444GiB / 450GiB avail

pgs: 144 active+clean
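Switching to rule 3 relies on the copy under rule 2 being complete, so it helps to check for active+clean mechanically rather than by eye. The sketch below parses status text in the layout shown in this article; `all_pgs_clean` is a hypothetical helper, and the layout of `ceph -s` output may differ between Ceph releases.

```shell
#!/bin/sh
# Sketch: decide from `ceph -s` text whether every PG is active+clean.
# all_pgs_clean is a hypothetical helper; it assumes the status layout shown in this article.
all_pgs_clean() {
    awk '
        # "pools: 9 pools, 144 pgs": remember the total PG count.
        /pools:/ { for (i = 2; i <= NF; i++) if ($i == "pgs") total = $(i - 1) }
        # "... 144 active+clean": remember how many PGs are exactly active+clean.
        $NF == "active+clean" { clean = $(NF - 1) }
        END { exit !(total > 0 && clean == total) }
    '
}

# Demo with the two relevant lines from the healthy status above.
if printf 'pools: 9 pools, 144 pgs\npgs: 144 active+clean\n' | all_pgs_clean; then
    echo "all PGs clean, safe to continue"
fi
```

On a live cluster the same function could gate the next step, for example `until ceph -s | all_pgs_clean; do sleep 10; done`.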

# Switch to rule 3

[root@ceph-admin ~]# ceph osd pool set rbd crush_rule replicated_rule_3

set pool 1 crush_rule to replicated_rule_3

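# Rule 3 keeps only the data in the new bucket tree, so the system automatically deletes the 3 replicas in the old bucket. The pool's replica count is still 6 at this point, so the PGs are active+clean+remapped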

[root@ceph-admin ~]# ceph -s

cluster:

id: e3a671b9-9bf2-4f25-9c04-af79b5cffc7a

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node1,ceph-node2

mgr: ceph-admin(active), standbys: ceph-node1, ceph-node2

mds: cephfs-1/1/1 up {0=ceph-admin=up:active}, 2 up:standby

osd: 6 osds: 6 up, 6 in; 32 remapped pgs

rgw: 3 daemons active

data:

pools: 9 pools, 144 pgs

objects: 347 objects, 5.79MiB

usage: 6.33GiB used, 444GiB / 450GiB avail

pgs: 112 active+clean

32 active+clean+remapped

io:

client: 4.00KiB/s rd, 0B/s wr, 3op/s rd, 2op/s wr

# Change the pool's replica count to 3

[root@ceph-admin ~]# ceph osd pool set rbd size 3

set pool 1 size to 3

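# Above, the pool's replica count was larger than the actual number of replicas, which caused active+clean+remapped; after the replica count is set to 3, the cluster is healthy again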

[root@ceph-admin ~]# ceph -s

cluster:

id: e3a671b9-9bf2-4f25-9c04-af79b5cffc7a

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node1,ceph-node2

mgr: ceph-admin(active), standbys: ceph-node1, ceph-node2

mds: cephfs-1/1/1 up {0=ceph-admin=up:active}, 2 up:standby

osd: 6 osds: 6 up, 6 in

rgw: 3 daemons active

data:

pools: 9 pools, 144 pgs

objects: 347 objects, 5.79MiB

usage: 6.33GiB used, 444GiB / 450GiB avail

pgs: 144 active+clean

io:

client: 4.00KiB/s rd, 0B/s wr, 3op/s rd, 2op/s wr

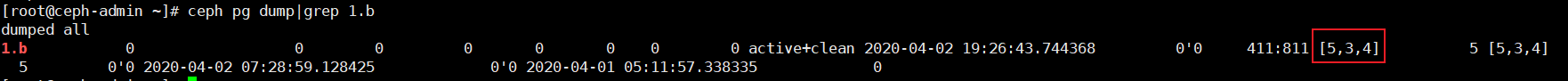

3.6 Verification

The PGs of the rbd pool have been migrated to the new OSDs 3, 4 and 5, which meets the migration requirement.
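The same check can be done in text form by looking for PGs whose acting set still names an old OSD. The sketch below assumes input rows shaped like the output of `ceph pg dump pgs_brief` (PG id, state, up set, up primary, acting set, acting primary); `pgs_on_old_osds` is a hypothetical helper, and the rows in the demo are made-up samples, not output from this cluster.

```shell
#!/bin/sh
# Sketch: list PGs of one pool whose acting set still contains an old OSD.
# pgs_on_old_osds is a hypothetical helper. It assumes rows shaped like
# `ceph pg dump pgs_brief`: PGID STATE UP UP_PRIMARY ACTING ACTING_PRIMARY.
pgs_on_old_osds() {
    pool_id=$1      # numeric pool id, e.g. 1 for rbd in this article
    old_osds=$2     # comma-separated old OSD ids, e.g. 0,1,2
    awk -v pool="$pool_id" -v old="$old_osds" '
        BEGIN { n = split(old, ids, ","); for (i = 1; i <= n; i++) is_old[ids[i]] = 1 }
        # Only rows whose PG id starts with "<pool>." belong to this pool.
        index($1, pool ".") == 1 {
            acting = $5
            gsub(/\[|\]/, "", acting)          # strip the surrounding brackets
            m = split(acting, osds, ",")
            for (j = 1; j <= m; j++) if (osds[j] in is_old) { print $1; break }
        }
    '
}

# Demo with made-up sample rows: the second PG still references osd.0.
printf '1.0 active+clean [3,4,5] 3 [3,4,5] 3\n1.1 active+clean [3,4,5] 3 [3,4,0] 3\n' |
    pgs_on_old_osds 1 0,1,2
```

An empty result for a pool means none of its listed PGs still depends on the old OSDs.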

Source: oschina

Link: https://my.oschina.net/wangzilong/blog/3217618