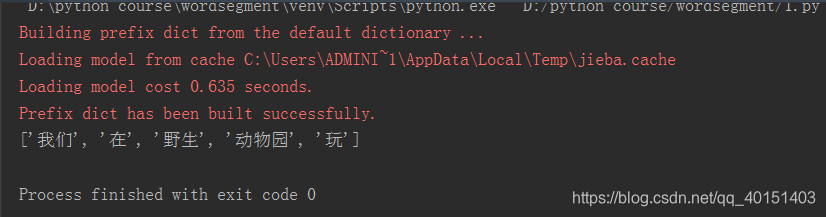

1. Text input:

import jieba

text = '我们在野生动物园玩'

wordlist = jieba.lcut(text) # lcut returns the segmented words as a list

print(wordlist)

Output:

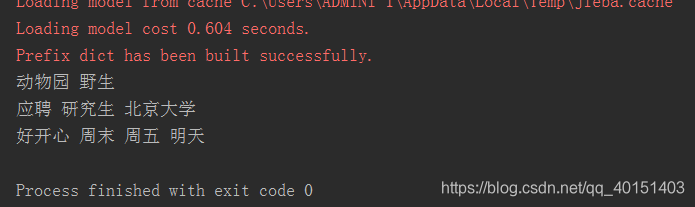

2. File input:

import jieba

import jieba.analyse

jieba.load_userdict("D:/python course/wordsegment/dict/dict.txt") # user dictionary of custom words

jieba.analyse.set_stop_words("D:/python course/wordsegment/dict/stop_words.txt") # stop-word list used by extract_tags

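def splitSentence(inputFile, outputFile):
    # Read inputFile line by line and write each line's keywords to outputFile.
    # Assumes both files are UTF-8 encoded.
    with open(inputFile, 'r', encoding='utf-8') as fin, \
         open(outputFile, 'w', encoding='utf-8') as fout:
        for line in fin:
            line = line.strip()
            # extract_tags does TF-IDF keyword extraction (top 20 by default)
            # and applies the stop-word list; it is not a full segmentation.
            tags = jieba.analyse.extract_tags(line)
            outstr = " ".join(tags)  # space-separated keywords for this line
            print(outstr)
            fout.write(outstr + '\n')

# Forward slashes avoid accidental backslash escapes in Windows paths.
splitSentence('D:/python course/wordsegment/data/input.txt',   # text to process
              'D:/python course/wordsegment/data/output.txt')  # results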

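Output: one line of space-separated keywords per input line, printed to the console and written to output.txt.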
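
Because `jieba.analyse.extract_tags` returns only the top-ranked keywords, segmenting every word of each line and then dropping stop words would use `jieba.lcut` instead. The following is a minimal sketch of that variant, assuming UTF-8 files with one stop word per line; `segment_lines`, `load_stop_words`, and the `cut` parameter are hypothetical names introduced for illustration and are not part of jieba or the original post.

```python
def load_stop_words(path):
    """Read a stop-word file (assumed UTF-8, one word per line) into a set."""
    with open(path, encoding="utf-8") as f:
        return {line.strip() for line in f if line.strip()}


def segment_lines(lines, stop_words, cut=None):
    """Segment each line, drop stop words and whitespace-only tokens,
    and return one space-joined string per input line."""
    if cut is None:
        # Imported lazily so the helper also works with any other tokenizer.
        import jieba
        cut = jieba.lcut
    result = []
    for line in lines:
        tokens = [t for t in cut(line.strip())
                  if t.strip() and t not in stop_words]
        result.append(" ".join(tokens))
    return result
```

With jieba installed, passing an open input file and the set returned by `load_stop_words` to `segment_lines` gives one segmented line per input line, which can then be written to the output file as in the function above. Passing a different callable as `cut` swaps the tokenizer without touching the filtering logic.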

Source: CSDN

Author: 我爱北回归线

Link: https://blog.csdn.net/qq_40151403/article/details/104697792