Flink is compatible with the Apache Hadoop MapReduce interfaces, so code written for Hadoop MapReduce can be reused.

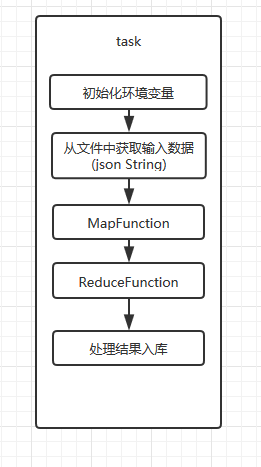

This article briefly describes how the MapReduce pattern is applied in Flink in a real project. The task is structured as follows:

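1. Add the dependency

Add the Flink dependency to the Maven `pom.xml`. The DataSet API classes used in the task (`ExecutionEnvironment`, `DataSet`) live in the `flink-java` module, so declare that module as well if the build does not already pull it in transitively.

    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_2.11</artifactId>
        <version>${project.version}</version>
    </dependency>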

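2. Writing the task

The task reads the input file, applies the map and reduce functions, and writes the aggregated counts to MongoDB.

    import java.util.List;

    import org.apache.flink.api.java.DataSet;
    import org.apache.flink.api.java.ExecutionEnvironment;
    import org.apache.flink.api.java.utils.ParameterTool;
    import org.bson.Document;

    // CarrierInfo, CarrierMap, CarrierReduce and MongoUtils are classes from this project.
    public class CarrierTask {

        public static void main(String[] args) {
            final ParameterTool params = ParameterTool.fromArgs(args);

            // Set up the execution environment
            final ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

            // Register the parameters as global job parameters
            env.getConfig().setGlobalJobParameters(params);

            // Read the input data; blank lines are skipped so the map function never has to return null
            DataSet<String> text = env.readTextFile(params.get("input"))
                    .filter(line -> !line.trim().isEmpty());

            // Map function
            DataSet<CarrierInfo> mapResult = text.map(new CarrierMap());

            // Reduce function ("groupfield" is the grouping field of CarrierInfo)
            DataSet<CarrierInfo> reduceResult = mapResult.groupBy("groupfield").reduce(new CarrierReduce());

            // Once processing is done, iterate over the result and store it in MongoDB
            try {
                // collect() already triggers execution of the job, so no env.execute() call follows;
                // calling it afterwards without a new sink would fail
                List<CarrierInfo> resultList = reduceResult.collect();
                for (CarrierInfo carrierInfo : resultList) {
                    String carrier = carrierInfo.getCarrier();
                    Long count = carrierInfo.getCount();

                    Document doc = MongoUtils.findoneby("carrierstatics", "Portrait", carrier);
                    if (doc == null) {
                        // First time this carrier is seen: create a new document
                        doc = new Document();
                        doc.put("info", carrier);
                        doc.put("count", count);
                    } else {
                        // Carrier already stored: add the new count to the previous total
                        Long countpre = doc.getLong("count");
                        Long total = countpre + count;
                        doc.put("count", total);
                    }
                    MongoUtils.saveorupdatemongo("carrierstatics", "Portrait", doc);
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }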
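The insert-or-accumulate step in the loop above can be sketched without a database. This is a minimal illustration only: `CarrierCountStore` is a hypothetical in-memory stand-in for the `carrierstatics` collection, not the project's `MongoUtils`, and the carrier labels are placeholder strings.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory stand-in for the "carrierstatics" collection:
// it maps a carrier label to its running count.
class CarrierCountStore {
    private final Map<String, Long> counts = new LinkedHashMap<>();

    // Store the count if the carrier is new, otherwise add it to the stored total.
    void saveOrAccumulate(String carrier, long count) {
        counts.merge(carrier, count, Long::sum);
    }

    // Return the stored total, or 0 if the carrier has never been saved.
    long get(String carrier) {
        return counts.getOrDefault(carrier, 0L);
    }

    public static void main(String[] args) {
        CarrierCountStore store = new CarrierCountStore();
        store.saveOrAccumulate("mobile", 3L); // result of a first job run
        store.saveOrAccumulate("mobile", 2L); // result of a later job run
        System.out.println("mobile total: " + store.get("mobile"));
    }
}
```

Because each run adds to the stored total, running the job twice on the same input would count every user twice; whether that is intended depends on how the input files are rotated.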

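3. Map function

The map method carries the main business logic: it parses each comma-separated input line and returns it as a `CarrierInfo` object.

    import org.apache.commons.lang3.StringUtils; // assumed; the original does not show which StringUtils is used
    import org.apache.flink.api.common.functions.MapFunction;

    // CarrierInfo, CarrierUtils and HbaseUtils are classes from this project.
    public class CarrierMap implements MapFunction<String, CarrierInfo> {

        @Override
        public CarrierInfo map(String s) throws Exception {
            // Flink operators generally do not expect null records,
            // so blank lines are better filtered out before this map is applied
            if (StringUtils.isBlank(s)) {
                return null;
            }

            // Input layout: userid,username,sex,telphone,email,age,registerTime,usetype
            String[] userinfos = s.split(",");
            String userid = userinfos[0];
            String username = userinfos[1];
            String sex = userinfos[2];
            String telphone = userinfos[3];
            String email = userinfos[4];
            String age = userinfos[5];
            String registerTime = userinfos[6];
            String usetype = userinfos[7]; // terminal type: 0 = PC, 1 = mobile, 2 = mini program

            // Carrier code: 0 = unknown, 1 = China Mobile, 2 = China Unicom, otherwise China Telecom
            int carriertype = CarrierUtils.getCarrierByTel(telphone);
            String carriertypestring = carriertype == 0 ? "未知运营商"
                    : carriertype == 1 ? "移动用户"
                    : carriertype == 2 ? "联通用户"
                    : "电信用户";

            String tablename = "userflaginfo";
            String rowkey = userid;
            String familyname = "baseinfo";
            String column = "carrierinfo"; // carrier

            // Store the raw per-user value in HBase
            HbaseUtils.putdata(tablename, rowkey, familyname, column, carriertypestring);

            // Custom grouping field
            String groupfield = "carrierInfo==" + carriertype;

            CarrierInfo carrierInfo = new CarrierInfo();
            carrierInfo.setCount(1L);
            carrierInfo.setCarrier(carriertypestring);
            carrierInfo.setGroupfield(groupfield);
            return carrierInfo;
        }
    }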
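The article does not show `CarrierUtils.getCarrierByTel`. A minimal sketch of what such a lookup might look like is given below, assuming it classifies an 11-digit number by its first three digits and returns the codes used above (0 unknown, 1 China Mobile, 2 China Unicom, 3 China Telecom). The class name and the prefix sets are illustrative assumptions; the sets are a small subset, not a complete or current allocation table.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch of a prefix-based carrier lookup; not the project's CarrierUtils.
class CarrierLookupSketch {
    // Small illustrative prefix subsets only (assumption, incomplete on purpose)
    private static final Set<String> MOBILE =
            new HashSet<>(Arrays.asList("135", "136", "137", "138", "139"));
    private static final Set<String> UNICOM =
            new HashSet<>(Arrays.asList("130", "131", "132"));
    private static final Set<String> TELECOM =
            new HashSet<>(Arrays.asList("133", "153", "189"));

    // Returns 0 = unknown, 1 = China Mobile, 2 = China Unicom, 3 = China Telecom.
    static int getCarrierByTel(String tel) {
        if (tel == null || tel.length() != 11) {
            return 0; // not an 11-digit mobile number
        }
        String prefix = tel.substring(0, 3);
        if (MOBILE.contains(prefix)) {
            return 1;
        }
        if (UNICOM.contains(prefix)) {
            return 2;
        }
        if (TELECOM.contains(prefix)) {
            return 3;
        }
        return 0;
    }

    // Builds the grouping key in the same format as the map function above.
    static String groupField(int carrierType) {
        return "carrierInfo==" + carrierType;
    }

    public static void main(String[] args) {
        int type = getCarrierByTel("13800000000");
        System.out.println(type + " -> " + groupField(type));
    }
}
```

A production lookup would normally load the prefix table from configuration, since number ranges are reassigned over time.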

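4. Reduce function

The reduce method aggregates the data; for counting, it typically adds the counts together. The aggregated object is then returned to the task, which iterates over the results and writes them to the database.

    import org.apache.flink.api.common.functions.ReduceFunction;

    // CarrierInfo is a class from this project.
    public class CarrierReduce implements ReduceFunction<CarrierInfo> {

        @Override
        public CarrierInfo reduce(CarrierInfo carrierInfo, CarrierInfo t1) throws Exception {
            // Both records belong to the same group, so the carrier can be taken from either one
            String carrier = carrierInfo.getCarrier();
            Long count1 = carrierInfo.getCount();
            Long count2 = t1.getCount();

            CarrierInfo carrierInfofinal = new CarrierInfo();
            carrierInfofinal.setCarrier(carrier);
            carrierInfofinal.setCount(count1 + count2);
            // Keep the grouping key on the result, since a reduced record may be grouped and reduced again
            // (assumes CarrierInfo has a getGroupfield() getter matching the setter used in the map function)
            carrierInfofinal.setGroupfield(carrierInfo.getGroupfield());
            return carrierInfofinal;
        }
    }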
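To make the group-then-reduce semantics concrete, the following plain-Java sketch mimics what `groupBy("groupfield").reduce(...)` computes, without using Flink. The `Info` class is a hypothetical, simplified stand-in for `CarrierInfo`, and the carrier labels are placeholders; Flink itself distributes this work across tasks, which the sketch does not model.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BinaryOperator;

class GroupedReduceSketch {
    // Simplified, immutable stand-in for CarrierInfo (assumption for this sketch)
    static final class Info {
        final String groupField;
        final String carrier;
        final long count;

        Info(String groupField, String carrier, long count) {
            this.groupField = groupField;
            this.carrier = carrier;
            this.count = count;
        }
    }

    // Same idea as CarrierReduce: sum the counts, keep key and carrier of the first record.
    static final BinaryOperator<Info> REDUCE =
            (a, b) -> new Info(a.groupField, a.carrier, a.count + b.count);

    // Combine records pairwise within each group until one record per key remains.
    static Map<String, Info> groupAndReduce(List<Info> records) {
        Map<String, Info> result = new LinkedHashMap<>();
        for (Info record : records) {
            result.merge(record.groupField, record, REDUCE);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Info> mapped = Arrays.asList(
                new Info("carrierInfo==1", "mobile", 1L),
                new Info("carrierInfo==2", "unicom", 1L),
                new Info("carrierInfo==1", "mobile", 1L));
        for (Info info : groupAndReduce(mapped).values()) {
            System.out.println(info.carrier + ": " + info.count);
        }
    }
}
```

The reduce function only ever sees two records of the same group at a time, which is why it must be associative and must return a record of the same type as its inputs.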

Source: CSDN

Author: 左岸Jason

Link: https://blog.csdn.net/djx1085213329/article/details/104610267