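I. Linear_Regression

1_Basic elements of linear regression

Model

Data set: the training data set, also called the training set

Loss function (also called cost function): measures the error between the predicted value and the true value; the smaller the value, the smaller the error

Optimization algorithm: stochastic gradient descent

When the model and the loss function have a simple form, the solution to the error-minimization problem above can be written directly as a formula. Such a solution is called an analytical solution.

Most deep learning models have no analytical solution; the loss can only be reduced as far as possible by updating the model parameters over a finite number of iterations of an optimization algorithm. Such a solution is called a numerical solution.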
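As a small illustration of an analytical solution, the sketch below fits a one-feature linear model y = w*x + b with the closed-form least-squares formulas. The function name fit_line and the toy data are assumptions made for this example; they are not part of the original programs.

```python
def fit_line(xs, ys):
    """Closed-form least-squares fit of y = w * x + b for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # w = covariance(x, y) / variance(x); b follows from the two means
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b

# Noiseless toy data generated from w = 2.0, b = 4.2 (illustrative values)
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 4.2 for x in xs]
w, b = fit_line(xs, ys)
print(w, b)
```

No iteration is needed here: the parameters come out of one formula, which is what distinguishes an analytical solution from a numerical one.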

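Mini-batch stochastic gradient descent, an optimization algorithm for finding a numerical solution:

step1: choose initial values for the model parameters

step2: iterate on the parameters many times, with each iteration aiming to lower the value of the loss function

step3: in each iteration, first sample uniformly at random a mini-batch made up of a fixed number of training examples

step4: compute the derivative (the gradient) of the average loss over the mini-batch with respect to the model parameters

step5: multiply this gradient by a preset positive number (the learning rate) and use the product as the amount by which the parameters are decreased in this iteration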
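Steps 3 to 5 can be condensed into one update rule. The notation is an assumption following common convention rather than something given in the notes: B is the sampled mini-batch, |B| its size, η the preset positive learning rate, and l^(i) the loss on sample i.

```latex
\begin{aligned}
w &\leftarrow w - \frac{\eta}{|\mathcal{B}|} \sum_{i \in \mathcal{B}} \frac{\partial\, l^{(i)}(w, b)}{\partial w}, \\
b &\leftarrow b - \frac{\eta}{|\mathcal{B}|} \sum_{i \in \mathcal{B}} \frac{\partial\, l^{(i)}(w, b)}{\partial b}.
\end{aligned}
```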

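The two steps of the optimization algorithm:

1. Initialize the model parameters, usually at random;

2. Iterate over the data many times, updating each parameter by moving it in the direction of the negative gradient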
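The two steps can be sketched in plain Python for a one-feature linear model with squared loss. This is a minimal sketch under stated assumptions: the function name minibatch_sgd, the hyperparameter values and the toy data are all illustrative choices, not taken from the original programs.

```python
import random

def minibatch_sgd(xs, ys, lr=0.1, batch_size=4, num_epochs=200, seed=0):
    """Fit y = w * x + b by mini-batch SGD on the loss (w*x + b - y)^2 / 2."""
    rng = random.Random(seed)
    # Step 1: initialize the parameters (small random weight, zero bias)
    w, b = rng.gauss(0.0, 0.01), 0.0
    indices = list(range(len(xs)))
    # Step 2: pass over the data many times
    for _ in range(num_epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the average loss over the mini-batch
            grad_w = sum((w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum((w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            # Move each parameter in the direction of the negative gradient
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Noiseless toy data generated from w = 2.0, b = 4.2 (illustrative values)
xs = [i / 10 for i in range(-10, 11)]
ys = [2.0 * x + 4.2 for x in xs]
w, b = minibatch_sgd(xs, ys)
print(w, b)
```

Because the toy data has no noise, the iterates approach the generating parameters closely; with noisy labels they would only settle near them.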

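Vector computation:

There are two ways to add two vectors:

1. add them element by element with scalar additions (a loop);

2. add them directly as vectors.

2_Code analysis

Program_1_Comparing element-wise scalar addition with vector addition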

import time
import torch

# init variables a, b as 1000-dimensional vectors
n = 1000
a = torch.ones(n)
b = torch.ones(n)

# define a timer class to record time
class Timer:
    # Record multiple running times
    def __init__(self):
        self.start_time = time.time()
        self.times = []
        self.start()

    def start(self):
        # start the timer
        self.start_time = time.time()
        return self.start_time

    def stop(self):
        # stop the timer and record the elapsed time in a list
        self.times.append(time.time() - self.start_time)
        return self.times[-1]

    def avg(self):
        # calculate the average and return it
        return sum(self.times) / len(self.times)

    def sum(self):
        # return the sum of the recorded times
        return sum(self.times)

# method 1: add the two vectors element by element in a loop
timer = Timer()
timer.start()
c = torch.zeros(n)
for i in range(n):
    c[i] = a[i] + b[i]
print('%.5f sec' % timer.stop())

# method 2: add the two vectors directly
timer.start()
d = a + b
print('%.5f sec' % timer.stop())

Results:

0.01007 sec

0.00003 sec

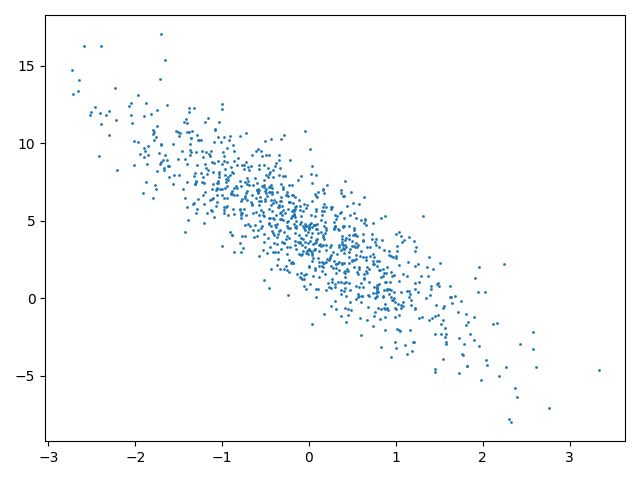

Program_2_Implementing the linear regression model

import torch
import numpy as np
import matplotlib.pyplot as plt

# number of input features
num_inputs = 2
# number of examples
num_examples = 1000
# true weight and bias, used to generate the corresponding labels
true_w = [2, -3.4]
true_b = 4.2

features = torch.randn(num_examples, num_inputs, dtype=torch.float32)
labels = true_w[0] * features[:, 0] + true_w[1] * features[:, 1] + true_b
# add Gaussian noise with standard deviation 0.01 to the labels
labels += torch.tensor(np.random.normal(0, 0.01, size=labels.size()), dtype=torch.float32)

plt.scatter(features[:, 1].numpy(), labels.numpy(), 1)

# read the dataset in shuffled mini-batches
def data_iter(batch_size, features, labels):
    num_examples = len(features)
    indices = list(range(num_examples))
    np.random.shuffle(indices)
    for i in range(0, num_examples, batch_size):
        # the last batch may hold fewer than batch_size examples
        j = torch.LongTensor(indices[i:min(i + batch_size, num_examples)])
        yield features.index_select(0, j), labels.index_select(0, j)

batch_size = 10

# x is the features and y is the labels of one batch
for x, y in data_iter(batch_size, features, labels):
    print(x, '\n', y)
    break

# initialize the parameters of the model
# w: weight, b: bias
w = torch.tensor(np.random.normal(0, 0.01, (num_inputs, 1)), dtype=torch.float32)
b = torch.zeros(1, dtype=torch.float32)
w.requires_grad_(requires_grad=True)
b.requires_grad_(requires_grad=True)

# define the model
def linreg(X, w, b):
    return torch.mm(X, w) + b

# define the loss function
def squared_loss(y_hat, y):
    # reshape y to the shape of y_hat before subtracting
    return (y_hat - y.view(y_hat.size())) ** 2 / 2

# define the optimization function
def sgd(params, lr, batch_size):
    for param in params:
        # param.grad holds the gradient of the summed batch loss, so divide by batch_size
        param.data -= lr * param.grad / batch_size

# hyperparameters
lr = 0.03
num_epoches = 5
net = linreg
loss = squared_loss

# training
for epoch in range(num_epoches):
    # in each epoch, every sample in the dataset is used once
    # X is the features and y is the labels of a mini-batch
    for X, y in data_iter(batch_size, features, labels):
        l = loss(net(X, w, b), y).sum()
        # calculate the gradient of the mini-batch loss
        l.backward()
        # update the model parameters with mini-batch stochastic gradient descent
        sgd([w, b], lr, batch_size)
        # reset the parameter gradients
        w.grad.data.zero_()
        b.grad.data.zero_()
    train_l = loss(net(features, w, b), labels)
    print('epoch %d, loss %f' % (epoch+1, train_l.mean().item()))

Results:

tensor([[-0.3569, -1.4891],
        [-1.5324,  0.6264],
        [-0.2262,  0.4117],
        [ 0.0079, -0.1557],
        [-1.3890, -0.3931],
        [ 0.2599, -0.2163],
        [ 0.1842,  0.9379],
        [-1.4903,  0.2506],
        [ 0.3148, -0.0577],
        [-0.6676, -0.7581]])
 tensor([ 8.5545, -0.9781,  2.3439,  4.7273,  2.7604,  5.4580,  1.3687,  0.3776,  5.0183,  5.4375])

epoch 1, loss 0.042602

epoch 2, loss 0.000174

epoch 3, loss 0.000049

epoch 4, loss 0.000049

epoch 5, loss 0.000049

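Note: the loss drops quickly over the 5 epochs and then levels off at about 0.000049. That is close to the floor implied by the label noise (standard deviation 0.01, so half the noise variance is 0.00005), which indicates that the optimization method works

feature, model, dataset, label

The features are the input data of each batch drawn from the dataset

Training the model relies on a loss_function and an optimization function

Choose a weight and a bias, compute the predicted label', then pass label' and the true label to the loss_function to get the error

Use the optimization function to adjust the weight and bias so that the loss decreases

In this example the model is linear_regression, and the parameters to be determined are the weight and the bias

The loss_function used is squared_loss

The optimization function used is mini-batch stochastic gradient descent

Repeat the steps above until the loss stops decreasing; the weight and bias obtained at that point are the learned parameters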

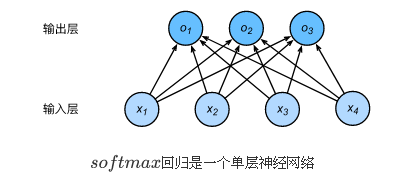

II. Softmax and classification models

1_Basic concepts of Softmax

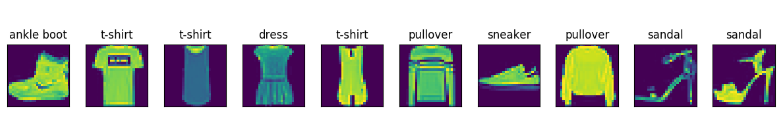

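· Softmax regression applied to a simple image classification task

Assume the input image is 2 pixels wide and 2 pixels high, in grayscale

We denote these four pixels as x1, x2, x3, x4

Suppose the true labels are dog, cat and chicken; these labels correspond to the discrete values y1, y2, y3

Classes are usually represented by discrete numbers, for example y1=1, y2=2, y3=3 for the three different classes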

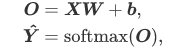

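· Weight vectors

o1 = x1w11+x2w21+x3w31+x4w41+b1

o2 = x1w12+x2w22+x3w32+x4w42+b2

o3 = x1w13+x2w23+x3w33+x4w43+b3

· Neural network diagram

Like linear regression, softmax regression is a single-layer neural network. Because the computation of every output o1, o2, o3 depends on all the inputs x1, x2, x3, x4, the output layer of softmax regression is also a fully connected layer

Because a classification problem needs a discrete prediction, the output value oi is treated as the confidence that the predicted class is i, and the class with the largest output is taken as the prediction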
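The three output equations and the "largest output wins" rule can be sketched in plain Python. All numbers below (pixel values, weights, biases) are made up for illustration, and the helper name outputs is an assumption; W[i][j] plays the role of w(i+1)(j+1) in the equations above.

```python
# A flattened 2x2 grayscale image: x1, x2, x3, x4 (illustrative values)
x = [0.5, 0.1, 0.9, 0.3]

# W[i][j] is the weight from input i+1 to output j+1 (illustrative values)
W = [
    [0.2, -0.1, 0.0],
    [0.4, 0.3, -0.2],
    [-0.5, 0.1, 0.6],
    [0.1, 0.0, 0.2],
]
b = [0.0, 0.1, -0.1]

def outputs(x, W, b):
    """Compute o_j = sum_i x_i * w_ij + b_j for every output j."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
            for j in range(len(b))]

o = outputs(x, W, b)
# The predicted class is the index of the largest output
pred = max(range(len(o)), key=lambda j: o[j])
print(o, pred)
```

With these values the third output is the largest, so the prediction is index 2, that is, the third class.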

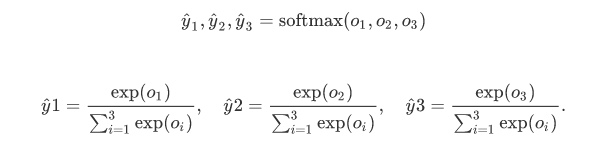

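· Softmax operator

Using the output layer values directly for classification has two problems:

1_The range of the output values is not fixed, so it is hard to judge from their magnitude what they mean

2_The true labels are discrete values, so the error between them and output values of unbounded range is hard to measure

The softmax operator exists to solve these problems:

It transforms the output values into a probability distribution whose values are all positive and sum to 1: y_hat_i = exp(oi) / (exp(o1) + exp(o2) + exp(o3))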
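A minimal plain-Python sketch of the softmax operator follows. Subtracting the maximum before exponentiating is an addition of this sketch, not something stated in the notes: it leaves the result unchanged but avoids overflow for large outputs. The input values are illustrative.

```python
import math

def softmax(o):
    """Turn a list of raw outputs into positive values that sum to 1."""
    m = max(o)                         # shift for numerical stability
    exps = [math.exp(v - m) for v in o]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([-0.28, 0.17, 0.48])
print(probs, sum(probs))
```

The ordering of the outputs is preserved, so the class with the largest raw output is also the class with the largest probability.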

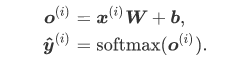

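· Computational efficiency

1. Vectorized expression for a single sample: improves computational efficiency (the Linear_Regression section already showed that vector computation is much faster than scalar computation)

2. Vectorized expression for a mini-batch: improves computational efficiency further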
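The two vectorized expressions can be written out as follows. The shapes are assumptions following the usual convention: d inputs, q outputs, n samples per mini-batch, each sample x^(i) a row vector, and the bias b broadcast over the rows in the mini-batch form.

```latex
% Single sample: x^{(i)} \in \mathbb{R}^{1 \times d},\; W \in \mathbb{R}^{d \times q},\; b \in \mathbb{R}^{1 \times q}
\begin{aligned}
o^{(i)} &= x^{(i)} W + b, \\
\hat{y}^{(i)} &= \operatorname{softmax}\bigl(o^{(i)}\bigr).
\end{aligned}

% Mini-batch: X \in \mathbb{R}^{n \times d}, softmax applied row by row
\begin{aligned}
O &= X W + b, \\
\hat{Y} &= \operatorname{softmax}(O).
\end{aligned}
```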

2_Cross-entropy loss function

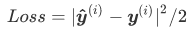

· Squared loss

· Cross-entropy loss

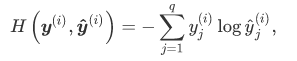

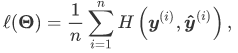

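Cross-entropy:

H(y, y_hat) = - sum_j yj * log(y_hat_j)

Cross-entropy is better suited to measuring the difference between two probability distributions

Cross-entropy loss function (averaged over the n training samples):

l = (1/n) * sum_i H(y(i), y_hat(i))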
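The difference between the two losses can be seen on a small example. The sketch below is an illustration under assumptions: the function names and the two predicted distributions are made up for this example. Both predictions give the true class a probability of 0.6, so they classify equally well, yet squared loss scores them differently while cross-entropy does not.

```python
import math

def cross_entropy(y_hat, label):
    """Cross-entropy for one sample whose true class index is `label`.

    With a one-hot true distribution only the true-class term survives,
    so the sum reduces to -log(y_hat[label]).
    """
    return -math.log(y_hat[label])

def squared_loss(y_hat, label):
    """Half the squared distance between y_hat and the one-hot label."""
    return sum((p - (1.0 if j == label else 0.0)) ** 2
               for j, p in enumerate(y_hat)) / 2

label = 2                  # the true class is the third one
pred_a = [0.2, 0.2, 0.6]   # illustrative prediction
pred_b = [0.0, 0.4, 0.6]   # illustrative prediction

print(cross_entropy(pred_a, label), cross_entropy(pred_b, label))
print(squared_loss(pred_a, label), squared_loss(pred_b, label))
```

Cross-entropy only looks at the probability assigned to the true class, which is all that matters for getting the classification right; squared loss also penalizes how the remaining probability is split among the wrong classes.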

3_Code analysis

Program_1_Get the datasets

import matplotlib.pyplot as plt
import torch
import torchvision
import torchvision.transforms as transforms
import time
import sys
import d2lzh as d2l

# download the dataset (if it is not already under root) and load it
mnist_train = torchvision.datasets.FashionMNIST(root='/home/yuzhu/input/FashionMNIST2065', train=True, download=True, transform=transforms.ToTensor())
mnist_test = torchvision.datasets.FashionMNIST(root='/home/yuzhu/input/FashionMNIST2065', train=False, download=True, transform=transforms.ToTensor())

# show the result
print(type(mnist_train))
print(len(mnist_train), len(mnist_test))

Note_1: besides the imported packages, the package named mxnet must also be installed, because d2lzh imports it

Note_2: root is the root directory of the dataset, which holds the files processed/training.pt and processed/test.pt

# each sample can be accessed by its index
feature, label = mnist_train[0]
print(feature.shape, label)

# each sample is a 3-dimensional tensor: channels x height x width (1 x 28 x 28 here)
# without the ToTensor transform each sample is a PIL image, and printing it shows the image parameters
mnist_PIL = torchvision.datasets.FashionMNIST(root='/home/yuzhu/input/FashionMNIST2065', train=True, download=True)
PIL_feature, label = mnist_PIL[0]
print(PIL_feature)

# map numeric labels to their text names
def get_fashion_mnist_labels(labels):
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat', 'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

# draw several images in one row, each with its label as the title
def show_fashion_mnist(images, labels):
    d2l.use_svg_display()
    _, figs = plt.subplots(1, len(images), figsize=(12, 12))
    for f, img, lbl in zip(figs, images, labels):
        f.imshow(img.view((28, 28)).numpy())
        f.set_title(lbl)
        f.axes.get_xaxis().set_visible(False)
        f.axes.get_yaxis().set_visible(False)
    plt.show()

# show the first 10 training samples
x, y = [], []
for i in range(10):
    x.append(mnist_train[i][0])
    y.append(mnist_train[i][1])
show_fashion_mnist(x, get_fashion_mnist_labels(y))

Results:

<class 'torchvision.datasets.mnist.FashionMNIST'>

60000 10000

torch.Size([1, 28, 28]) 9

<PIL.Image.Image image mode=L size=28x28 at 0x7FCF986AF9B0>

Program_2_Softmax implementation

import torch
import torchvision
import numpy as np
import sys
import d2lzh as d2l

batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, root='/home/yuzhu/input/FashionMNIST2065')

# initialize the model parameters
num_inputs = 784  # 28*28 = 784
num_outputs = 10

w = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_outputs)), dtype=torch.float)
b = torch.zeros(num_outputs, dtype=torch.float)
w.requires_grad_(requires_grad=True)
b.requires_grad_(requires_grad=True)

x = torch.tensor([[1, 2, 3], [4, 5, 6]])

# define the softmax operator
def softmax(x):
    x_exp = x.exp()
    # sum over each row and keep the dimension so the division broadcasts row by row
    partition = x_exp.sum(dim=1, keepdim=True)
    return x_exp / partition

# check: every row of the result is positive and sums to 1
X = torch.rand(2, 5)
x_prob = softmax(X)
print(x_prob, '\n', x_prob.sum(dim=1))

# define the softmax regression model
def net(x):
    return softmax(torch.mm(x.view((-1, num_inputs)), w) + b)

# gather picks, from each row of y_hat, the predicted probability of the true class
y_hat = torch.tensor([[0.1, 0.3, 0.6], [0.3, 0.2, 0.5]])
y = torch.LongTensor([0, 2])
y_hat.gather(1, y.view(-1, 1))

# define the loss function
def cross_entropy(y_hat, y):
    return - torch.log(y_hat.gather(1, y.view(-1, 1)))

# define the accuracy
def accuracy(y_hat, y):
    return (y_hat.argmax(dim=1) == y).float().mean().item()

def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    for x, y in data_iter:
        # sum is a method and must be called: .sum().item()
        acc_sum += (net(x).argmax(dim=1) == y).float().sum().item()
        n += y.shape[0]
    return acc_sum / n

# train the model
num_epoches, lr = 5, 0.1

def train_ch3(net, train_iter, test_iter, loss, num_epoches, batch_size, params=None, lr=None, optimizer=None):
    for epoch in range(num_epoches):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()

            # reset the gradients to zero
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                d2l.sgd(params, lr, batch_size)
            else:
                optimizer.step()

            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f' % (epoch+1, train_l_sum / n, train_acc_sum/n, test_acc))

train_ch3(net, train_iter, test_iter, cross_entropy, num_epoches, batch_size, [w, b], lr)

# compare true and predicted labels on the first test batch
# (Python 3 iterators have no .next() method; use the built-in next())
x, y = next(iter(test_iter))
true_labels = d2l.get_fashion_mnist_labels(y.numpy())
pred_labels = d2l.get_fashion_mnist_labels(net(x).argmax(dim=1).numpy())
titles = [true + '\n' + pred for true, pred in zip(true_labels, pred_labels)]
d2l.show_fashion_mnist(x[0: 9], titles[0: 9])

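Note_1: the .view method of a tensor reshapes the existing tensor. A dimension whose size is not given explicitly can be written as -1 and is inferred from the others; at most one dimension can be -1

Note_2: torch.mm performs matrix multiplication

Note_3: LongTensor holds 64-bit integers (int64), while the default Tensor holds 32-bit floats (float32)

Note_4: the run in PyCharm fails because the batches from train_iter are of type NDArray, which has no .view method. This is most likely not a PyCharm bug: d2lzh appears to be the MXNet version of the helper package, so its load_data_fashion_mnist yields MXNet NDArray batches rather than torch tensors. Loading the torchvision dataset with torch.utils.data.DataLoader yields torch tensors, which do have .view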

III. Multilayer perceptron

1_Basics of the multilayer perceptron (MLP)

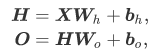

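In the neural network diagram of this multilayer perceptron there is one hidden layer, and both the hidden layer and the output layer are fully connected layers. For a multilayer perceptron with a single hidden layer designed this way, the output is computed as: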

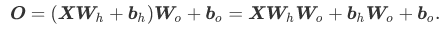

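That is, the output of the hidden layer is used directly as the input of the output layer. Combining the two equations above gives:

O = (X Wh + bh) Wo + bo = X Wh Wo + bh Wo + bo

The combined equation shows that, although the network has a hidden layer, it is still equivalent to a single-layer neural network whose output-layer weight is WhWo and whose bias is bhWo+bo. It follows that with linear transformations alone, no matter how many hidden layers are added, the output is still equivalent to that of a single-layer network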
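The equivalence can be checked numerically with small matrices. In this plain-Python sketch the helper names matmul and add_bias and all the numbers are illustrative assumptions; X holds one sample per row, matching the bias term bhWo+bo above.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def add_bias(A, b):
    """Add the bias vector b to every row of A."""
    return [[v + b[j] for j, v in enumerate(row)] for row in A]

X = [[1.0, 2.0], [3.0, -1.0]]               # 2 samples, 2 inputs
W_h = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]  # 2 inputs -> 3 hidden units
b_h = [0.1, 0.2, 0.3]
W_o = [[1.0, 0.0], [-2.0, 1.0], [0.5, 3.0]] # 3 hidden units -> 2 outputs
b_o = [0.4, -0.6]

# Two linear layers with no activation in between
two_layer = add_bias(matmul(add_bias(matmul(X, W_h), b_h), W_o), b_o)

# One layer with weight Wh Wo and bias bh Wo + bo
W = matmul(W_h, W_o)
b = [v + b_o[j] for j, v in enumerate(matmul([b_h], W_o)[0])]
one_layer = add_bias(matmul(X, W), b)

print(two_layer)
print(one_layer)
```

The two results agree up to floating-point rounding, which is why stacking purely linear layers adds no expressive power.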

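· Activation function

The root of the problem above is that a fully connected layer only applies an affine transformation to the data, and a composition of several affine transformations is still an affine transformation. The remedy is to introduce a nonlinear transformation: apply an element-wise nonlinear function to the hidden variables and then feed the result into the next fully connected layer. This nonlinear function is called the activation function

Commonly used activation functions include: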

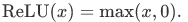

1_ReLU function

ReLU (rectified linear unit) keeps only the positive elements and sets the negative elements to zero:

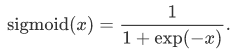

2_Sigmoid function

The sigmoid function maps each element to a value between 0 and 1:

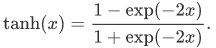

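3_tanh function

The tanh (hyperbolic tangent) function maps each element to a value between -1 and 1:

tanh(x) = (1 - exp(-2x)) / (1 + exp(-2x))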
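The three activation functions can be written for a single scalar in plain Python, as a minimal sketch; the programs in these notes apply them element-wise to tensors instead. The sigmoid and tanh versions use the textbook formulas directly and are intended for moderate inputs only, since math.exp overflows for very large arguments.

```python
import math

def relu(x):
    """Keep positive values, set negative values to zero."""
    return max(x, 0.0)

def sigmoid(x):
    """Map x to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """Map x to the interval (-1, 1)."""
    return (1.0 - math.exp(-2.0 * x)) / (1.0 + math.exp(-2.0 * x))

for v in (-2.0, 0.0, 2.0):
    print(v, relu(v), sigmoid(v), tanh(v))
```

At 0 the three functions return 0, 0.5 and 0 respectively, which is a quick way to tell their output ranges apart.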

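On choosing an activation function: ReLU is a general-purpose activation function and is used in most cases, but it is normally used only in hidden layers. Sigmoid tends to work better at the output of a classifier. When the network has many layers, ReLU is preferable because it is simple and cheap to compute

2_Multilayer perceptron (MLP)

A multilayer perceptron is a neural network made of fully connected layers with at least one hidden layer, in which the output of each hidden layer is transformed by an activation function. The number of layers and the number of hidden units in each layer are hyperparameters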
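For a single hidden layer, this definition can be written as two equations. The symbols are assumptions in the usual notation: X is the mini-batch of inputs with one sample per row, φ is the element-wise activation function, and the subscripts h and o mark hidden-layer and output-layer parameters.

```latex
\begin{aligned}
H &= \phi\bigl(X W_h + b_h\bigr), \\
O &= H W_o + b_o.
\end{aligned}
```

Because φ is nonlinear, these two equations can no longer be merged into a single affine map.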

Program:

import numpy as np
import sys
import d2lzh as d2l
import torch

batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, root='/home/yuzhu/input/FashionMNIST2065')

# define the model parameters
num_inputs, num_outputs, num_hiddens = 784, 10, 256

w1 = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_hiddens)), dtype=torch.float)
b1 = torch.zeros(num_hiddens, dtype=torch.float)
w2 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_outputs)), dtype=torch.float)
b2 = torch.zeros(num_outputs, dtype=torch.float)

params = [w1, b1, w2, b2]
for param in params:
    param.requires_grad_(requires_grad=True)

# define the activation function
def relu(x):
    return torch.max(input=x, other=torch.tensor(0.0))

# define the net: one hidden layer with ReLU, then a linear output layer
def net(x):
    x = x.view((-1, num_inputs))
    H = relu(torch.matmul(x, w1) + b1)
    return torch.matmul(H, w2) + b2

# define the loss function (it applies softmax internally, so net returns raw outputs)
loss = torch.nn.CrossEntropyLoss()

# training
# lr looks very large: CrossEntropyLoss already averages over the batch, so if d2l.sgd
# divides the gradient by batch_size again (as the sgd defined in section I does),
# the effective step size is lr / batch_size
num_epoches, lr = 5, 100.0
d2l.train_ch3(net, train_iter, test_iter, loss, num_epoches, batch_size, params, lr)

Source: CSDN

Author: yonki_e

Link: https://blog.csdn.net/bkbvushu/article/details/104310089