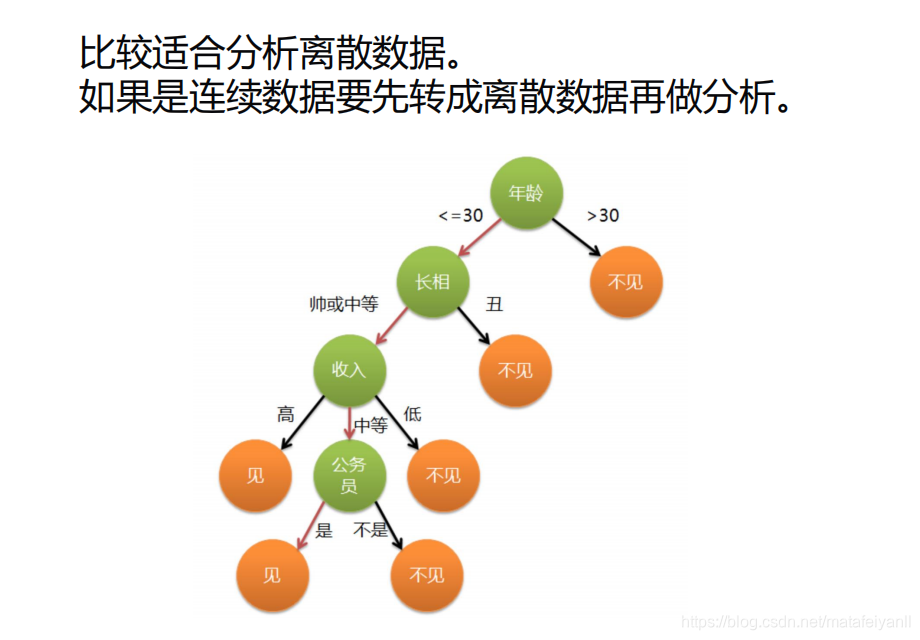

Decision Tree: ID3

Principle

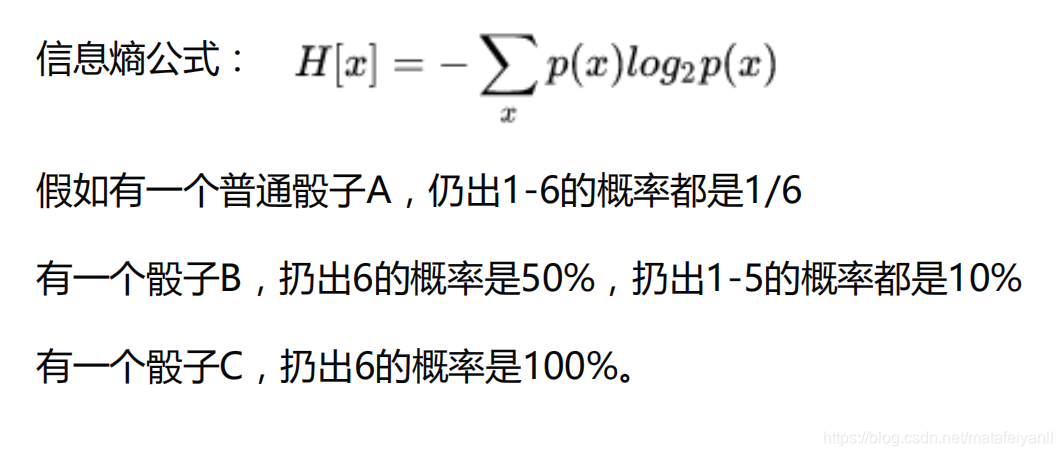

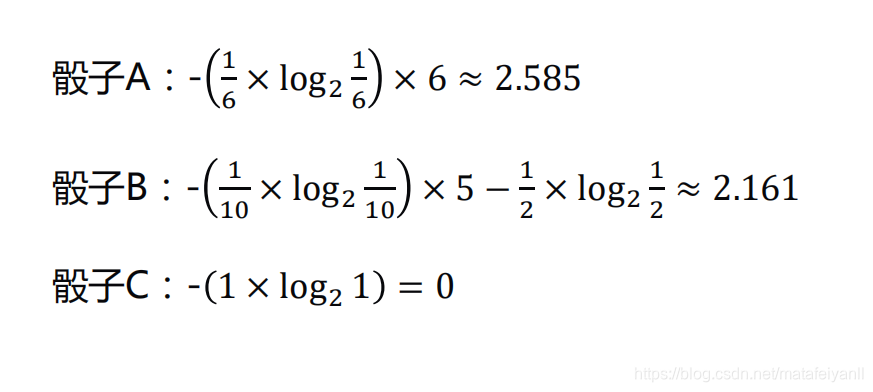

Entropy: a measure of uncertainty

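The amount of information a message carries is directly related to its uncertainty. To understand something highly uncertain, or something we know nothing about, we need a large amount of information. The measure of information content therefore equals the amount of uncertainty.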

Computing information entropy

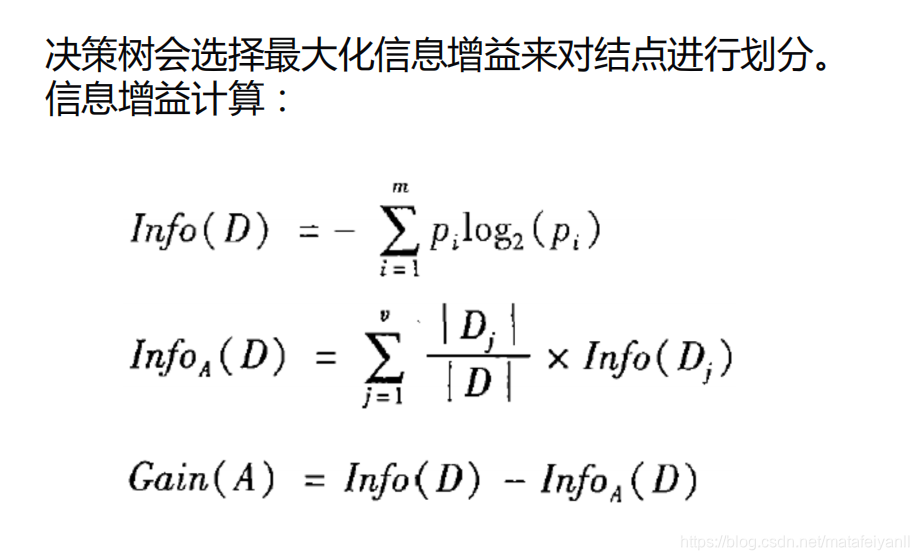

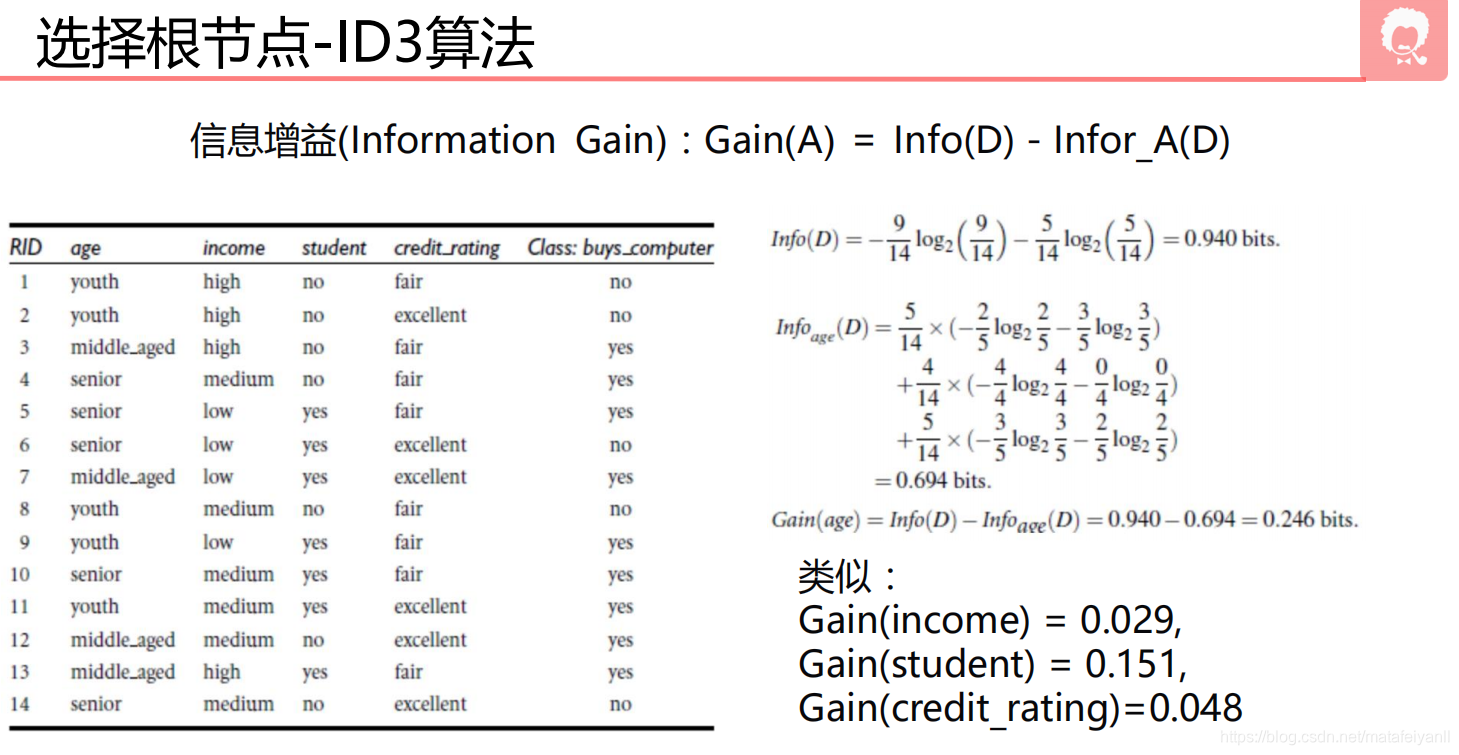

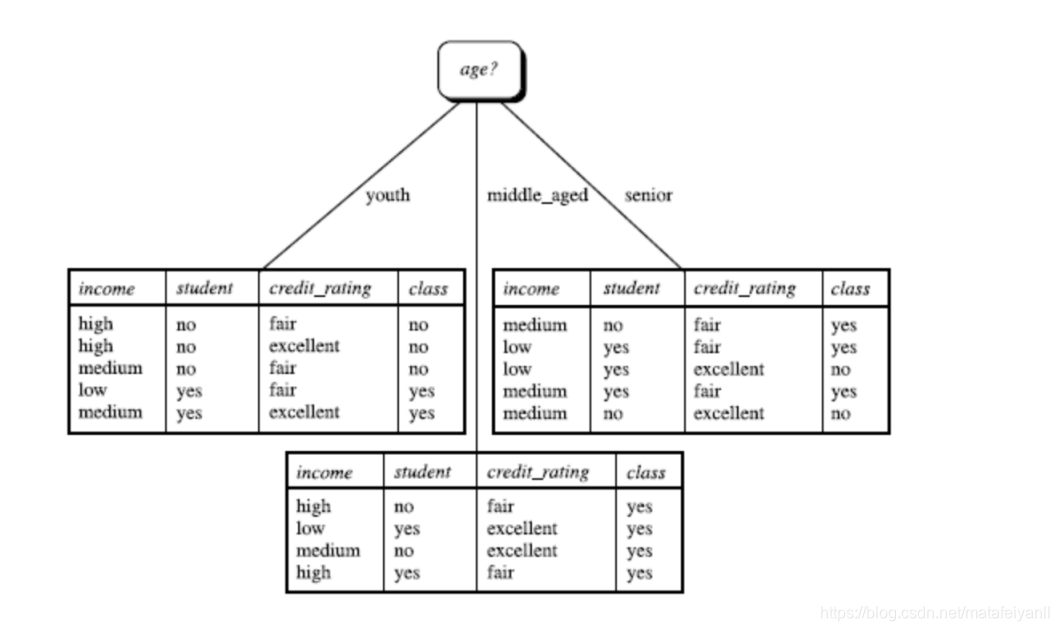
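As a quick numeric check of the idea, the sketch below computes Shannon entropy, H(X) = -Σ p_i log2 p_i, for a list of class labels. It assumes this standard definition is the one shown in the figures above; the function name `entropy` and the sample label lists are illustrative, not taken from the original post.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Illustrative label lists (assumptions, not data from the post)
fair_coin = entropy(["heads", "tails"])          # two equally likely outcomes
certain = entropy(["yes", "yes", "yes", "yes"])  # only one possible outcome
skewed = entropy(["yes"] * 9 + ["no"] * 5)       # a 9-to-5 split

print(fair_coin, certain, skewed)
```

A fair coin carries 1 bit of uncertainty, a certain outcome carries none, and a 9-to-5 split comes out at roughly 0.94 bits.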

The ID3 algorithm

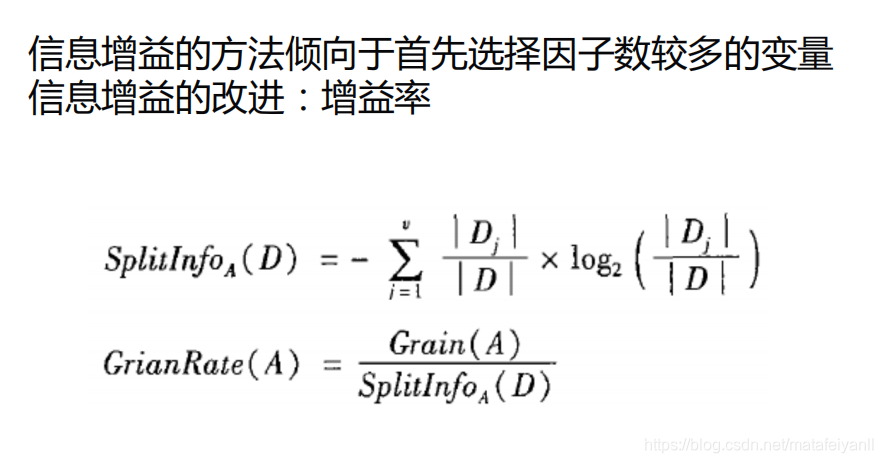
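ID3 is usually described as choosing, at each node, the attribute with the largest information gain: the entropy of the labels minus the weighted entropy after splitting on that attribute. The sketch below applies that rule to the AllElectronics attributes used in the implementation that follows. The attribute columns match the feature list printed by that code; the class labels are never printed in this post, so the `labels` list is an assumption taken from the widely used textbook version of this dataset. Function names are illustrative.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(labels).values())


def information_gain(values, labels):
    """Entropy of labels minus the weighted entropy after splitting on values."""
    groups = defaultdict(list)
    for value, label in zip(values, labels):
        groups[value].append(label)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Attribute columns, in row order, as they appear in the printed feature list
columns = {
    "age": ["youth", "youth", "middle_aged", "senior", "senior", "senior",
            "middle_aged", "youth", "youth", "senior", "youth",
            "middle_aged", "middle_aged", "senior"],
    "income": ["high", "high", "high", "medium", "low", "low", "low",
               "medium", "low", "medium", "medium", "medium", "high", "medium"],
    "student": ["no", "no", "no", "no", "yes", "yes", "yes",
                "no", "yes", "yes", "yes", "no", "yes", "no"],
    "credit_rating": ["fair", "excellent", "fair", "fair", "fair", "excellent",
                      "excellent", "fair", "fair", "fair", "excellent",
                      "excellent", "fair", "excellent"],
}

# ASSUMPTION: class_buys_computer labels from the textbook dataset,
# not shown anywhere in this post
labels = ["no", "no", "yes", "yes", "yes", "no", "yes",
          "no", "yes", "yes", "yes", "yes", "yes", "no"]

gains = {name: information_gain(col, labels) for name, col in columns.items()}
best = max(gains, key=gains.get)

for name, gain in sorted(gains.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {gain:.3f}")
print("root split:", best)
```

Under these assumed labels, `age` has the highest gain, so it would become the root of the tree; ID3 then repeats the same selection inside each branch.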

The C4.5 algorithm
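Only the heading survives for this part, so the following is a hedged sketch rather than the author's own explanation. C4.5 is commonly described as replacing information gain with gain ratio: the gain divided by the split information, which is the entropy of the attribute's own value distribution. This penalizes attributes with many distinct values. The toy data, the `row_id` column, and all function names below are hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy(items):
    """Shannon entropy, in bits, of a sequence of discrete values."""
    total = len(items)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(items).values())


def information_gain(values, labels):
    """Entropy of labels minus the weighted entropy after splitting on values."""
    groups = defaultdict(list)
    for value, label in zip(values, labels):
        groups[value].append(label)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def gain_ratio(values, labels):
    """Information gain normalized by the entropy of the attribute itself."""
    split_info = entropy(values)
    if split_info == 0:  # attribute has a single value, so it cannot split
        return 0.0
    return information_gain(values, labels) / split_info


# Hypothetical toy data: row_id is unique per row, student is partly informative
labels = ["yes", "yes", "yes", "no", "no", "no"]
row_id = [1, 2, 3, 4, 5, 6]
student = ["yes", "yes", "yes", "no", "no", "yes"]

for name, col in [("row_id", row_id), ("student", student)]:
    print(name, round(information_gain(col, labels), 3),
          round(gain_ratio(col, labels), 3))
```

Plain information gain favors the useless `row_id` column because every row lands in its own pure group, while gain ratio ranks `student` higher.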

Implementation

from sklearn.feature_extraction import DictVectorizer

from sklearn import tree

from sklearn import preprocessing

import csv

import numpy as np

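# Read the data
with open('AllElectronics.csv', 'r', newline='') as f:
    reader = csv.reader(f)

    # The first row holds the column names
    headers = next(reader)
    print(headers)

    # One feature dict and one label per record
    featureList = []
    labelList = []

    for row in reader:
        # The last column is the class label
        labelList.append(row[-1])

        # Map attribute names to values, skipping the RID column (index 0) and the label
        rowDict = {}
        for i in range(1, len(row) - 1):
            rowDict[headers[i]] = row[i]

        # Store the feature dict for this record
        featureList.append(rowDict)

print(featureList)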

'''

['RID', 'age', 'income', 'student', 'credit_rating', 'class_buys_computer']

[{'age': 'youth', 'income': 'high', 'student': 'no', 'credit_rating': 'fair'}, {'age': 'youth', 'income': 'high', 'student': 'no', 'credit_rating': 'excellent'}, {'age': 'middle_aged', 'income': 'high', 'student': 'no', 'credit_rating': 'fair'}, {'age': 'senior', 'income': 'medium', 'student': 'no', 'credit_rating': 'fair'}, {'age': 'senior', 'income': 'low', 'student': 'yes', 'credit_rating': 'fair'}, {'age': 'senior', 'income': 'low', 'student': 'yes', 'credit_rating': 'excellent'}, {'age': 'middle_aged', 'income': 'low', 'student': 'yes', 'credit_rating': 'excellent'}, {'age': 'youth', 'income': 'medium', 'student': 'no', 'credit_rating': 'fair'}, {'age': 'youth', 'income': 'low', 'student': 'yes', 'credit_rating': 'fair'}, {'age': 'senior', 'income': 'medium', 'student': 'yes', 'credit_rating': 'fair'}, {'age': 'youth', 'income': 'medium', 'student': 'yes', 'credit_rating': 'excellent'}, {'age': 'middle_aged', 'income': 'medium', 'student': 'no', 'credit_rating': 'excellent'}, {'age': 'middle_aged', 'income': 'high', 'student': 'yes', 'credit_rating': 'fair'}, {'age': 'senior', 'income': 'medium', 'student': 'no', 'credit_rating': 'excellent'}]

'''

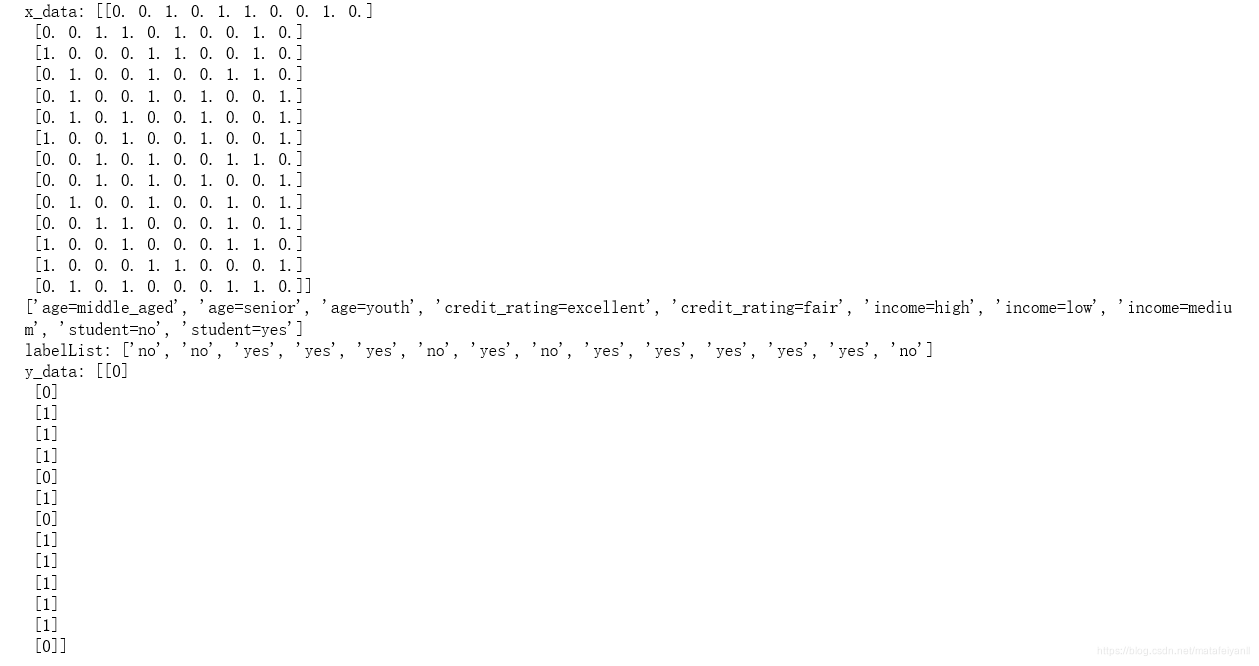

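# One-hot encode the categorical features as 0/1 columns
vec = DictVectorizer()
x_data = vec.fit_transform(featureList).toarray()
print("x_data: " + str(x_data))

# Print the encoded feature names
# (get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out())
print(vec.get_feature_names_out())

# Print the labels
print("labelList: " + str(labelList))

# Encode the labels as 0/1
lb = preprocessing.LabelBinarizer()
y_data = lb.fit_transform(labelList)
print("y_data: " + str(y_data))

# Create the decision tree; criterion='entropy' splits on information gain, as ID3 does
# (scikit-learn's tree is an optimized CART, so this only approximates ID3)
model = tree.DecisionTreeClassifier(criterion='entropy')

# Fit the model
model.fit(x_data, y_data)

# Test on the first training sample
x_test = x_data[0]
print("x_test: " + str(x_test))

# predict() expects a 2-D array of shape (n_samples, n_features)
X_test = x_test.reshape(1, -1)
print(X_test)

# For comparison: np.newaxis here yields a column of shape (n_features, 1),
# which is not a valid input for predict()
X_test1 = x_data[0, :, np.newaxis]
print(X_test1)

predict = model.predict(X_test)
print("predict: " + str(predict))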

x_test: [0. 0. 1. 0. 1. 1. 0. 0. 1. 0.]

[[0. 0. 1. 0. 1. 1. 0. 0. 1. 0.]]

[[0.]

[0.]

[1.]

[0.]

[1.]

[1.]

[0.]

[0.]

[1.]

[0.]]

predict: [0]

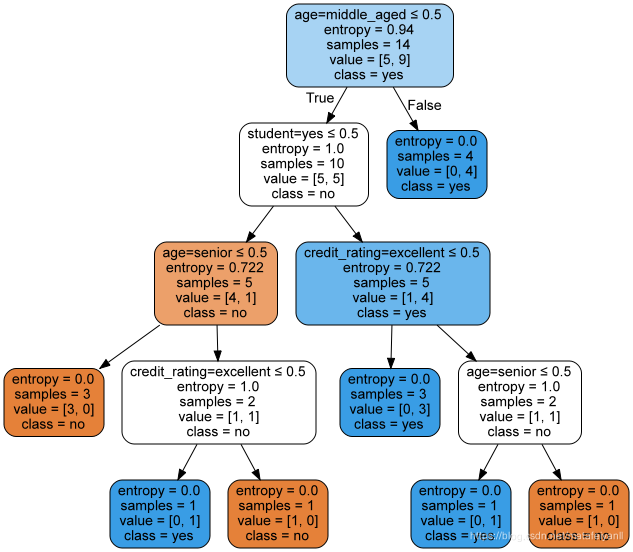
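The test above reuses a training row and prints the raw 0/1 prediction. To classify a new record, the usual pattern is to encode it with the already fitted vectorizer (`transform`, not `fit_transform`) and map the predicted index back to its label through `lb.classes_`. The sketch below is self-contained and uses a hypothetical four-row dataset, not the AllElectronics file.

```python
from sklearn.feature_extraction import DictVectorizer
from sklearn.preprocessing import LabelBinarizer
from sklearn.tree import DecisionTreeClassifier

# Hypothetical toy records in the same dict format as featureList
records = [
    {"age": "youth", "student": "no"},
    {"age": "youth", "student": "yes"},
    {"age": "senior", "student": "no"},
    {"age": "senior", "student": "yes"},
]
labels = ["no", "yes", "no", "yes"]  # hypothetical: only students buy

vec = DictVectorizer(sparse=False)   # dense output, so no .toarray() needed
X = vec.fit_transform(records)

lb = LabelBinarizer()
y = lb.fit_transform(labels).ravel()  # flatten the (n, 1) column to shape (n,)

model = DecisionTreeClassifier(criterion="entropy", random_state=0)
model.fit(X, y)

# Encode a new record with the fitted vectorizer so the columns line up
new_customer = {"age": "youth", "student": "yes"}
encoded = vec.transform([new_customer])

pred = model.predict(encoded)    # array holding a single 0 or 1
label = lb.classes_[pred[0]]     # map the index back to the original label
print(label)
```

Calling `fit_transform` on the new record instead would build a fresh column layout that no longer matches the one the tree was trained on.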

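# Export the decision tree
# Requires the Python package (pip install graphviz) and the Graphviz
# binaries from http://www.graphviz.org/
import graphviz

dot_data = tree.export_graphviz(model,
                                out_file=None,
                                feature_names=list(vec.get_feature_names_out()),
                                class_names=list(lb.classes_),
                                filled=True,
                                rounded=True,
                                special_characters=True)

graph = graphviz.Source(dot_data)

# Writes the DOT source to 'computer' and the rendered tree to 'computer.pdf'
graph.render('computer')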

Source: CSDN

Author: 马踏飞燕&lin_li

Link: https://blog.csdn.net/matafeiyanll/article/details/104173534