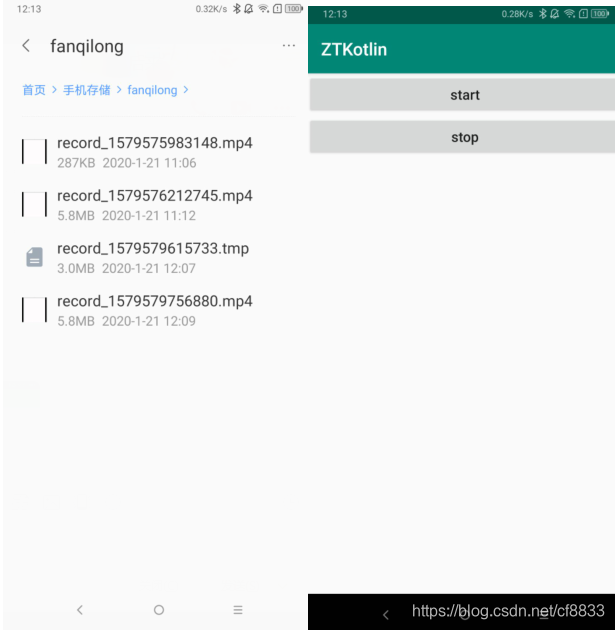

1.效果图:

2. Add dependencies

dependencies {

implementation fileTree(dir: 'libs', include: ['*.jar'])

implementation "org.jetbrains.kotlin:kotlin-stdlib-jdk7:$kotlin_version"

implementation 'androidx.appcompat:appcompat:1.1.0'

implementation 'androidx.core:core-ktx:1.0.2'

implementation 'androidx.constraintlayout:constraintlayout:1.1.3'

testImplementation 'junit:junit:4.12'

androidTestImplementation 'androidx.test.ext:junit:1.1.1'

androidTestImplementation 'androidx.test.espresso:espresso-core:3.2.0'

api 'com.blankj:utilcode:1.24.4'

}

repositories {

mavenCentral()

}

3. Declare permissions:

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

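4. Main activity:

test.aac is the music that gets added to the screen recording. You can replace it with any other audio file placed in the assets folder.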

package com.ufi.pdioms.ztkotlin

import android.content.Intent

import android.content.res.AssetFileDescriptor

import android.media.MediaPlayer

import android.os.Build

import androidx.appcompat.app.AppCompatActivity

import android.os.Bundle

import android.util.Log

import android.widget.Toast

import com.blankj.utilcode.util.PathUtils

import kotlinx.android.synthetic.main.activity_main.*

class MainActivity : AppCompatActivity() {

// https://github.com/fanqilongmoli/AndroidScreenRecord

private var screenRecordHelper: ScreenRecordHelper? = null

private val afdd:AssetFileDescriptor by lazy { assets.openFd("test.aac") }

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

setContentView(R.layout.activity_main)

btnStart.setOnClickListener {

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

if (screenRecordHelper == null) {

screenRecordHelper = ScreenRecordHelper(this, object : ScreenRecordHelper.OnVideoRecordListener {

override fun onBeforeRecord() {

}

override fun onStartRecord() {

play()

}

override fun onCancelRecord() {

releasePlayer()

}

override fun onEndRecord() {

releasePlayer()

}

}, PathUtils.getExternalStoragePath() + "/fanqilong")

}

screenRecordHelper?.apply {

if (!isRecording) {

// To record audio (ambient sound will always be picked up), enable the flag below and use the no-argument stopRecord()

// recordAudio = true

startRecord()

}

}

} else {

Toast.makeText(this@MainActivity.applicationContext, "Sorry, your phone does not support screen recording", Toast.LENGTH_LONG).show()

}

}

btnStop.setOnClickListener {

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

screenRecordHelper?.apply {

if (isRecording) {

if (mediaPlayer != null) {

// If you call stopRecord with arguments, recording audio has no effect: the asset audio is muxed in instead

stopRecord(mediaPlayer!!.duration.toLong(), 15 * 1000, afdd)

} else {

stopRecord()

}

}

}

}

}

}

private fun play() {

mediaPlayer = MediaPlayer()

try {

mediaPlayer?.apply {

this.reset()

this.setDataSource(afdd.fileDescriptor, afdd.startOffset, afdd.length)

this.isLooping = true

this.prepare()

this.start()

}

} catch (e: Exception) {

Log.e("fanqilong", "Failed to play music", e)

} finally {

}

}

// Audio playback

private var mediaPlayer: MediaPlayer? = null

private fun releasePlayer() {

mediaPlayer?.apply {

stop()

release()

}

mediaPlayer = null

}

override fun onActivityResult(requestCode: Int, resultCode: Int, data: Intent?) {

super.onActivityResult(requestCode, resultCode, data)

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP && data != null) {

screenRecordHelper?.onActivityResult(requestCode, resultCode, data)

}

}

override fun onDestroy() {

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {

screenRecordHelper?.clearAll()

}

afdd.close()

super.onDestroy()

}

}
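The stop handler above passes the player's total duration and a fixed `15 * 1000` ms to `stopRecord`, and the helper later keeps only `length * videoDuration / audioDuration` bytes of the audio asset. The following is a minimal sketch of that proportion, assuming a roughly constant bitrate so that bytes scale linearly with time. The function and parameter names are hypothetical and are not part of `ScreenRecordHelper`, and the clamp is an addition that the original code does not have.

```kotlin
/**
 * Estimates how many bytes of an audio asset cover a clip of the given length.
 * Assumption: the audio has a roughly constant bitrate.
 * Hypothetical helper for illustration only.
 */
fun trimmedAudioLength(assetLengthBytes: Long, audioDurationMs: Long, clipDurationMs: Long): Long {
    require(assetLengthBytes >= 0) { "assetLengthBytes must not be negative" }
    require(audioDurationMs > 0) { "audioDurationMs must be positive" }
    require(clipDurationMs >= 0) { "clipDurationMs must not be negative" }
    // Same proportion as `length * videoDuration / audioDuration` in syntheticAudio.
    val proportional = assetLengthBytes * clipDurationMs / audioDurationMs
    // Clamp so a clip longer than the track never reads past the end of the asset.
    return minOf(proportional, assetLengthBytes)
}
```

For a 600,000-byte asset lasting 60 s, a 15 s clip maps to 150,000 bytes. Without the clamp, a clip longer than the track would request more bytes than the asset holds.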

5. Screen recording code

package com.ufi.pdioms.ztkotlin

import android.app.Activity

import android.content.Context

import android.content.Intent

import android.content.pm.PackageManager

import android.content.res.AssetFileDescriptor

import android.hardware.display.DisplayManager

import android.hardware.display.VirtualDisplay

import android.media.*

import android.media.projection.MediaProjection

import android.media.projection.MediaProjectionManager

import android.net.Uri

import android.os.Build

import android.os.Environment

import android.os.Handler

import android.util.DisplayMetrics

import android.util.Log

import android.widget.Toast

import androidx.annotation.RequiresApi

import com.blankj.utilcode.constant.PermissionConstants

import com.blankj.utilcode.util.PermissionUtils

import java.io.File

import java.lang.Exception

import java.nio.ByteBuffer

@RequiresApi(Build.VERSION_CODES.LOLLIPOP)

class ScreenRecordHelper @JvmOverloads constructor(

private var activity: Activity,

private val listener: OnVideoRecordListener?,

private var savePath: String = Environment.getExternalStorageDirectory().absolutePath + File.separator

+ "DCIM" + File.separator + "Camera",

private val saveName: String = "record_${System.currentTimeMillis()}"

) {

private val mediaProjectionManager by lazy { activity.getSystemService(Context.MEDIA_PROJECTION_SERVICE) as? MediaProjectionManager }

private var mediaRecorder: MediaRecorder? = null

private var mediaProjection: MediaProjection? = null

private var virtualDisplay: VirtualDisplay? = null

private val displayMetrics by lazy { DisplayMetrics() }

private var saveFile: File? = null

var isRecording = false

var recordAudio = false

init {

activity.windowManager.defaultDisplay.getMetrics(displayMetrics)

}

companion object {

private const val VIDEO_FRAME_RATE = 30

private const val REQUEST_CODE = 1024

private const val TAG = "ScreenRecordHelper"

}

fun startRecord() {

if (mediaProjectionManager == null) {

Log.d(TAG, "mediaProjectionManager == null, screen recording is not supported on this phone")

showToast(R.string.phone_not_support_screen_record)

return

}

PermissionUtils.permission(PermissionConstants.STORAGE, PermissionConstants.MICROPHONE)

.callback(object : PermissionUtils.SimpleCallback {

override fun onGranted() {

mediaProjectionManager?.apply {

listener?.onBeforeRecord()

val intent = this.createScreenCaptureIntent()

if (activity.packageManager.resolveActivity(

intent,

PackageManager.MATCH_DEFAULT_ONLY

) != null

) {

activity.startActivityForResult(intent, REQUEST_CODE)

} else {

showToast(R.string.phone_not_support_screen_record)

}

}

}

override fun onDenied() {

showToast(R.string.permission_denied)

}

}).request()

}

@RequiresApi(Build.VERSION_CODES.N)

fun resume() {

mediaRecorder?.resume()

}

@RequiresApi(Build.VERSION_CODES.N)

fun pause() {

mediaRecorder?.pause()

}

fun onActivityResult(requestCode: Int, resultCode: Int, data: Intent) {

if (requestCode == REQUEST_CODE) {

if (resultCode == Activity.RESULT_OK) {

mediaProjection = mediaProjectionManager!!.getMediaProjection(resultCode, data)

// On some phones a dialog pops up when recording video, hence the short delay

Handler().postDelayed({

if (initRecorder()) {

isRecording = true

mediaRecorder?.start()

listener?.onStartRecord()

} else {

showToast(R.string.phone_not_support_screen_record)

}

}, 150)

} else {

showToast(R.string.phone_not_support_screen_record)

}

}

}

fun cancelRecord(){

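// Use stop() rather than stopRecord(): stopRecord() renames the temp file and clears saveFile, so the delete below would do nothing

stop()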

saveFile?.delete()

saveFile = null

listener?.onCancelRecord()

}

fun stopRecord(videoDuration: Long = 0, audioDuration: Long = 0, afdd: AssetFileDescriptor? = null){

stop()

if (audioDuration != 0L && afdd != null) {

syntheticAudio(videoDuration, audioDuration, afdd)

} else {

// saveFile

if (saveFile != null) {

val newFile = File(savePath, "$saveName.mp4")

// Once recording ends, rename the file with the .mp4 extension

saveFile!!.renameTo(newFile)

refreshVideo(newFile)

}

saveFile = null

}

}

private fun refreshVideo(newFile: File) {

Log.d(TAG, "screen record end,file length:${newFile.length()}.")

if (newFile.length() > 5000) {

val intent = Intent(Intent.ACTION_MEDIA_SCANNER_SCAN_FILE)

intent.data = Uri.fromFile(newFile)

activity.sendBroadcast(intent)

Log.d(TAG, "refreshVideo: $savePath")

showToast(R.string.save_to_album_success)

} else {

newFile.delete()

showToast(R.string.phone_not_support_screen_record)

Log.d(TAG, activity.getString(R.string.record_faild))

}

}

private fun stop() {

if (isRecording) {

isRecording = false

try {

mediaRecorder?.apply {

setOnErrorListener(null)

setOnInfoListener(null)

setPreviewDisplay(null)

stop()

Log.d(TAG, "stop success")

}

} catch (e: Exception) {

Log.e(TAG, "stopRecorder() error!${e.message}")

} finally {

mediaRecorder?.reset()

virtualDisplay?.release()

mediaProjection?.stop()

listener?.onEndRecord()

}

}

}

private fun initRecorder(): Boolean {

var result = true

val f = File(savePath)

if (!f.exists()) {

// mkdirs() also creates missing parent directories (e.g. DCIM/Camera)

f.mkdirs()

}

saveFile = File(savePath, "$saveName.tmp")

saveFile?.apply {

if (exists()) {

delete()

}

}

mediaRecorder = MediaRecorder()

val width = Math.min(displayMetrics.widthPixels, 1080)

val height = Math.min(displayMetrics.heightPixels, 1920)

mediaRecorder?.apply {

if (recordAudio) {

setAudioSource(MediaRecorder.AudioSource.MIC)

}

setVideoSource(MediaRecorder.VideoSource.SURFACE)

setOutputFormat(MediaRecorder.OutputFormat.MPEG_4)

setVideoEncoder(MediaRecorder.VideoEncoder.H264)

if (recordAudio) {

setAudioEncoder(MediaRecorder.AudioEncoder.AMR_NB)

}

setOutputFile(saveFile!!.absolutePath)

setVideoSize(width, height)

setVideoEncodingBitRate(8388608)

setVideoFrameRate(VIDEO_FRAME_RATE)

try {

prepare()

virtualDisplay = mediaProjection?.createVirtualDisplay(

"MainScreen", width, height, displayMetrics.densityDpi,

DisplayManager.VIRTUAL_DISPLAY_FLAG_AUTO_MIRROR, surface, null, null

)

Log.d(TAG, "initRecorder succeeded")

} catch (e: Exception) {

Log.e(TAG, "Exception preparing MediaRecorder: ${e.message}")

e.printStackTrace()

result = false

}

}

return result

}

private fun showToast(resId: Int) {

Toast.makeText(activity.applicationContext, activity.applicationContext.getString(resId), Toast.LENGTH_SHORT)

.show()

}

fun clearAll() {

mediaRecorder?.release()

mediaRecorder = null

virtualDisplay?.release()

virtualDisplay = null

mediaProjection?.stop()

mediaProjection = null

}

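/**

* Muxes the recorded video with the audio asset. Based on

* https://stackoverflow.com/questions/31572067/android-how-to-mux-audio-file-and-video-file

*

* Note: stopRecord forwards its videoDuration as audioDuration here and vice versa,

* so the parameter names are swapped between the two functions.

*/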

private fun syntheticAudio(audioDuration: Long, videoDuration: Long, afdd: AssetFileDescriptor) {

Log.d(TAG, "start syntheticAudio")

val newFile = File(savePath, "$saveName.mp4")

if (newFile.exists()) {

newFile.delete()

}

try {

newFile.createNewFile()

val videoExtractor = MediaExtractor()

videoExtractor.setDataSource(saveFile!!.absolutePath)

val audioExtractor = MediaExtractor()

afdd.apply {

audioExtractor.setDataSource(fileDescriptor, startOffset, length * videoDuration / audioDuration)

}

val muxer = MediaMuxer(newFile.absolutePath, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4)

videoExtractor.selectTrack(0)

val videoFormat = videoExtractor.getTrackFormat(0)

val videoTrack = muxer.addTrack(videoFormat)

audioExtractor.selectTrack(0)

val audioFormat = audioExtractor.getTrackFormat(0)

val audioTrack = muxer.addTrack(audioFormat)

var sawEOS = false

var frameCount = 0

val offset = 100

val sampleSize = 1000 * 1024

val videoBuf = ByteBuffer.allocate(sampleSize)

val audioBuf = ByteBuffer.allocate(sampleSize)

val videoBufferInfo = MediaCodec.BufferInfo()

val audioBufferInfo = MediaCodec.BufferInfo()

videoExtractor.seekTo(0, MediaExtractor.SEEK_TO_CLOSEST_SYNC)

audioExtractor.seekTo(0, MediaExtractor.SEEK_TO_CLOSEST_SYNC)

muxer.start()

// Frames per second

// Tested on an OPPO R9em: the extracted format has no MediaFormat.KEY_FRAME_RATE

val frameRate = if (videoFormat.containsKey(MediaFormat.KEY_FRAME_RATE)) {

videoFormat.getInteger(MediaFormat.KEY_FRAME_RATE)

} else {

31

}

// Average interval between frames, in microseconds

val videoSampleTime = 1000 * 1000 / frameRate

while (!sawEOS) {

videoBufferInfo.offset = offset

videoBufferInfo.size = videoExtractor.readSampleData(videoBuf, offset)

if (videoBufferInfo.size < 0) {

sawEOS = true

videoBufferInfo.size = 0

} else {

videoBufferInfo.presentationTimeUs += videoSampleTime

videoBufferInfo.flags = videoExtractor.sampleFlags

muxer.writeSampleData(videoTrack, videoBuf, videoBufferInfo)

videoExtractor.advance()

frameCount++

}

}

var sawEOS2 = false

var frameCount2 = 0

while (!sawEOS2) {

frameCount2++

audioBufferInfo.offset = offset

audioBufferInfo.size = audioExtractor.readSampleData(audioBuf, offset)

if (audioBufferInfo.size < 0) {

sawEOS2 = true

audioBufferInfo.size = 0

} else {

audioBufferInfo.presentationTimeUs = audioExtractor.sampleTime

audioBufferInfo.flags = audioExtractor.sampleFlags

muxer.writeSampleData(audioTrack, audioBuf, audioBufferInfo)

audioExtractor.advance()

}

}

muxer.stop()

muxer.release()

videoExtractor.release()

audioExtractor.release()

// Delete the silent video file

saveFile?.delete()

} catch (e: Exception) {

Log.e(TAG, "Mixer Error:${e.message}")

// Muxing the audio into the video failed, so save the video as-is

saveFile?.renameTo(newFile)

} finally {

afdd.close()

Handler().post {

refreshVideo(newFile)

saveFile = null

}

}

}

interface OnVideoRecordListener {

/**

* Hide unnecessary UI when recording starts

*/

fun onBeforeRecord()

/**

* Recording started

*/

fun onStartRecord()

/**

* Recording cancelled

*/

fun onCancelRecord()

/**

* Recording ended

*/

fun onEndRecord()

}

}
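The video loop in `syntheticAudio` does not reuse the extractor's own sample times. It derives a fixed interval from the frame rate (`1000 * 1000 / frameRate`) and adds it to `presentationTimeUs` for every sample. The sketch below reproduces just that arithmetic in plain Kotlin so the resulting timestamps are easy to inspect. Both function names are hypothetical and do not appear in the helper class.

```kotlin
/** Average interval between frames in microseconds, using integer division as in syntheticAudio. */
fun frameIntervalUs(frameRate: Int): Long {
    require(frameRate > 0) { "frameRate must be positive" }
    return 1_000_000L / frameRate
}

/**
 * Synthesizes presentation timestamps for `frameCount` frames by accumulating the interval.
 * Because the interval is added before each write, the first frame lands one interval
 * after zero, mirroring the `+=` in the video loop.
 */
fun syntheticTimestampsUs(frameCount: Int, frameRate: Int): List<Long> {
    require(frameCount >= 0) { "frameCount must not be negative" }
    val interval = frameIntervalUs(frameRate)
    return (1..frameCount).map { it * interval }
}
```

At 30 fps the interval is 33,333 µs, and with the fallback of 31 it is 32,258 µs. Because the integer division truncates, the synthesized timeline runs slightly short of real time over long recordings. Taking the timestamps from the extractor's sample times, as the audio loop does, would avoid that drift.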

6. Layout

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:orientation="vertical"

tools:context=".MainActivity">

<Button android:id="@+id/btnStart"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:textAllCaps="false"

android:text="start"/>

<Button android:id="@+id/btnStop"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:textAllCaps="false"

android:text="stop"/>

</LinearLayout>

end

Source: CSDN

Author: cf8833

Link: https://blog.csdn.net/cf8833/article/details/104060655