Introduction to Redis Cluster

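Redis Cluster is the official clustering solution for Redis: a distributed network made up of multiple nodes, each of which is either a master or a replica (slave). Every master should have at least one replica so that the cluster stays available when a master fails. Masters serve reads and writes. Multi-key commands such as mset/mget only work when all of the keys involved hash to the same slot: keys are spread across the nodes, and moving values between nodes to serve such a command would hurt performance and make behaviour unpredictable under heavy load. Hash tags, for example {user1}:a and {user1}:b, can be used to force related keys into one slot. Nodes can be added and removed online, and a client can connect to any node: a request for a key that lives elsewhere is redirected to the master that owns it.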

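Redis Cluster uses sharding (partitioning): each master is one shard, so the stored data is spread across all shards. When a master is added or removed, slots and the keys in them have to be migrated to or from the other masters with a reshard or rebalance operation; the migration can run while the cluster is online.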

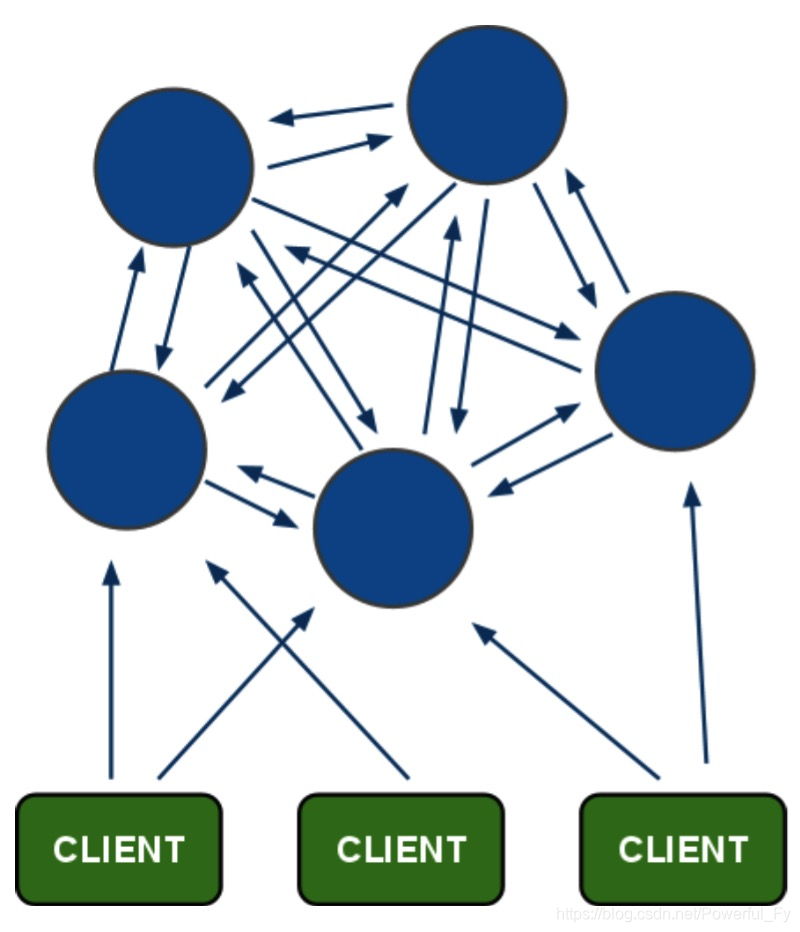

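As the figure shows, every node communicates with every other node. By default the port used for this cluster bus is the node's Redis service port + 10000 (for example 17000 for a node serving on 7000), so make sure the firewall allows it as well as the service port. Nodes talk to each other with a binary protocol, which keeps bandwidth consumption low.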
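As a small illustration of the port rule, the sketch below lists the TCP ports a firewall would have to allow for a set of instances. The helper name ports_to_open and the constant CLUSTER_BUS_OFFSET are names made up for this example; only the +10000 offset comes from the text.

```python
CLUSTER_BUS_OFFSET = 10000  # offset between service port and cluster bus port


def ports_to_open(service_ports):
    """Return the sorted service ports together with their cluster bus ports."""
    ports = set()
    for port in service_ports:
        ports.add(port)                       # client traffic
        ports.add(port + CLUSTER_BUS_OFFSET)  # node-to-node traffic
    return sorted(ports)


if __name__ == "__main__":
    print(ports_to_open([7000, 7001, 7002]))
```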

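Redis Cluster has the concept of a hash slot. The number of slots is fixed at 16384, and the slots are divided among the master nodes. Each key is mapped to a slot by a hash function: slot = CRC16(key) mod 16384. The same computation is done when a key is written and when it is read, so the slot number tells the client which node holds the value.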
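The mapping from key to slot can be reproduced outside Redis. The following is a minimal sketch, assuming the CRC16 variant (XMODEM, polynomial 0x1021, initial value 0) and the hash tag rule given in the Redis Cluster specification; the function names are chosen for this example and are not part of any Redis API.

```python
HASH_SLOTS = 16384


def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def hash_tag_part(key: bytes) -> bytes:
    """Return the part of the key that is hashed, honouring {hash tags}."""
    start = key.find(b"{")
    if start == -1:
        return key
    end = key.find(b"}", start + 1)
    if end == -1 or end == start + 1:  # no closing brace, or empty tag "{}"
        return key
    return key[start + 1:end]


def key_slot(key: str) -> int:
    """Slot number (0..16383) that a key is assigned to."""
    return crc16_xmodem(hash_tag_part(key.encode("utf-8"))) % HASH_SLOTS


if __name__ == "__main__":
    for name in ("key", "key2", "{user1000}.following", "{user1000}.followers"):
        print(name, key_slot(name))
```

Keys that share a hash tag, such as the two {user1000} keys, land in the same slot, which is what makes multi-key commands on them possible.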

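Redis Cluster cannot guarantee strong consistency. Replication is asynchronous: as soon as a write has been stored on the master, the master tells the client it succeeded. Waiting for every replica to confirm before answering the client would add a latency that clients cannot tolerate. Redis Cluster therefore gives up strong consistency in favour of performance, which means an acknowledged write can be lost if the master fails before the write reaches a replica.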
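The consequence of acknowledging before replicating can be shown with a toy model. This is only a simulation written for this article, with made-up class and function names; it does not talk to Redis and does not model the real replication protocol.

```python
class ToyMaster:
    """Toy master that acknowledges writes before replicating them."""

    def __init__(self):
        self.data = {}
        self.pending = []  # acknowledged writes not yet sent to the replica

    def set(self, key, value):
        self.data[key] = value
        self.pending.append((key, value))
        return "OK"  # the client is told OK before any replica has the write

    def replicate_to(self, replica):
        """Flush pending writes to a replica (a plain dict in this model)."""
        for key, value in self.pending:
            replica[key] = value
        self.pending.clear()


def demo():
    master, replica = ToyMaster(), {}
    master.set("a", 1)
    master.replicate_to(replica)  # "a" reaches the replica
    master.set("b", 2)            # acknowledged, but the master crashes here
    return replica                # data left after the replica is promoted


if __name__ == "__main__":
    print(demo())
```

In this model the write to b was acknowledged but is missing after failover, which is the window of data loss described above.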

Creating the cluster

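Because only a limited number of machines is available, the redis cluster here is built on two machines, each running 3 redis instances. This layout is suitable for testing only: with two machines, one of them necessarily hosts a majority of the 3 masters, and if that machine goes down the remaining master cannot gather enough votes to carry out a failover.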

Machine A (192.168.234.128), ports of the 3 redis instances: 7000 7001 7002

Machine B (192.168.234.130), ports of the 3 redis instances: 7003 7004 7005

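After compiling and installing redis on both machines, copy and edit 3 redis.conf configuration files on each machine, giving each one its own port, data directory and cluster-related parameters, then start the 6 redis instances.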

On machine A (192.168.234.128):

Create the configuration file for port 7000:

[root@linux ~]# vim /etc/redis_7000.conf

Configuration file contents:

port 7000

bind 192.168.234.128

daemonize yes

pidfile /var/run/redis_7000.pid

dir /data/redis/7000

cluster-enabled yes

cluster-config-file nodes_7000.conf

cluster-node-timeout 10100

appendonly yes

Create the configuration file for port 7001:

[root@linux ~]# vim /etc/redis_7001.conf

Configuration file contents:

port 7001

bind 192.168.234.128

daemonize yes

pidfile /var/run/redis_7001.pid

dir /data/redis/7001

cluster-enabled yes

cluster-config-file nodes_7001.conf

cluster-node-timeout 10100

appendonly yes

Create the configuration file for port 7002:

[root@linux ~]# vim /etc/redis_7002.conf

Configuration file contents:

port 7002

bind 192.168.234.128

daemonize yes

pidfile /var/run/redis_7002.pid

dir /data/redis/7002

cluster-enabled yes

cluster-config-file nodes_7002.conf

cluster-node-timeout 10100

appendonly yes

On machine B (192.168.234.130):

Create the configuration file for port 7003:

[root@linux02 ~]# vim /etc/redis_7003.conf

Configuration file contents:

port 7003

bind 192.168.234.130

daemonize yes

pidfile /var/run/redis_7003.pid

dir /data/redis/7003

cluster-enabled yes

cluster-config-file nodes_7003.conf

cluster-node-timeout 10100

appendonly yes

Create the configuration file for port 7004:

[root@linux02 ~]# vim /etc/redis_7004.conf

Configuration file contents:

port 7004

bind 192.168.234.130

daemonize yes

pidfile /var/run/redis_7004.pid

dir /data/redis/7004

cluster-enabled yes

cluster-config-file nodes_7004.conf

cluster-node-timeout 10100

appendonly yes

Create the configuration file for port 7005:

[root@linux02 ~]# vim /etc/redis_7005.conf

Configuration file contents:

port 7005

bind 192.168.234.130

daemonize yes

pidfile /var/run/redis_7005.pid

dir /data/redis/7005

cluster-enabled yes

cluster-config-file nodes_7005.conf

cluster-node-timeout 10100

appendonly yes
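The six configuration files differ only in port and bind address, so they can also be generated instead of edited by hand. The script below is one possible sketch: the template repeats the settings shown above, while the function names and the choice of writing into a temporary directory (rather than /etc) are assumptions made for this example.

```python
import tempfile
from pathlib import Path

# Same settings as the hand-written files above; only ip and port vary.
CONFIG_TEMPLATE = """\
port {port}
bind {ip}
daemonize yes
pidfile /var/run/redis_{port}.pid
dir /data/redis/{port}
cluster-enabled yes
cluster-config-file nodes_{port}.conf
cluster-node-timeout 10100
appendonly yes
"""

NODES = {
    "192.168.234.128": [7000, 7001, 7002],
    "192.168.234.130": [7003, 7004, 7005],
}


def render_config(ip, port):
    """Return the text of the configuration file for one instance."""
    return CONFIG_TEMPLATE.format(ip=ip, port=port)


def write_configs(ip, out_dir):
    """Write redis_<port>.conf for every port of the given machine."""
    paths = []
    for port in NODES[ip]:
        path = Path(out_dir) / f"redis_{port}.conf"
        path.write_text(render_config(ip, port))
        paths.append(path)
    return paths


if __name__ == "__main__":
    target = tempfile.mkdtemp()  # copy the files to /etc after reviewing them
    for written in write_configs("192.168.234.128", target):
        print(written)
```

Run it once per machine with that machine's IP address, review the output, then copy the files to /etc.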

Create the data directories (dir) on machine A:

[root@linux ~]# mkdir -p /data/redis/{7000,7001,7002}

Create the data directories (dir) on machine B:

[root@linux02 ~]# mkdir -p /data/redis/{7003,7004,7005}

Start redis on machine A:

[root@linux ~]# redis-server /etc/redis_7000.conf

[root@linux ~]# redis-server /etc/redis_7001.conf

[root@linux ~]# redis-server /etc/redis_7002.conf

[root@linux ~]# ps aux|grep redis

root 8169 0.1 0.2 153900 2932 ? Ssl 15:17 0:00 redis-server 192.168.234.128:7000 [cluster]

root 8174 0.1 0.2 153900 2924 ? Ssl 15:17 0:00 redis-server 192.168.234.128:7001 [cluster]

root 8180 0.1 0.2 153900 2928 ? Ssl 15:17 0:00 redis-server 192.168.234.128:7002 [cluster]

Start redis on machine B:

[root@linux02 ~]# redis-server /etc/redis_7003.conf

[root@linux02 ~]# redis-server /etc/redis_7004.conf

[root@linux02 ~]# redis-server /etc/redis_7005.conf

[root@linux02 ~]# ps aux|grep redis

root 12318 0.1 0.2 144540 2464 ? Ssl 15:23 0:00 redis-server 192.168.234.130:7003 [cluster]

root 12323 0.1 0.2 144540 2460 ? Ssl 15:23 0:00 redis-server 192.168.234.130:7004 [cluster]

root 12328 0.0 0.2 144540 2460 ? Ssl 15:23 0:00 redis-server 192.168.234.130:7005 [cluster]

Build the cluster:

[root@linux ~]# redis-cli --cluster create 192.168.234.128:7000 192.168.234.128:7001 192.168.234.128:7002 192.168.234.130:7003 192.168.234.130:7004 192.168.234.130:7005 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.234.130:7005 to 192.168.234.128:7000

Adding replica 192.168.234.128:7002 to 192.168.234.130:7003

Adding replica 192.168.234.130:7004 to 192.168.234.128:7001

M: ba42b28cdb6eac91cef0b5550386be27a93075d7 192.168.234.128:7000

slots:[0-5460] (5461 slots) master

M: e1d33357114913bcf068ea63a9885aaa857312da 192.168.234.128:7001

slots:[10923-16383] (5461 slots) master

S: 651769bf76d89027ebad794b81338521e4a66558 192.168.234.128:7002

replicates 91f98e0070ca065fc58a97d8b62e4167bb9a1e4d

M: 91f98e0070ca065fc58a97d8b62e4167bb9a1e4d 192.168.234.130:7003

slots:[5461-10922] (5462 slots) master

S: ca4d398cf758e903eeec5916839813d0ae220cc3 192.168.234.130:7004

replicates e1d33357114913bcf068ea63a9885aaa857312da

S: 189caae16aa05c8483d39af9b9ccb1734849eaf6 192.168.234.130:7005

replicates ba42b28cdb6eac91cef0b5550386be27a93075d7

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 192.168.234.128:7000)

M: ba42b28cdb6eac91cef0b5550386be27a93075d7 192.168.234.128:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: e1d33357114913bcf068ea63a9885aaa857312da 192.168.234.128:7001

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: ca4d398cf758e903eeec5916839813d0ae220cc3 192.168.234.130:7004

slots: (0 slots) slave

replicates e1d33357114913bcf068ea63a9885aaa857312da

S: 189caae16aa05c8483d39af9b9ccb1734849eaf6 192.168.234.130:7005

slots: (0 slots) slave

replicates ba42b28cdb6eac91cef0b5550386be27a93075d7

S: 651769bf76d89027ebad794b81338521e4a66558 192.168.234.128:7002

slots: (0 slots) slave

replicates 91f98e0070ca065fc58a97d8b62e4167bb9a1e4d

M: 91f98e0070ca065fc58a97d8b62e4167bb9a1e4d 192.168.234.130:7003

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

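#--cluster-replicas 1 means each master gets one replica (set it to 2 for two replicas per master). The 16384 slots are divided evenly among the 3 masters. When a master goes down, its replica is automatically promoted to master and takes over the master's slots; when the old master comes back, it rejoins as a replica.

192.168.234.130:7005 is the replica of 192.168.234.128:7000

192.168.234.128:7002 is the replica of 192.168.234.130:7003

192.168.234.130:7004 is the replica of 192.168.234.128:7001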
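The slot ranges printed during cluster creation (0 - 5460, 5461 - 10922, 10923 - 16383) follow from dividing 16384 by the number of masters and rounding. The sketch below reproduces that arithmetic; it is an illustration that happens to match the output above, not redis-cli's own code, and the function name is invented for this example.

```python
TOTAL_SLOTS = 16384


def split_slots(master_count):
    """Divide slots 0..16383 into contiguous ranges, one per master."""
    per_master = TOTAL_SLOTS / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for index in range(master_count):
        # Round to the nearest integer (half rounds up for these values).
        last = int(cursor + per_master - 1 + 0.5)
        if index == master_count - 1 or last > TOTAL_SLOTS - 1:
            last = TOTAL_SLOTS - 1  # the last master takes what remains
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


if __name__ == "__main__":
    for number, (first, last) in enumerate(split_slots(3)):
        print(f"Master[{number}] -> Slots {first} - {last} ({last - first + 1} slots)")
```

Because 16384 is not divisible by 3, one master ends up with 5462 slots and the other two with 5461, as in the output above.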

Managing the cluster

Connect to a redis instance in the cluster:

[root@linux ~]# redis-cli -c -h 192.168.234.130 -p 7004

192.168.234.130:7004>

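#-c enables cluster mode so that redis-cli follows redirects; -h specifies the ip and -p the port of the redis instance to connect to

Create key-value pairs: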

192.168.234.130:7004> set key 1

-> Redirected to slot [12539] located at 192.168.234.128:7001

OK

192.168.234.128:7001> set key2 1

-> Redirected to slot [4998] located at 192.168.234.128:7000

OK

192.168.234.128:7000> set key3 1

OK

192.168.234.128:7000> keys *

1) "key3"

2) "key2"

192.168.234.128:7000>

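#The connection was made to the replica 130:7004, but the key was stored on the master 128:7001, and the key created while connected to 128:7001 was stored on 128:7000. Each key is assigned to a slot computed from its name, and redis-cli is redirected to the master that owns that slot. Note that keys * only lists the keys held by the node currently connected to.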
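The redirects in the session above can be checked against the slot ranges assigned when the cluster was created. The lookup below is a minimal sketch of the kind of slot-to-node table a cluster-aware client keeps; the table is copied from this article's cluster, and the function name is an assumption for the example.

```python
import bisect

# (first slot, last slot, master) as assigned when this cluster was created.
SLOT_TABLE = [
    (0, 5460, "192.168.234.128:7000"),
    (5461, 10922, "192.168.234.130:7003"),
    (10923, 16383, "192.168.234.128:7001"),
]
_RANGE_STARTS = [first for first, _, _ in SLOT_TABLE]


def master_for_slot(slot):
    """Return the ip:port of the master that owns the given slot."""
    if not 0 <= slot <= 16383:
        raise ValueError(f"slot out of range: {slot}")
    # Find the last range whose first slot is <= slot.
    index = bisect.bisect_right(_RANGE_STARTS, slot) - 1
    return SLOT_TABLE[index][2]


if __name__ == "__main__":
    print(master_for_slot(12539))  # slot reported for "key"
    print(master_for_slot(4998))   # slot reported for "key2"
```

Slot 12539 falls in the range owned by 128:7001 and slot 4998 in the range owned by 128:7000, matching the two redirects.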

Check the state of the cluster: redis-cli --cluster check followed by the ip:port of any node

[root@linux ~]# redis-cli --cluster check 192.168.234.128:7000

192.168.234.128:7000 (ba42b28c...) -> 2 keys | 5461 slots | 1 slaves.

192.168.234.128:7001 (e1d33357...) -> 1 keys | 5461 slots | 1 slaves.

192.168.234.130:7003 (91f98e00...) -> 0 keys | 5462 slots | 1 slaves.

[OK] 3 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.234.128:7000)

M: ba42b28cdb6eac91cef0b5550386be27a93075d7 192.168.234.128:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: e1d33357114913bcf068ea63a9885aaa857312da 192.168.234.128:7001

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: ca4d398cf758e903eeec5916839813d0ae220cc3 192.168.234.130:7004

slots: (0 slots) slave

replicates e1d33357114913bcf068ea63a9885aaa857312da

S: 189caae16aa05c8483d39af9b9ccb1734849eaf6 192.168.234.130:7005

slots: (0 slots) slave

replicates ba42b28cdb6eac91cef0b5550386be27a93075d7

S: 651769bf76d89027ebad794b81338521e4a66558 192.168.234.128:7002

slots: (0 slots) slave

replicates 91f98e0070ca065fc58a97d8b62e4167bb9a1e4d

M: 91f98e0070ca065fc58a97d8b62e4167bb9a1e4d 192.168.234.130:7003

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Add a node (assuming the new node is 1.1.1.1:6379):

redis-cli --cluster add-node 1.1.1.1:6379 192.168.234.128:7000

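#Give the ip:port of the node to add, followed by the ip:port of any node already in the cluster; a node added this way is a master, and it holds no slots until some are assigned to it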

redis-cli --cluster add-node 1.1.1.1:6379 192.168.234.128:7000 --cluster-slave

#A node added this way is a replica

redis-cli --cluster add-node 1.1.1.1:6379 192.168.234.128:7000 --cluster-slave --cluster-master-id xxxxxx

#A node added this way is a replica of the master whose node id is given

Assign slots to the newly added node:

redis-cli --cluster reshard 192.168.234.128:7000 #the ip:port can be that of any node

How many slots do you want to move (from 1 to 16384)? #how many slots to move

What is the receiving node ID? #node id of the node that receives the slots

Source node #1: #node id of the first source master; enter all to take slots from every master

Source node #2: #node id of the second source master; enter done when there are no more sources
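To decide what to answer at the first prompt, a common choice when growing from 3 to 4 masters is 16384 / 4 = 4096 slots. The sketch below estimates how many slots each existing master would give up when all is entered, assuming slots are taken from each source in proportion to what it owns, with the largest source rounded up and the others rounded down. That rounding rule is an assumption for illustration; the plan redis-cli prints before asking for confirmation is what actually applies.

```python
import math


def reshard_plan(source_slots, slots_to_move):
    """Estimate how many slots each source master gives up.

    source_slots maps a node name to the number of slots it owns.
    """
    total = sum(source_slots.values())
    # Largest owner first; it absorbs the rounding remainder.
    ordered = sorted(source_slots.items(), key=lambda item: item[1], reverse=True)
    plan = {}
    for index, (node, owned) in enumerate(ordered):
        share = slots_to_move / total * owned
        plan[node] = math.ceil(share) if index == 0 else math.floor(share)
    return plan


if __name__ == "__main__":
    masters = {
        "192.168.234.128:7000": 5461,
        "192.168.234.128:7001": 5461,
        "192.168.234.130:7003": 5462,
    }
    print(reshard_plan(masters, 16384 // 4))
```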

Balance the number of slots across the nodes:

redis-cli --cluster rebalance --cluster-threshold 1 192.168.234.128:7000

#The ip:port can be that of any node

Remove a node:

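redis-cli --cluster del-node 192.168.234.128:7000 nodeid

#The ip:port can be that of any node; nodeid is the id of the node to remove. A master must be emptied before it is removed: move all of its slots to other masters with redis-cli --cluster reshard, otherwise the command fails. If any remaining node still lists the removed node afterwards, run cluster forget nodeid on that node so that it forgets it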

Import data from a redis instance outside the cluster into the cluster:

redis-cli --cluster import 192.168.234.128:7000 --cluster-from 192.168.1.10:6379 --cluster-copy

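#--cluster-from takes the ip and port of the external redis instance. --cluster-copy leaves the keys in the source instance instead of moving them. With --cluster-copy alone, the keys being imported must not already exist in the cluster; to overwrite keys that already exist, combine --cluster-copy with --cluster-replace, so that the cluster's copy is replaced by the external one

Source: CSDN

Author: Asnfy

Link: https://blog.csdn.net/Powerful_Fy/article/details/103562537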