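First, start a container from the basic ubuntu image and give it a looping shell command so that it keeps running instead of exiting right away.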

iie4bu@hostdocker:~/ddy/docker-flask$ docker run -d --name test1 ubuntu:xenial /bin/bash -c "while true; do sleep 3600; done"

b21a9d817e2557afaac4f453e0856094afcaea2efba020008a6a6c2431e7d0b3

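iie4bu@hostdocker:~/ddy/docker-flask$

The --name option assigns a name to the container.

Start a second container in the same way, this time with --name test2, then list the running containers: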

iie4bu@hostdocker:~/ddy/docker-flask$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d85b091d4deb ubuntu:xenial "/bin/bash -c 'while…" 4 seconds ago Up 3 seconds test2

b21a9d817e25 ubuntu:xenial "/bin/bash -c 'while…" 3 minutes ago Up 3 minutes test1

Now we have two containers created from the same image: test1 and test2.

Next, check their IP addresses.

First install ifconfig and ping in each container:

iie4bu@hostdocker:~/ddy/docker-flask$ docker exec -it d85b091d4deb /bin/bash

root@d85b091d4deb:/# apt-get update

root@d85b091d4deb:/# apt install -y net-tools # installs ifconfig

root@d85b091d4deb:/# apt-get install -y iputils-ping

Once both containers have ifconfig installed, check their interfaces:

iie4bu@hostdocker:~$ docker exec d85b091d4deb ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:ac:11:00:03

inet addr:172.17.0.3 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:13372 errors:0 dropped:0 overruns:0 frame:0

TX packets:13134 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:28530516 (28.5 MB) TX bytes:1176567 (1.1 MB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

iie4bu@hostdocker:~$ docker exec b21a9d817e25 ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:ac:11:00:02

inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:13081 errors:0 dropped:0 overruns:0 frame:0

TX packets:12850 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:28511437 (28.5 MB) TX bytes:1148463 (1.1 MB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

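iie4bu@hostdocker:~$

Running docker exec this way executes the command directly, without opening an interactive shell. The two containers have the IPs 172.17.0.2 and 172.17.0.3, which shows that each has its own network namespace. They can also ping each other: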

iie4bu@hostdocker:~$ docker exec b21a9d817e25 ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.103 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.063 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.063 ms

64 bytes from 172.17.0.3: icmp_seq=4 ttl=64 time=0.062 ms

64 bytes from 172.17.0.3: icmp_seq=5 ttl=64 time=0.061 ms

64 bytes from 172.17.0.3: icmp_seq=6 ttl=64 time=0.060 ms

^C

iie4bu@hostdocker:~$ docker exec d85b091d4deb ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.151 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.093 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.068 ms

^C

This shows that the two containers can communicate with each other.
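The two containers can reach each other directly because their addresses sit in the same network. As a minimal sketch, the check below derives the network from the addresses and mask printed by ifconfig above; the helper name same_subnet is only illustrative.

```python
import ipaddress


def same_subnet(addr_a: str, addr_b: str, netmask: str) -> bool:
    """Return True if both addresses fall in the same network for the given mask."""
    net_a = ipaddress.ip_interface(f"{addr_a}/{netmask}").network
    net_b = ipaddress.ip_interface(f"{addr_b}/{netmask}").network
    return net_a == net_b


# Addresses and mask taken from the ifconfig output above.
print(ipaddress.ip_interface("172.17.0.2/255.255.0.0").network)  # 172.17.0.0/16
print(same_subnet("172.17.0.2", "172.17.0.3", "255.255.0.0"))    # True
```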

docker network ls lists the networks Docker has on this machine:

iie4bu@hostdocker:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

b7c11f829aac bridge bridge local

xbo08wnv73f1 dgraph_dgraph overlay swarm

b55a3535504f docker_gwbridge bridge local

c8406d5c4e72 host host local

uz1kgf9j6m48 ingress overlay swarm

d55fa090559d none null local

iie4bu@hostdocker:~$

To see which network the test1 container uses, run docker network inspect with the network ID or name:

iie4bu@hostdocker:~$ docker network inspect b7c11f829aac

[

{

"Name": "bridge",

"Id": "b7c11f829aacbfe6578b556865d2bbd6d2276442cb58099ae2edb7167b85b365",

"Created": "2019-06-26T09:52:55.529849773+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"b21a9d817e2557afaac4f453e0856094afcaea2efba020008a6a6c2431e7d0b3": {

"Name": "test1",

"EndpointID": "336ee00007605e54a8e09c1d1d04d16c2920c70681809d6cfde45b996abe41ac",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"d85b091d4debbacc1f3901bde6296ff793e9151bac49b31afe738f9f51f10ca4": {

"Name": "test2",

"EndpointID": "9a8afbac1de0f778f7bf4f4fc4783b421edac874a6b99b956044eaf6088c6929",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

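iie4bu@hostdocker:~$

The output contains a Containers section that lists test1 and test2, which means both containers are attached to the bridge network. When Docker creates a container, it uses bridge by default.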
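When the inspect output gets long, the Containers section can be read programmatically. The sketch below parses a copy of the JSON above, abbreviated to the fields it uses; the function name attached_containers is an assumption made for this example.

```python
import json

# Abbreviated copy of the "docker network inspect" output shown above.
INSPECT_OUTPUT = """
[
  {
    "Name": "bridge",
    "Containers": {
      "b21a9d817e2557afaac4f453e0856094afcaea2efba020008a6a6c2431e7d0b3": {
        "Name": "test1",
        "IPv4Address": "172.17.0.2/16"
      },
      "d85b091d4debbacc1f3901bde6296ff793e9151bac49b31afe738f9f51f10ca4": {
        "Name": "test2",
        "IPv4Address": "172.17.0.3/16"
      }
    }
  }
]
"""


def attached_containers(inspect_json: str) -> dict[str, str]:
    """Map container name -> IPv4 address for the first network in the output."""
    network = json.loads(inspect_json)[0]
    return {
        info["Name"]: info["IPv4Address"].split("/")[0]  # drop the prefix length
        for info in network["Containers"].values()
    }


print(attached_containers(INSPECT_OUTPUT))
```

In practice the JSON text would come from running docker network inspect and capturing its standard output.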

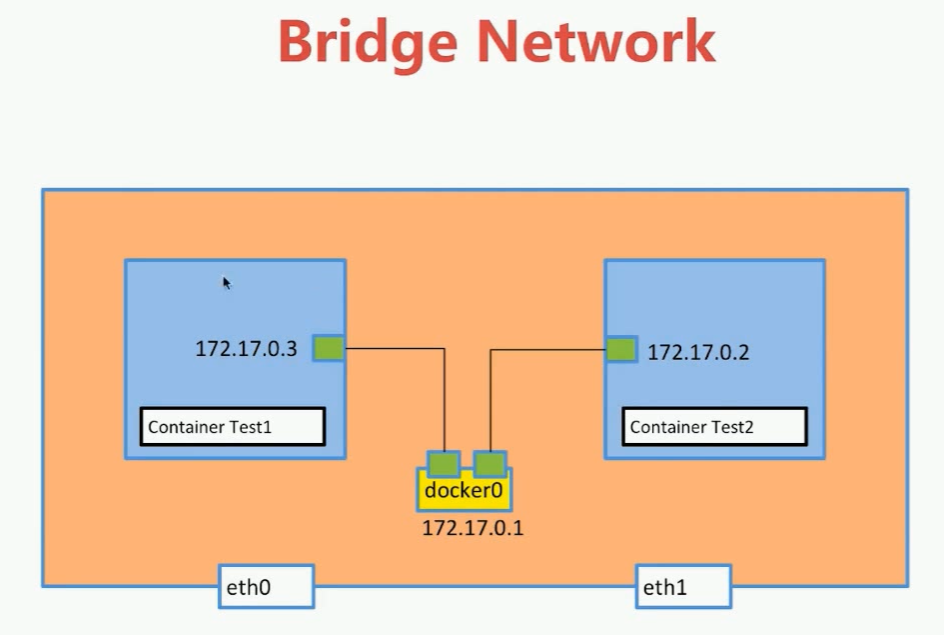

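What is the bridge network?

Docker containers use bridge-mode networking by default. Its characteristics are:

- It uses a Linux bridge, docker0 by default

- It uses veth pairs: one end sits in the container's network namespace, the other is attached to docker0

- In this mode a container has no publicly reachable IP, because its veth address is on a private subnet (172.17.0.0/16 here) that differs from the host's own network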

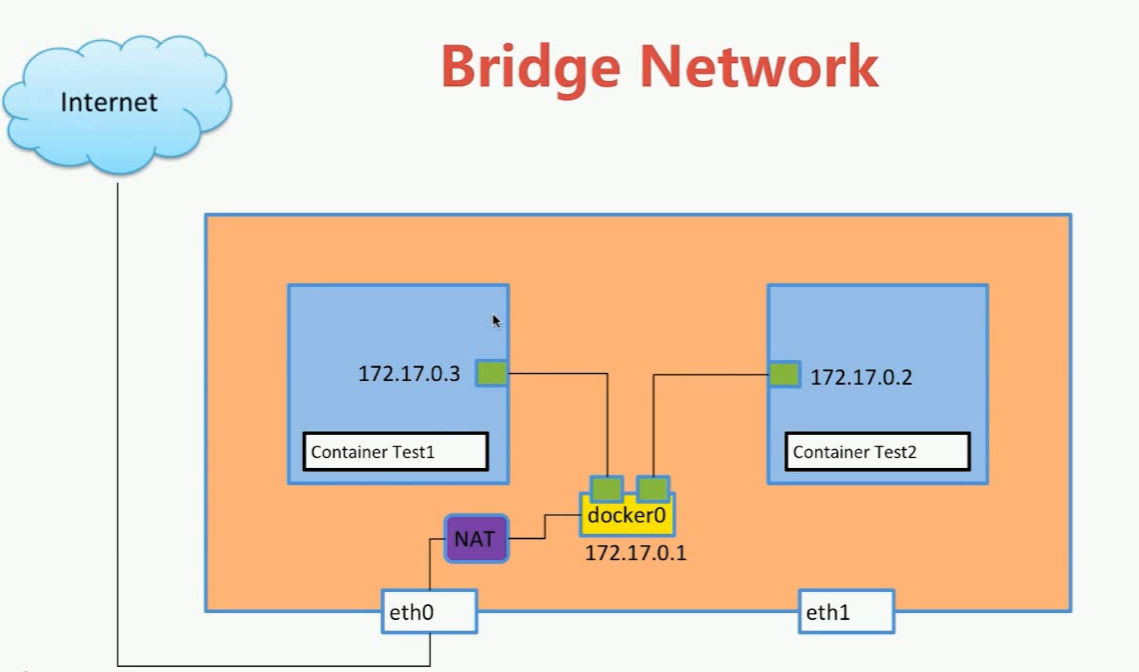

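- Docker uses NAT to bind a port that a service listens on inside the container to a port on the host, so that the world outside the host can initiate connections to the container

- To reach a service in a container from outside, clients connect to the host's IP and the bound host port

- Because NAT works at layer 3 and rewrites every packet, it adds some overhead to network throughput

- The container has its own isolated network stack and communicates with the world outside the host through NAT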
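The port binding described in the list above can be pictured as a lookup table on the host. The following is a toy model only, not Docker's implementation (which relies on the host's packet filtering rules): the class PortMapper, the host port 8080 and the container port 80 are all assumptions made for illustration, while the two IP addresses come from the outputs in this article.

```python
from typing import NamedTuple, Optional


class Endpoint(NamedTuple):
    ip: str
    port: int


class PortMapper:
    """Toy model of host-port -> container-endpoint bindings."""

    def __init__(self, host_ip: str) -> None:
        self.host_ip = host_ip
        self._bindings: dict[int, Endpoint] = {}

    def publish(self, host_port: int, container: Endpoint) -> None:
        """Bind a host port to a container endpoint; a port can be bound once."""
        if host_port in self._bindings:
            raise ValueError(f"host port {host_port} is already bound")
        self._bindings[host_port] = container

    def translate(self, dest: Endpoint) -> Optional[Endpoint]:
        """Rewrite an incoming destination, or return None if nothing is bound."""
        if dest.ip != self.host_ip:
            return None
        return self._bindings.get(dest.port)


# Hypothetical binding: host 192.168.152.45:8080 -> test1 (172.17.0.2) port 80.
nat = PortMapper("192.168.152.45")
nat.publish(8080, Endpoint("172.17.0.2", 80))
print(nat.translate(Endpoint("192.168.152.45", 8080)))
```

An outside client only ever sees the host address and port; the container address stays private to the host.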

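Listing the host's interfaces (with ifconfig or ip a) shows, besides eno1, eno2, eno3, eno4, lo and so on, a docker0 interface and several veth interfaces.

docker0 lives in the host's network namespace, while test1 has a network namespace of its own. Connecting the two namespaces requires a veth pair.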

iie4bu@hostdocker:~$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether e0:07:1b:f2:07:ac brd ff:ff:ff:ff:ff:ff

inet 192.168.152.45/24 brd 192.168.152.255 scope global eno1

valid_lft forever preferred_lft forever

inet6 2400:dd01:1001:152:5632:5c7f:ff0c:4834/64 scope global noprefixroute dynamic

valid_lft 2591792sec preferred_lft 604592sec

inet6 fe80::ca14:40ee:6eeb:838e/64 scope link

valid_lft forever preferred_lft forever

3: eno2: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether e0:07:1b:f2:07:ad brd ff:ff:ff:ff:ff:ff

4: eno3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether e0:07:1b:f2:07:ae brd ff:ff:ff:ff:ff:ff

517: veth6d03a1f@if516: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether d6:a6:dd:97:1d:ae brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet6 fe80::d4a6:ddff:fe97:1dae/64 scope link

valid_lft forever preferred_lft forever

5: eno4: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether e0:07:1b:f2:07:af brd ff:ff:ff:ff:ff:ff

6: vmnet1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

link/ether 00:50:56:c0:00:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.128.1/24 brd 192.168.128.255 scope global vmnet1

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fec0:1/64 scope link

valid_lft forever preferred_lft forever

519: veth3413b16@if518: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 9e:f6:57:94:dd:51 brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet6 fe80::9cf6:57ff:fe94:dd51/64 scope link

valid_lft forever preferred_lft forever

7: vmnet8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

link/ether 00:50:56:c0:00:08 brd ff:ff:ff:ff:ff:ff

inet 192.168.205.1/24 brd 192.168.205.255 scope global vmnet8

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fec0:8/64 scope link

valid_lft forever preferred_lft forever

14: docker_gwbridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:65:5e:eb:4a brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global docker_gwbridge

valid_lft forever preferred_lft forever

inet6 fe80::42:65ff:fe5e:eb4a/64 scope link

valid_lft forever preferred_lft forever

17: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:6e:91:dc:15 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:6eff:fe91:dc15/64 scope link

valid_lft forever preferred_lft forever

467: veth92ff8a3@if466: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker_gwbridge state UP group default

link/ether 4e:99:82:5f:87:a5 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::4c99:82ff:fe5f:87a5/64 scope link

valid_lft forever preferred_lft forever

How can we prove that test1 is connected to docker0?

Use the brctl command (provided by the bridge-utils package).

iie4bu@hostdocker:~$ brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.02426e91dc15 no veth3413b16

veth6d03a1f

docker_gwbridge 8000.0242655eeb4a no veth92ff8a3

Both veth3413b16 and veth6d03a1f are attached to docker0.

This explains why the two containers can communicate.
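The same bridge membership is visible in the ip a output, where each veth line names its bridge after the word master. As a rough sketch, the snippet below groups interfaces by that field; the sample lines are copied from the output above, and the function name ports_by_bridge is illustrative.

```python
import re
from collections import defaultdict

# Interface header lines copied from the "ip a" output above.
IP_A_LINES = [
    "517: veth6d03a1f@if516: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default",
    "519: veth3413b16@if518: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default",
    "17: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default",
    "467: veth92ff8a3@if466: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker_gwbridge state UP group default",
]

# index, interface name, optional "@peer" suffix, then "master <bridge>" later on.
HEADER = re.compile(r"^\d+: (?P<name>[^:@]+)(?:@[^:]+)?: .*? master (?P<master>\S+)")


def ports_by_bridge(lines: list[str]) -> dict[str, list[str]]:
    """Group interface names by the bridge named after 'master'."""
    bridges: dict[str, list[str]] = defaultdict(list)
    for line in lines:
        match = HEADER.match(line)
        if match:  # lines without "master" (such as docker0 itself) are skipped
            bridges[match["master"]].append(match["name"])
    return dict(bridges)


print(ports_by_bridge(IP_A_LINES))
# {'docker0': ['veth6d03a1f', 'veth3413b16'], 'docker_gwbridge': ['veth92ff8a3']}
```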

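How, then, does a single container reach the Internet? As listed among the bridge-mode characteristics, through NAT: the container sends its traffic to the gateway on docker0 (172.17.0.1), and the host masquerades that traffic behind its own IP address.

Custom bridge network

Create a new bridge network with docker network create -d bridge my-bridge: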

iie4bu@hostdocker:~$ docker network create -d bridge my-bridge

02b4d20593cc255f87b9b1d7414baf3865892593b061a5d43beb2fe720bb926a

iie4bu@hostdocker:~$

This creates a new network that uses the bridge driver.

List the networks:

iie4bu@hostdocker:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

b7c11f829aac bridge bridge local

xbo08wnv73f1 dgraph_dgraph overlay swarm

b55a3535504f docker_gwbridge bridge local

c8406d5c4e72 host host local

uz1kgf9j6m48 ingress overlay swarm

02b4d20593cc my-bridge bridge local

d55fa090559d none null local

A new network, my-bridge, has been added, and its driver is bridge.

iie4bu@hostdocker:~$ brctl show

bridge name bridge id STP enabled interfaces

br-02b4d20593cc 8000.0242705676cf no

docker0 8000.02426e91dc15 no veth4f89fa1

veth6d03a1f

docker_gwbridge 8000.0242655eeb4a no veth92ff8a3

brctl show now lists the bridge for our new network, br-02b4d20593cc.
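The bridge name is not random: in the outputs here it is br- followed by the first 12 characters of the network ID returned by docker network create. A small sketch of that relationship follows; the function name is an assumption, and the rule does not apply when a name is set explicitly through the com.docker.network.bridge.name option, as it is for docker0.

```python
def bridge_interface_name(network_id: str) -> str:
    """Build the bridge interface name seen in brctl show from a network ID."""
    return "br-" + network_id[:12]


# Network ID printed by "docker network create -d bridge my-bridge" above.
MY_BRIDGE_ID = "02b4d20593cc255f87b9b1d7414baf3865892593b061a5d43beb2fe720bb926a"
print(bridge_interface_name(MY_BRIDGE_ID))  # br-02b4d20593cc
```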

When creating a container, use --network to choose which network it connects to.

Create a container named test3 and attach it to the my-bridge network we created:

iie4bu@hostdocker:~$ docker run -d --name test3 --network my-bridge vincent/ubuntu-update /bin/sh -c "while true; do sleep 3600; done"

b72de1141605ff177b7ac7e84746fa63112d50708f986d28fa3dd3ac08fb51e4

iie4bu@hostdocker:~$

brctl show now reports a new interface on br-02b4d20593cc (there was none before):

iie4bu@hostdocker:~$ brctl show

bridge name bridge id STP enabled interfaces

br-02b4d20593cc 8000.0242705676cf no vethde5dfd8

docker0 8000.02426e91dc15 no veth4f89fa1

veth6d03a1f

docker_gwbridge 8000.0242655eeb4a no veth92ff8a3

iie4bu@hostdocker:~$

Inspect the network with docker network inspect my-bridge:

iie4bu@hostdocker:~$ docker network inspect my-bridge

[

{

"Name": "my-bridge",

"Id": "02b4d20593cc255f87b9b1d7414baf3865892593b061a5d43beb2fe720bb926a",

"Created": "2019-06-27T16:46:54.65888934+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.19.0.0/16",

"Gateway": "172.19.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"b72de1141605ff177b7ac7e84746fa63112d50708f986d28fa3dd3ac08fb51e4": {

"Name": "test3",

"EndpointID": "bde438c3113311d41e2ee9151ef6f37f237864b75d46c626566457e34b98673e",

"MacAddress": "02:42:ac:13:00:02",

"IPv4Address": "172.19.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

iie4bu@hostdocker:~$

The test3 container's IP is 172.19.0.2.
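In every inspect output in this article, the MacAddress field is the prefix 02:42 followed by the four bytes of the container's IPv4 address in hexadecimal. The sketch below reproduces that pattern. It is an observation about these outputs rather than a guarantee, since other Docker versions or an explicitly assigned MAC address may differ, and the function name is illustrative.

```python
import ipaddress


def mac_from_ipv4(ip: str, prefix: tuple[int, int] = (0x02, 0x42)) -> str:
    """Format prefix bytes plus the four IPv4 bytes as a MAC address string."""
    octets = ipaddress.IPv4Address(ip).packed  # 4 raw bytes, e.g. ac 13 00 02
    return ":".join(f"{byte:02x}" for byte in bytes(prefix) + octets)


print(mac_from_ipv4("172.19.0.2"))  # 02:42:ac:13:00:02 (test3 on my-bridge)
print(mac_from_ipv4("172.17.0.3"))  # 02:42:ac:11:00:03 (test2 on bridge)
```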

The test1 and test2 containers created earlier can also be connected to the new my-bridge network, using docker network connect my-bridge test2:

iie4bu@hostdocker:~$ docker network connect my-bridge test2

iie4bu@hostdocker:~$ docker network inspect my-bridge

[

{

"Name": "my-bridge",

"Id": "02b4d20593cc255f87b9b1d7414baf3865892593b061a5d43beb2fe720bb926a",

"Created": "2019-06-27T16:46:54.65888934+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.19.0.0/16",

"Gateway": "172.19.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"7fe6f95d299275781222faf451f77d7c6d31589069755d4a2ddead770c7ace50": {

"Name": "test2",

"EndpointID": "5265ebe0aeabb08bb6be546b27bf3f51fd472ceb0ce1b1ff4bfe00aff73d2963",

"MacAddress": "02:42:ac:13:00:03",

"IPv4Address": "172.19.0.3/16",

"IPv6Address": ""

},

"b72de1141605ff177b7ac7e84746fa63112d50708f986d28fa3dd3ac08fb51e4": {

"Name": "test3",

"EndpointID": "bde438c3113311d41e2ee9151ef6f37f237864b75d46c626566457e34b98673e",

"MacAddress": "02:42:ac:13:00:02",

"IPv4Address": "172.19.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

iie4bu@hostdocker:~$

test2 is now connected to my-bridge as well, where it has been given the address 172.19.0.3.

Now inspect the default bridge:

iie4bu@hostdocker:~$ docker network inspect bridge

[

{

"Name": "bridge",

"Id": "b7c11f829aacbfe6578b556865d2bbd6d2276442cb58099ae2edb7167b85b365",

"Created": "2019-06-26T09:52:55.529849773+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"7fe6f95d299275781222faf451f77d7c6d31589069755d4a2ddead770c7ace50": {

"Name": "test2",

"EndpointID": "70886a332041f48fe32d41a2ef053d10ed2acdf1fc445e5c2a64ebe634883945",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"b21a9d817e2557afaac4f453e0856094afcaea2efba020008a6a6c2431e7d0b3": {

"Name": "test1",

"EndpointID": "336ee00007605e54a8e09c1d1d04d16c2920c70681809d6cfde45b996abe41ac",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

iie4bu@hostdocker:~$

Here test2 still has the IP 172.17.0.3, so the test2 container is connected to both bridge and my-bridge.
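A container attached to several networks has one address per network. As a minimal sketch, the two inspect outputs above can be turned into a per-container view; the NETWORKS table is transcribed by hand from those outputs and the function name is an assumption.

```python
from collections import defaultdict

# network name -> {container name: IPv4 address}, from the inspect outputs above.
NETWORKS = {
    "bridge": {"test1": "172.17.0.2", "test2": "172.17.0.3"},
    "my-bridge": {"test2": "172.19.0.3", "test3": "172.19.0.2"},
}


def addresses_by_container(networks: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Invert the table: container name -> {network name: IPv4 address}."""
    result: dict[str, dict[str, str]] = defaultdict(dict)
    for network, members in networks.items():
        for container, ip in members.items():
            result[container][network] = ip
    return dict(result)


for name, addrs in sorted(addresses_by_container(NETWORKS).items()):
    print(name, addrs)
```

Only test2 appears with two entries, one on each network.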

Try pinging test2 from test3:

iie4bu@hostdocker:~$ docker exec -it test3 ping 172.19.0.3

PING 172.19.0.3 (172.19.0.3) 56(84) bytes of data.

64 bytes from 172.19.0.3: icmp_seq=1 ttl=64 time=0.175 ms

64 bytes from 172.19.0.3: icmp_seq=2 ttl=64 time=0.095 ms

64 bytes from 172.19.0.3: icmp_seq=3 ttl=64 time=0.062 ms

64 bytes from 172.19.0.3: icmp_seq=4 ttl=64 time=0.068 ms

64 bytes from 172.19.0.3: icmp_seq=5 ttl=64 time=0.069 ms

^C

--- 172.19.0.3 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 3997ms

rtt min/avg/max/mdev = 0.062/0.093/0.175/0.043 ms

The ping succeeds. Now try pinging test2 by name. On the default bridge this would normally fail, because test3 and test2 have not been linked.

iie4bu@hostdocker:~$ docker exec -it test3 ping test2

PING test2 (172.19.0.3) 56(84) bytes of data.

64 bytes from test2.my-bridge (172.19.0.3): icmp_seq=1 ttl=64 time=0.100 ms

64 bytes from test2.my-bridge (172.19.0.3): icmp_seq=2 ttl=64 time=0.078 ms

64 bytes from test2.my-bridge (172.19.0.3): icmp_seq=3 ttl=64 time=0.062 ms

^C

--- test2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1998ms

rtt min/avg/max/mdev = 0.062/0.080/0.100/0.015 ms

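iie4bu@hostdocker:~$

The ping by name works. This is the exception to that rule.

The reason is that test2 and test3 are both connected to the bridge network we created ourselves rather than only to the default docker0. On a user-defined bridge Docker resolves container names automatically, as if the containers were already linked, so test2 and test3 can ping each other by name.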
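The rule can be summarized as: a name resolves only when both containers share a user-defined network. The toy model below encodes just that rule. It is not how Docker's built-in DNS is implemented, and the NETWORKS table and the function name resolve are assumptions based on the inspect outputs in this article.

```python
from typing import Optional

DEFAULT_BRIDGE = "bridge"

# network name -> {container name: IPv4 address}, from the inspect outputs above.
NETWORKS = {
    "bridge": {"test1": "172.17.0.2", "test2": "172.17.0.3"},
    "my-bridge": {"test2": "172.19.0.3", "test3": "172.19.0.2"},
}


def resolve(networks: dict[str, dict[str, str]], source: str, target: str) -> Optional[str]:
    """Return the IP that `source` gets for the name `target`, or None."""
    for network, members in networks.items():
        if network == DEFAULT_BRIDGE:
            continue  # the default bridge offers no automatic name resolution
        if source in members and target in members:
            return members[target]
    return None


print(resolve(NETWORKS, "test3", "test2"))  # 172.19.0.3
print(resolve(NETWORKS, "test1", "test2"))  # None
```

test1 and test2 share only the default bridge, so in this model test1 would still have to use test2's IP address or an explicit link.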

Source: oschina

Link: https://my.oschina.net/u/946962/blog/3066843