import re
import time

import redis
from lxml import etree
from selenium import webdriver


class Guazi:
    def __call__(self, *args, **kwargs):
        # Starting URL
        self.base_url = 'https://www.guazi.com/bj/buy'
        # Create the database connection
        self.r = self.get_redis()
        # Open pages through Selenium
        self.driver = self.get_driver()
        # URLs of all cities, without the trailing /buy
        self.city_url_list = self.get_city_url()
        # URL suffixes of all brands
        self.pai_url_list = self.get_city_pai()
        self.run()

    # Main routine
    def run(self):
        # Store every listing URL in the database
        # (uncomment to populate it before the first crawl)
        # self.get_cmp_url()
        # Read all stored URLs back from the database as a list
        complete_url_list = self.r.lrange("guazi_url_list", 0, -1)
        for complete_url in complete_url_list:
            try:
                # Redis returns bytes, so decode before use
                complete_url1 = str(complete_url, "utf-8")
                self.driver.get(complete_url1)
                # page_source returns the HTML of the loaded page
                html = self.driver.page_source
                # Parse into an element tree that supports XPath
                html_xml = etree.HTML(html)
                self.get_zuihou(html_xml)
            except Exception as e:
                print(e)

    # Read the maximum page number, combine it with the page suffix
    # (48865 URLs in total), and store the URLs in Redis
    def get_max_yema(self, html_xml, url):
        try:
            # Read the maximum page number with XPath
            max_ye = html_xml.xpath("//ul[@class='pageLink clearfix']/li[last()-1]/a/span/text()")
            # Part of the URL before the #bread fragment
            ba_url = re.findall(r'(.+)#bread', url)
            # Paginate only when both the page count and the URL prefix were found
            if max_ye and ba_url:
                for eve in range(int(max_ye[0])):
                    complete_url = ba_url[0] + 'o' + str(eve + 1)
                    # Store in the database
                    self.lpush(complete_url)
            else:
                # No pagination found: store the listing URL unchanged
                self.lpush(url)
        except Exception as e:
            print(e)

    def get_cmp_url(self):
        """Print and crawl the URL of every brand in every city, 43665 URLs in total."""
        for eve in self.city_url_list:
            for cvc in self.pai_url_list:
                # Build the URL for each brand in each city
                url = eve + cvc
                self.driver.get(url)
                html = self.driver.page_source
                html_xml = etree.HTML(html)
                time.sleep(0.5)
                # Look up the maximum page number, build the per-page URLs, and store them
                self.get_max_yema(html_xml, url)

    # Get the brand URL suffixes (231 brands)
    def get_city_pai(self):
        self.driver.get(self.base_url)
        # page_source returns the HTML of the loaded page
        html = self.driver.page_source
        # Parse into an element tree that supports XPath
        html_xml = etree.HTML(html)
        # Brand links
        str_pai_list = html_xml.xpath("//div[@class='dd-all clearfix js-brand js-option-hid-info']//a/@href")
        pai_url_list = []
        for eve in str_pai_list:
            # Keep only the part after the /bj city prefix; skip links without it
            match = re.search(r'/bj(.+)', eve)
            if match:
                pai_url_list.append(match.group(1))
        return pai_url_list

    # Get the city URLs, without the trailing /buy (223 cities)
    def get_city_url(self):
        self.driver.get(self.base_url)
        # page_source returns the HTML of the loaded page
        html = self.driver.page_source
        # Parse into an element tree that supports XPath
        html_xml = etree.HTML(html)
        # City links
        str_city_list = html_xml.xpath("//div[@class='city-box all-city']//div/dl/dd/a/@href")
        city_url_list = []
        for city_url in str_city_list:
            # Build the absolute city URL, then drop the trailing /buy
            city_url = "https://www.guazi.com" + city_url
            match = re.search(r'(.+)/buy', city_url)
            # Skip links without /buy and cities already collected
            if match and match.group(1) not in city_url_list:
                city_url_list.append(match.group(1))
        return city_url_list

    # Extract the car data
    def get_zuihou(self, html_xml):
        car_list = html_xml.xpath("//ul[@class='carlist clearfix js-top']//li")
        if not car_list:
            # No listings found on this page
            print("000")
            return
        for car in car_list:
            car_img_list = car.xpath(".//img/@src")
            car_img = car_img_list[0] if car_img_list else ''
            car_name = car.xpath(".//a/@title")[0]
            # First text node is the registration date, second is the mileage
            car_info = car.xpath(".//div[@class='t-i']/text()")
            car_time = car_info[0]
            car_km_num = car_info[1]
            # Price is listed in units of 万 (10,000 yuan)
            car_price = car.xpath(".//div[@class='t-price']/p/text()")[0] + '万'
            car_detail_url = car.xpath(".//a/@href")[0]
            car_detail_url = 'https://www.guazi.com' + car_detail_url
            car_dict = {
                "car_img": car_img,
                "car_name": car_name,
                "car_time": car_time,
                "car_km_num": car_km_num,
                "car_price": car_price,
                "car_detail_url": car_detail_url,
            }
            # Store each extracted record in the database
            self.r.lpush("guazi_url", str(car_dict))
            print(car_dict)

    # Push a URL onto the Redis list
    def lpush(self, guzi_url):
        self.r.lpush("guazi_url_list", guzi_url)
        print(guzi_url)

    # Create the Redis connection
    def get_redis(self):
        return redis.Redis(host='127.0.0.1', port=6379)

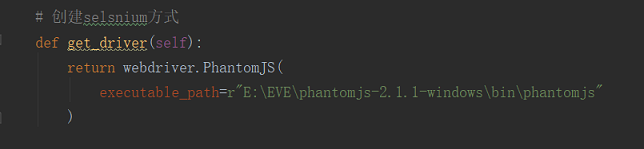

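    # Create the Selenium driver
    # Note: webdriver.PhantomJS was removed in Selenium 4, so this needs an older Selenium release
    def get_driver(self):
        return webdriver.PhantomJS(
            executable_path=r"E:\EVE\phantomjs-2.1.1-windows\bin\phantomjs"
        )

    def __del__(self):
        """
        Purpose: runs once the code has finished and the object is destroyed.
        :return:
        """
        self.driver.close()
        self.driver.quit()


if __name__ == '__main__':
    guazi = Guazi()
    guazi()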

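Project crawl workflow

1: First, crawl the list of all cities from the Guazi used-car site.

2: Next, crawl every brand for every city.

The set of brands is fixed, so it only needs to be crawled once; each brand suffix is then combined with each city URL to form a new URL.

3: On Guazi, no brand in any city exceeds 50 pages, so there is no need to drill down to individual car series (Guazi shows at most 50 pages of listings, 40 cars per page).

Read the maximum page number, work out the pagination pattern, build the URL of each page in a loop, and store them all in the database.

4: Then take each URL back out of the database, crawl the car information, and store it in a new database.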
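The URL composition in steps 2 and 3 can be sketched without a browser. This is a minimal illustration, not the crawler itself: `build_page_urls` and the `max_pages` mapping are hypothetical names, and the brand suffixes are made-up samples. It assumes, as in the code above, that page N of a listing is addressed by appending `o` plus N to the part of the URL before `#bread`.

```python
from itertools import product


def build_page_urls(city_urls, brand_suffixes, max_pages):
    """Return one URL per (city, brand, page) combination.

    max_pages maps a city+brand URL to its highest page number; a missing
    entry is treated as a single, unpaginated listing.
    """
    urls = []
    for city, brand in product(city_urls, brand_suffixes):
        listing = city + brand
        # Drop the "#bread" fragment before appending the page suffix.
        base = listing.split("#bread", 1)[0]
        pages = max_pages.get(listing, 0)
        if pages:
            urls.extend(f"{base}o{page}" for page in range(1, pages + 1))
        else:
            urls.append(listing)
    return urls


# Illustrative inputs only; the real lists come from the site.
cities = ["https://www.guazi.com/bj", "https://www.guazi.com/sh"]
brands = ["/audi/#bread", "/bmw/#bread"]
max_pages = {"https://www.guazi.com/bj/audi/#bread": 3}

for url in build_page_urls(cities, brands, max_pages):
    print(url)
```

Keeping this step as a pure function makes it possible to check the pagination pattern on a handful of inputs before running the slow Selenium pass over every city and brand.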

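Database

Redis is used because the crawler only needs to store and retrieve data, with no complex operations. Redis is also a non-relational, in-memory database.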
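As a sketch of the store-and-retrieve pattern, the snippet below pushes a record onto a list and reads it back. Two things here are assumptions rather than the original code: records are serialized with `json.dumps` instead of `str(car_dict)` so they can be parsed again later, and `InMemoryLists` is a hypothetical stand-in that mimics only `lpush` and `lrange` so the example runs without a Redis server. The sample record is made up.

```python
import json


class InMemoryLists:
    """Hypothetical stand-in exposing the two Redis list calls used here."""

    def __init__(self):
        self._lists = {}

    def lpush(self, key, value):
        # Like LPUSH: insert at the head of the list; values are kept as bytes.
        data = value if isinstance(value, bytes) else str(value).encode("utf-8")
        self._lists.setdefault(key, []).insert(0, data)
        return len(self._lists[key])

    def lrange(self, key, start, stop):
        # Handles non-negative indexes and stop=-1 (end of list) only.
        items = self._lists.get(key, [])
        end = None if stop == -1 else stop + 1
        return items[start:end]


def save_car(client, car):
    # ensure_ascii=False keeps characters such as 万 readable in storage.
    client.lpush("guazi_url", json.dumps(car, ensure_ascii=False))


def load_cars(client):
    return [json.loads(raw.decode("utf-8")) for raw in client.lrange("guazi_url", 0, -1)]


client = InMemoryLists()  # with a server: redis.Redis(host="127.0.0.1", port=6379)
save_car(client, {"car_name": "sample car", "car_price": "15.8万"})  # made-up record
cars = load_cars(client)
print(cars[0]["car_price"])
```

Because the helpers only rely on `lpush` and `lrange`, the same functions should work unchanged with a real `redis.Redis` client, which returns stored values as bytes by default.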

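Crawling difficulties

1: Fetching the URL with requests did not return the page content, so Selenium is used instead.

2: There are so many URLs that every step has to be tested repeatedly, and mistakes are easy to make.

3: Extracting the information relies on XPath filtering, and the XPath results in Chrome differ somewhat from the results obtained in PyCharm, which makes errors easy.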
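The post does not say why the browser and the script disagree. One common cause, offered here only as a possibility, is that a browser normalizes the DOM (for example by inserting `<tbody>` inside a table), so a path copied from the browser may not exist in the raw HTML that the parser sees. The sketch below uses the standard library's `xml.etree.ElementTree` as a dependency-free stand-in for lxml, and the markup is made up.

```python
import xml.etree.ElementTree as ET

# Raw markup as the server might send it: no <tbody> element.
raw_html = "<table><tr><td>12.5</td></tr></table>"
root = ET.fromstring(raw_html)

# Path as a browser might report it, including the <tbody> it inserted.
browser_style = root.findall("./tbody/tr/td")
# Path that does not depend on the intermediate element.
tolerant = root.findall(".//td")

print(len(browser_style), len(tolerant))
```

Paths anchored on a class attribute or a descendant search, as the crawler's own XPath expressions are, tend to be less sensitive to this kind of difference than full paths copied from the browser.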

Source: CSDN

Author: big-mingming

Link: https://blog.csdn.net/weixin_43952160/article/details/88095566