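The previous article introduced how Hive works and how it is implemented. Starting with this post, I will share the complete process of setting up the Hive data warehouse tool.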

I. Installing Hive

(1) Download Hive and prepare the environment:

Hive official website: http://hive.apache.org/index.html

Hive downloads: http://www.apache.org/dyn/closer.cgi/hive/

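Note: before installing Hive, make sure your Hadoop cluster is up and running. Hive acts as a client of the cluster, so it only needs to be installed on one node (here, the NameNode host); it does not need to be installed on the DataNodes.

This post installs apache-hive-2.3.4-bin.tar.gz, which can be downloaded from: http://mirrors.shu.edu.cn/apache/hive/hive-2.3.4/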

(2) Install

# Upload the tarball over SFTP (in SecureCRT, press Alt+P to open an SFTP session)

cd ~

put apache-hive-2.3.4-bin.tar.gz

# Extract the Hive tarball into ~/apps (HIVE_HOME below is /home/hadoop/apps/apache-hive-2.3.4-bin)

tar zxvf apache-hive-2.3.4-bin.tar.gz -C ~/apps/

(3) Configure Hive

# a. Configure the environment variables by editing /etc/profile

#set hive env

export HIVE_HOME=/home/hadoop/apps/apache-hive-2.3.4-bin

export PATH=${HIVE_HOME}/bin:$PATH

# Make the environment variables take effect

source /etc/profile
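Editing /etc/profile by hand a second time (for example when repeating the install) easily leaves duplicate export lines. Below is a minimal sketch of an idempotent append. It assumes a POSIX shell and write access to the target file (root for /etc/profile). `append_once` is a hypothetical helper, not part of Hive or Hadoop:

```shell
#!/bin/sh
# append_once FILE LINE: append LINE to FILE only if an identical line is
# not already present (hypothetical helper, not part of Hive or Hadoop).
append_once() {
  file=$1
  line=$2
  # -q: quiet, -x: whole-line match, -F: fixed string (no regex)
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage for the two lines above (single quotes keep ${HIVE_HOME} and $PATH
# unexpanded, so they are written to the file literally):
# append_once /etc/profile 'export HIVE_HOME=/home/hadoop/apps/apache-hive-2.3.4-bin'
# append_once /etc/profile 'export PATH=${HIVE_HOME}/bin:$PATH'
```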

# Create the hive-site.xml configuration file

# Before configuring Hive, switch to the account that will operate Hive (mine is hadoop)

su - hadoop

cd /home/hadoop/apps/apache-hive-2.3.4-bin/conf

# Create hive-site.xml using hive-default.xml.template as the template

cp hive-default.xml.template hive-site.xml
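Re-running the copy above would silently overwrite a hive-site.xml you have already edited, so it can be worth guarding it. Below is a small sketch. `init_hive_site` is a hypothetical helper name, and the file names are the ones used in this post:

```shell
#!/bin/sh
# init_hive_site CONF_DIR: create hive-site.xml from the template in CONF_DIR,
# but never overwrite an existing (possibly already edited) hive-site.xml.
init_hive_site() {
  conf=$1
  if [ -e "$conf/hive-site.xml" ]; then
    echo "hive-site.xml already exists, leaving it untouched" >&2
    return 0
  fi
  cp "$conf/hive-default.xml.template" "$conf/hive-site.xml"
}

# Usage:
# init_hive_site /home/hadoop/apps/apache-hive-2.3.4-bin/conf
```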

(4) Create the directories Hive needs in HDFS

hive-site.xml contains the following settings:

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/tmp/hive</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/<username> is created, with ${hive.scratch.dir.permission}.</description>

</property>

The corresponding directories therefore have to be created in HDFS first:

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -mkdir -p /user/hive/warehouse

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -chmod -R 777 /user/hive/warehouse

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -mkdir -p /tmp/hive

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -chmod -R 777 /tmp/hive

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -ls /

Found 16 items

drwxr-xr-x - hadoop supergroup 0 2018-12-30 07:45 /combinefile

drwxr-xr-x - hadoop supergroup 0 2018-12-24 00:51 /crw

drwxr-xr-x - hadoop supergroup 0 2018-12-24 00:51 /en

drwxr-xr-x - hadoop supergroup 0 2018-12-19 07:11 /index

drwxr-xr-x - hadoop supergroup 0 2018-12-09 06:57 /localwccombineroutput

drwxr-xr-x - hadoop supergroup 0 2018-12-24 00:51 /loge

drwxr-xr-x - hadoop supergroup 0 2018-12-23 08:12 /ordergp

drwxr-xr-x - hadoop supergroup 0 2018-12-19 05:48 /rjoin

drwxr-xr-x - hadoop supergroup 0 2018-12-23 05:12 /shared

drwx------ - hadoop supergroup 0 2019-01-20 23:34 /tmp

drwxr-xr-x - hadoop supergroup 0 2019-01-20 23:33 /user

drwxr-xr-x - hadoop supergroup 0 2018-12-05 08:32 /wccombineroutput

drwxr-xr-x - hadoop supergroup 0 2018-12-05 08:39 /wccombineroutputs

drwxr-xr-x - hadoop supergroup 0 2019-01-19 22:38 /webloginput

drwxr-xr-x - hadoop supergroup 0 2019-01-20 02:13 /weblogout

drwxr-xr-x - hadoop supergroup 0 2018-12-23 07:46 /weblogwash

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -ls /tmp/

Found 2 items

drwx------ - hadoop supergroup 0 2018-12-05 08:30 /tmp/hadoop-yarn

drwxrwxrwx - hadoop supergroup 0 2019-01-20 23:34 /tmp/hive

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -ls /user/hive

Found 1 items

drwxrwxrwx - hadoop supergroup 0 2019-01-20 23:33 /user/hive/warehouse
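The HDFS paths above come from hive-site.xml, so they can also be read from the file instead of being typed by hand. Below is a rough sketch. It assumes each `<name>` and `<value>` sits on its own line, as in hive-default.xml.template; it is a line matcher, not a real XML parser. `hive_prop` is a hypothetical helper name:

```shell
#!/bin/sh
# hive_prop NAME FILE: print the <value> of property NAME from a Hadoop-style
# XML config such as hive-site.xml (line-based sketch, not an XML parser).
hive_prop() {
  awk -v want="<name>$1</name>" '
    index($0, want) { found = 1; next }   # the <name> line of the property
    found && /<value>/ {                  # first <value> line after it
      v = $0
      sub(/.*<value>/, "", v)
      sub(/<\/value>.*/, "", v)
      print v
      exit
    }' "$2"
}

# Usage: create both directories listed in hive-site.xml
# conf=$HIVE_HOME/conf/hive-site.xml
# for p in hive.metastore.warehouse.dir hive.exec.scratchdir; do
#   dir=$(hive_prop "$p" "$conf")
#   hdfs dfs -mkdir -p "$dir" && hdfs dfs -chmod -R 777 "$dir"
# done
```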

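(5) Configure hive-site.xml

a. Configure Hive's local temporary directory

# Replace ${system:java.io.tmpdir} in hive-site.xml with a local temporary directory for Hive. I use /home/hadoop/apps/apache-hive-2.3.4-bin/tmp; if the directory does not exist, create it first and make it readable and writable: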

[hadoop@centos-aaron-h1 apache-hive-2.3.4-bin]$ cd /home/hadoop/apps/apache-hive-2.3.4-bin

[hadoop@centos-aaron-h1 apache-hive-2.3.4-bin]$ mkdir tmp/

[hadoop@centos-aaron-h1 apache-hive-2.3.4-bin]$ chmod -R 777 tmp/

[hadoop@centos-aaron-h1 apache-hive-2.3.4-bin]$ cd conf

# Open hive-site.xml in vim and run the following in command-line mode to do the replacement

:%s#${system:java.io.tmpdir}#/home/hadoop/apps/apache-hive-2.3.4-bin/tmp#g

# Example:

# Before

<property>

<name>hive.exec.local.scratchdir</name>

<value>${system:java.io.tmpdir}/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

# After

<property>

<name>hive.exec.local.scratchdir</name>

<value>/home/hadoop/apps/apache-hive-2.3.4-bin/tmp/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>
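The vim substitutions in this step and the next one (for `${system:user.name}`) can also be scripted. Below is a minimal sketch, assuming a POSIX shell with awk. `replace_literal` is a hypothetical helper name. It uses awk's `index()` for plain substring matching, so `$`, `{` and `.` in the placeholder are not treated as regex syntax:

```shell
#!/bin/sh
# replace_literal FILE PLACEHOLDER VALUE: replace every literal occurrence of
# PLACEHOLDER in FILE with VALUE (hypothetical helper). PLACEHOLDER and VALUE
# must not contain backslashes, because awk -v interprets escape sequences.
replace_literal() {
  file=$1
  placeholder=$2
  value=$3
  [ -n "$placeholder" ] || return 1
  awk -v p="$placeholder" -v v="$value" '
    {
      out = ""
      rest = $0
      # index() is a plain substring search: no regex metacharacters
      while ((i = index(rest, p)) > 0) {
        out = out substr(rest, 1, i - 1) v
        rest = substr(rest, i + length(p))
      }
      print out rest
    }' "$file" > "$file.tmp" && cat "$file.tmp" > "$file" && rm -f "$file.tmp"
}

# Usage, from $HIVE_HOME/conf (single quotes stop the shell expanding ${...}):
# replace_literal hive-site.xml '${system:java.io.tmpdir}' /home/hadoop/apps/apache-hive-2.3.4-bin/tmp
# replace_literal hive-site.xml '${system:user.name}' hadoop
```

Writing back with `cat ... > "$file"` rather than `mv` keeps the original file's permissions.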

# Configure the Hive user name

# Replace ${system:user.name} in hive-site.xml with the name of the account that operates Hive (mine is hadoop). In vim command-line mode, run:

:%s#${system:user.name}#hadoop#g

# Example:

# Before

<property>

<name>hive.exec.local.scratchdir</name>

<value>/home/hadoop/apps/apache-hive-2.3.4-bin/tmp/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

# After

<property>

<name>hive.exec.local.scratchdir</name>

<value>/home/hadoop/apps/apache-hive-2.3.4-bin/tmp/hadoop</value>

<description>Local scratch space for Hive jobs</description>

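</property>

b. Modify the Hive metastore database settings

| Property | Description |
|---|---|
| javax.jdo.option.ConnectionDriverName | Class name of the JDBC driver |
| javax.jdo.option.ConnectionURL | JDBC connection URL of the database |
| javax.jdo.option.ConnectionUserName | User name for connecting to the database |
| javax.jdo.option.ConnectionPassword | Password for connecting to the database |

By default, Hive stores its metadata in a Derby database, configured as follows: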

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>org.apache.derby.jdbc.EmbeddedDriver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:derby:;databaseName=metastore_db;create=true</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>APP</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>mine</value>

<description>password to use against metastore database</description>

</property>

To switch from Derby to MySQL, only these four properties need to be changed, for example:

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

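</property>

In javax.jdo.option.ConnectionURL, useSSL=false disables MySQL's SSL connection warning; without it, initializing the Hive metadata in MySQL may also fail. Inside the XML file, the & between URL parameters must be written as &amp;.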
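Hand-editing `<value>` elements is where a bare `&` in a JDBC URL slips in, and an XML parser rejects a bare `&` that does not start an entity. Below is a sketch that prints escaped `<property>` blocks, assuming a POSIX shell with sed. `xml_escape` and `hive_property` are hypothetical helper names; paste their output inside the `<configuration>` element:

```shell
#!/bin/sh
# xml_escape TEXT: escape &, < and > so TEXT can go inside an XML element.
# & must be escaped first, otherwise the &lt; and &gt; would be escaped twice.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# hive_property NAME VALUE: print one <property> block for hive-site.xml.
hive_property() {
  printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' \
    "$(xml_escape "$1")" "$(xml_escape "$2")"
}

# Usage with the MySQL URL from this post:
# hive_property javax.jdo.option.ConnectionURL \
#   'jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&useSSL=false'
```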

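In addition, copy the MySQL JDBC driver jar into Hive's lib directory:

# The driver class configured above is com.mysql.jdbc.Driver, the class name used by Connector/J 5.x

cp ~/mysql-connector-java-5.1.28.jar $HIVE_HOME/lib/

c. Configure hive-env.sh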

[hadoop@centos-aaron-h1 conf]$ cd ~/apps/apache-hive-2.3.4-bin/conf

[hadoop@centos-aaron-h1 conf]$ cp hive-env.sh.template hive-env.sh

[hadoop@centos-aaron-h1 conf]$ vi hive-env.sh

# Add the following lines

export HADOOP_HOME=/home/hadoop/apps/hadoop-2.9.1

export HIVE_CONF_DIR=/home/hadoop/apps/apache-hive-2.3.4-bin/conf

export HIVE_AUX_JARS_PATH=/home/hadoop/apps/apache-hive-2.3.4-bin/lib

(6) Initialize, start and test Hive

[hadoop@centos-aaron-h1 apache-hive-2.3.4-bin]$ cd bin

[hadoop@centos-aaron-h1 bin]$ schematool -initSchema -dbType mysql

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/apps/apache-hive-2.3.4-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hadoop-2.9.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://192.168.29.131:3306/hive?createDatabaseIfNotExist=true&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 2.3.0

Initialization script hive-schema-2.3.0.mysql.sql

Initialization script completed

schemaTool completed

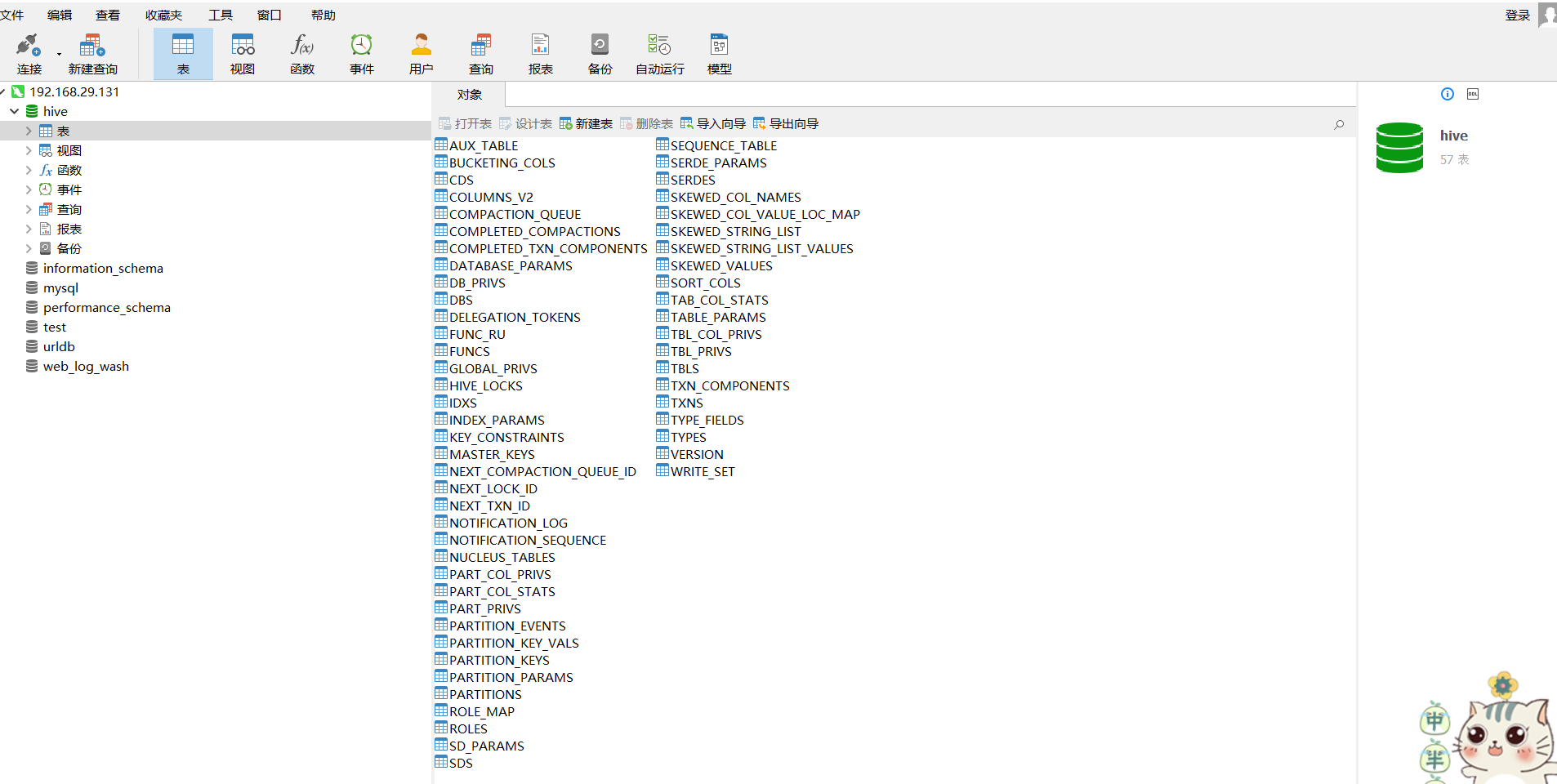

[hadoop@centos-aaron-h1 bin]$ 数据库初始化完成之后,会在MySQL数据库里生成如下metadata表用于存储Hive的元数据信息:

(7) Start Hive

[hadoop@centos-aaron-h1 bin]$ ./hive

which: no hbase in (/home/hadoop/apps/apache-hive-2.3.4-bin/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/usr/local/jdk1.7.0_45/bin:/home/hadoop/apps/hadoop-2.9.1/bin:/home/hadoop/apps/hadoop-2.9.1/sbin:/home/hadoop/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/apps/apache-hive-2.3.4-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hadoop-2.9.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/home/hadoop/apps/apache-hive-2.3.4-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.

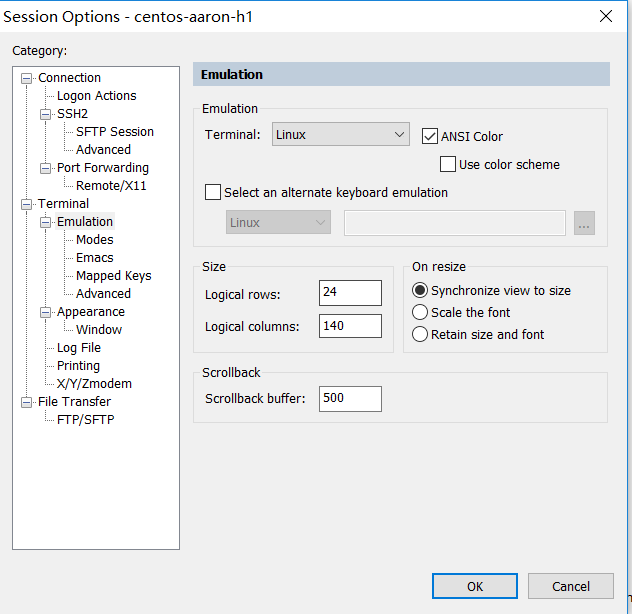

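hive>

After typing commands, I found that Backspace did not work in the Hive CLI. According to what I found online, the following change in SecureCRT fixes it:

Terminal > Emulation

Set Terminal (T) to Linux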

List the Hive databases:

hive> show databases;

OK

default

Time taken: 3.88 seconds, Fetched: 1 row(s)

hive>

Create a database and a table in Hive:

hive> create database wcc_log;

OK

Time taken: 0.234 seconds

hive> use wcc_log;

OK

Time taken: 0.019 seconds

hive> create table test_log(id int,name string);

OK

Time taken: 0.715 seconds

hive> show tables;

OK

test_log

Time taken: 0.026 seconds, Fetched: 1 row(s)

hive> select * from test_log;

OK

Time taken: 1.814 seconds

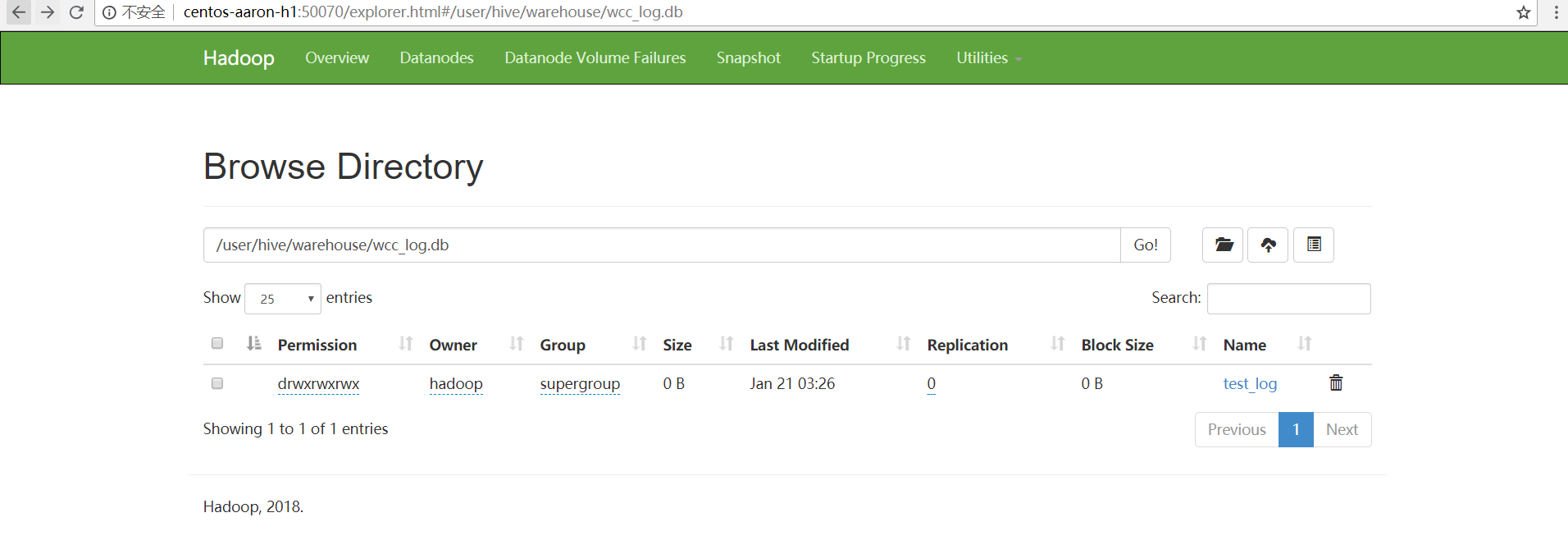

hive>

We can see the database and table just created in HDFS:

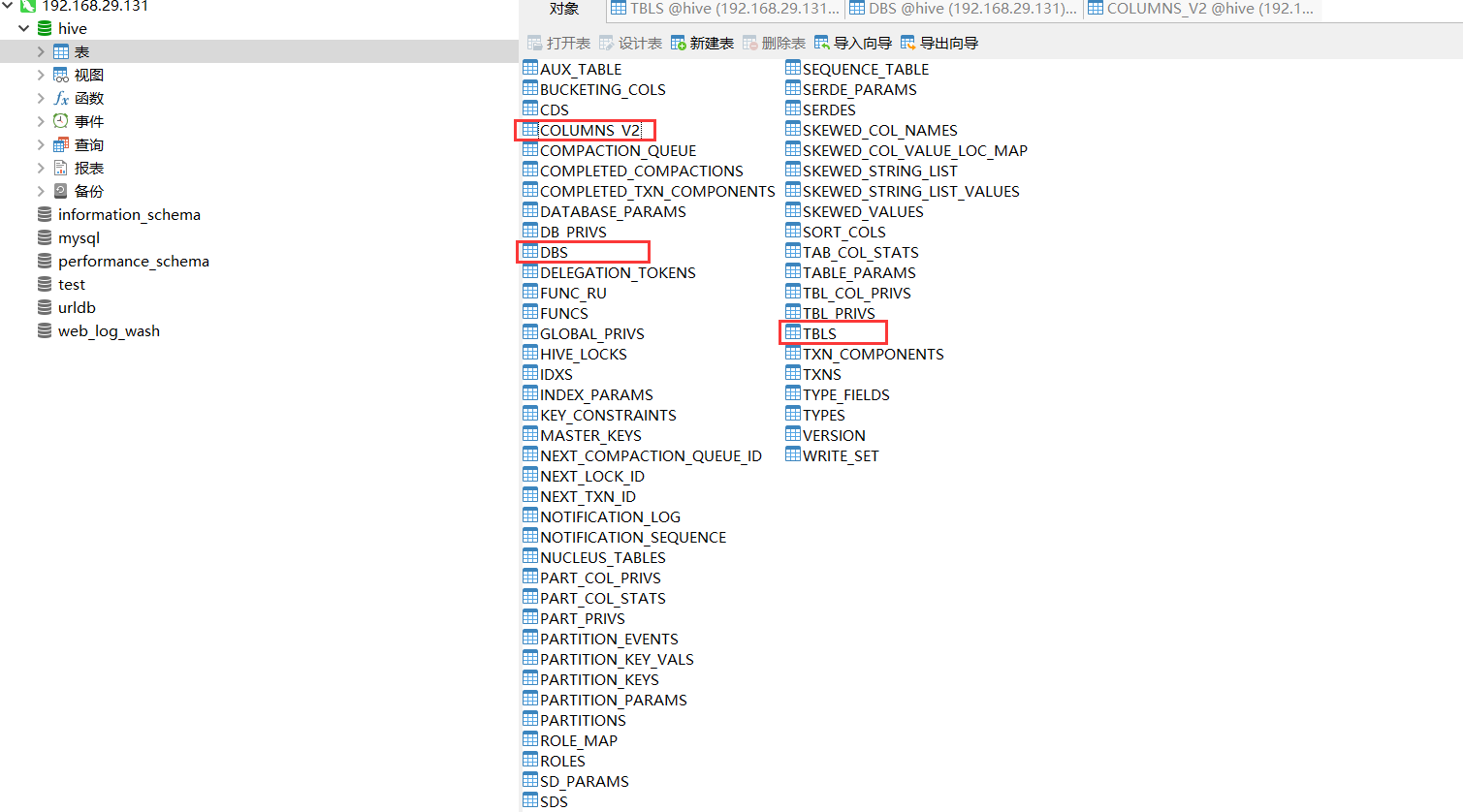
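The HDFS directory of a managed table follows from the warehouse directory plus the database and table names. Below is a sketch of that mapping under the default layout, with no explicit LOCATION on the database or table. `hive_table_path` is a hypothetical helper name:

```shell
#!/bin/sh
# hive_table_path WAREHOUSE DB TABLE: print the default HDFS location of a
# managed table. Tables of the "default" database sit directly under the
# warehouse dir; other databases get a "<db>.db" subdirectory. Names are
# lower-cased because Hive stores database and table names in lower case.
hive_table_path() {
  warehouse=${1%/}   # drop one trailing slash, if any
  db=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
  table=$(printf '%s' "$3" | tr '[:upper:]' '[:lower:]')
  if [ "$db" = default ]; then
    printf '%s/%s\n' "$warehouse" "$table"
  else
    printf '%s/%s.db/%s\n' "$warehouse" "$db" "$table"
  fi
}

# Usage:
# hdfs dfs -ls "$(hive_table_path /user/hive/warehouse wcc_log test_log)"
```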

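View Hive's metadata in MySQL (three metadata tables store, respectively, the databases, tables and columns of the Hive warehouse).

Truncate and then drop the table in Hive: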

hive> use wcc_log;

OK

Time taken: 0.179 seconds

hive> truncate table test_log;

OK

Time taken: 0.399 seconds

hive> drop table test_log;

OK

Time taken: 1.195 seconds

hive> show tables;

OK

Time taken: 0.045 seconds

hive>

Create a proper table in Hive, this time with a row format and field delimiter:

hive> create table t_web_log01(id int,name string)

> row format delimited

> fields terminated by ',';

OK

Time taken: 0.602 seconds

Create a file bbb_hive.txt on Linux and upload it to the HDFS directory /user/hive/warehouse/wcc_log.db/t_web_log01:

[hadoop@centos-aaron-h1 ~]$ cat bbb_hive.txt

1,张三

2,李四

3,王二

4,麻子

5,隔壁老王
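Because the table was declared with `fields terminated by ','` and an `int` id, it can be worth checking the file's layout before uploading it. Hive itself does not reject such rows when reading: with this table definition, an id that is not an integer is simply read as NULL. Below is a minimal sketch with awk. `check_delimited` is a hypothetical helper name:

```shell
#!/bin/sh
# check_delimited FILE: succeed only if every line of FILE has exactly two
# comma-separated fields and the first one is an integer, matching
# t_web_log01(id int, name string) ... fields terminated by ','.
check_delimited() {
  awk -F',' '
    NF != 2 || $1 !~ /^-?[0-9]+$/ {
      printf "line %d is malformed: %s\n", NR, $0 > "/dev/stderr"
      bad = 1
    }
    END { exit bad }' "$1"
}

# Usage before the upload:
# check_delimited bbb_hive.txt && hdfs dfs -put bbb_hive.txt /user/hive/warehouse/wcc_log.db/t_web_log01
```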

[hadoop@centos-aaron-h1 ~]$ hdfs dfs -put bbb_hive.txt /user/hive/warehouse/wcc_log.db/t_web_log01

Query the table's data in Hive:

[hadoop@centos-aaron-h1 bin]$ ./hive

which: no hbase in (/home/hadoop/apps/apache-hive-2.3.4-bin/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/usr/local/jdk1.7.0_45/bin:/home/hadoop/apps/hadoop-2.9.1/bin:/home/hadoop/apps/hadoop-2.9.1/sbin:/home/hadoop/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/apps/apache-hive-2.3.4-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hadoop-2.9.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/home/hadoop/apps/apache-hive-2.3.4-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.

hive> show databases;

OK

default

wcc_log

Time taken: 3.859 seconds, Fetched: 2 row(s)

hive> use wcc_log

> ;

OK

Time taken: 0.024 seconds

hive> show tables;

OK

t_web_log01

Time taken: 0.027 seconds, Fetched: 1 row(s)

hive> select * from t_web_log01;

OK

1 张三

2 李四

3 王二

4 麻子

5 隔壁老王

Time taken: 1.353 seconds, Fetched: 5 row(s)

hive>

[Unresolved for now] Error log from an aggregate query (select count(*) from t_web_log01):

hive> select count(id) from t_web_log01;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.

Query ID = hadoop_20190121041134_962cf495-4474-4c91-98ea-8a96bc548b20

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

org.apache.hadoop.yarn.exceptions.YarnRuntimeException: java.lang.reflect.InvocationTargetException

at org.apache.hadoop.yarn.factories.impl.pb.RpcClientFactoryPBImpl.getClient(RpcClientFactoryPBImpl.java:81)

at org.apache.hadoop.yarn.ipc.HadoopYarnProtoRPC.getProxy(HadoopYarnProtoRPC.java:48)

at org.apache.hadoop.mapred.ClientCache$1.run(ClientCache.java:95)

at org.apache.hadoop.mapred.ClientCache$1.run(ClientCache.java:92)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:356)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1869)

at org.apache.hadoop.mapred.ClientCache.instantiateHistoryProxy(ClientCache.java:92)

at org.apache.hadoop.mapred.ClientCache.getInitializedHSProxy(ClientCache.java:77)

at org.apache.hadoop.mapred.YARNRunner.addHistoryToken(YARNRunner.java:219)

at org.apache.hadoop.mapred.YARNRunner.submitJob(YARNRunner.java:316)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:253)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1570)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1567)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1889)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1567)

at org.apache.hadoop.mapred.JobClient$1.run(JobClient.java:576)

at org.apache.hadoop.mapred.JobClient$1.run(JobClient.java:571)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1889)

at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:571)

at org.apache.hadoop.mapred.JobClient.submitJob(JobClient.java:562)

at org.apache.hadoop.hive.ql.exec.mr.ExecDriver.execute(ExecDriver.java:411)

at org.apache.hadoop.hive.ql.exec.mr.MapRedTask.execute(MapRedTask.java:151)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:100)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2183)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1839)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1526)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1237)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1227)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:233)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:184)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:403)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:821)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:759)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:239)

at org.apache.hadoop.util.RunJar.main(RunJar.java:153)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at org.apache.hadoop.yarn.factories.impl.pb.RpcClientFactoryPBImpl.getClient(RpcClientFactoryPBImpl.java:78)

... 45 more

Caused by: java.lang.OutOfMemoryError: PermGen space

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at java.lang.Class.getDeclaredMethods0(Native Method)

at java.lang.Class.privateGetDeclaredMethods(Class.java:2531)

at java.lang.Class.privateGetPublicMethods(Class.java:2651)

at java.lang.Class.privateGetPublicMethods(Class.java:2661)

at java.lang.Class.privateGetPublicMethods(Class.java:2661)

at java.lang.Class.privateGetPublicMethods(Class.java:2661)

at java.lang.Class.getMethods(Class.java:1467)

at sun.misc.ProxyGenerator.generateClassFile(ProxyGenerator.java:426)

at sun.misc.ProxyGenerator.generateProxyClass(ProxyGenerator.java:323)

at java.lang.reflect.Proxy.getProxyClass0(Proxy.java:636)

at java.lang.reflect.Proxy.newProxyInstance(Proxy.java:722)

at org.apache.hadoop.ipc.ProtobufRpcEngine.getProxy(ProtobufRpcEngine.java:101)

at org.apache.hadoop.ipc.RPC.getProtocolProxy(RPC.java:583)

at org.apache.hadoop.ipc.RPC.getProtocolProxy(RPC.java:549)

at org.apache.hadoop.ipc.RPC.getProtocolProxy(RPC.java:496)

at org.apache.hadoop.ipc.RPC.getProtocolProxy(RPC.java:461)

at org.apache.hadoop.ipc.RPC.getProtocolProxy(RPC.java:647)

at org.apache.hadoop.ipc.RPC.getProxy(RPC.java:604)

at org.apache.hadoop.mapreduce.v2.api.impl.pb.client.HSClientProtocolPBClientImpl.<init>(HSClientProtocolPBClientImpl.java:38)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

Job Submission failed with exception 'org.apache.hadoop.yarn.exceptions.YarnRuntimeException(java.lang.reflect.InvocationTargetException)'

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask. java.lang.reflect.InvocationTargetException

Exception in thread "main" java.lang.OutOfMemoryError: PermGen space

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at org.apache.hadoop.hive.common.FileUtils.deleteDirectory(FileUtils.java:778)

at org.apache.hadoop.hive.ql.session.SessionState.close(SessionState.java:1560)

at org.apache.hadoop.hive.cli.CliSessionState.close(CliSessionState.java:66)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:762)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:239)

at org.apache.hadoop.util.RunJar.main(RunJar.java:153)

Exception in thread "Thread-1" java.lang.OutOfMemoryError: PermGen space

hive> set mapred.reduce.tasks = 1;

hive> select count(1) from t_web_log01;

OK

0

Time taken: 1.962 seconds, Fetched: 1 row(s)

hive> select count(id) from t_web_log01;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.

Query ID = hadoop_20190121041942_3f55a0ac-c478-43f5-abf3-0a7aace5c334

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

FAILED: Execution Error, return code -101 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask. PermGen space

Exception in thread "main" java.lang.OutOfMemoryError: PermGen space

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at org.apache.hadoop.hive.common.FileUtils.deleteDirectory(FileUtils.java:778)

at org.apache.hadoop.hive.ql.session.SessionState.close(SessionState.java:1560)

at org.apache.hadoop.hive.cli.CliSessionState.close(CliSessionState.java:66)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:762)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:239)

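at org.apache.hadoop.util.RunJar.main(RunJar.java:153)

Cause (my guess at the time): Hive 2.3.4 already recommends running jobs on other engines (Apache Tez, Apache Spark). Strictly speaking, though, the Hive-on-MR message is only a deprecation warning. The stack traces show the Hive CLI JVM itself running out of PermGen space (java.lang.OutOfMemoryError: PermGen space) while submitting the MapReduce job, on JDK 1.7.0_45. Also, count(1) returned 0 without launching a job. This suggests Hive answered it from table statistics, which were not updated when the file was copied in with hdfs dfs -put. In my next article I will set everything up again with Hive 1.2.2!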
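The stack traces above end in `java.lang.OutOfMemoryError: PermGen space` on JDK 1.7.0_45. A commonly used workaround on Java 7 is to raise the PermGen limit of the JVM started by the hive script, for example from hive-env.sh. The sketch below is not verified on this cluster. It assumes the hive launcher's JVM picks up `HADOOP_OPTS` through the hadoop script. `with_jvm_opt` is a hypothetical helper, and 512m is an arbitrary example value:

```shell
#!/bin/sh
# with_jvm_opt OPTS OPT: print OPTS with OPT appended, unless an option with
# the same name (the part before "=") is already present.
# Hypothetical helper for hive-env.sh; not part of Hive or Hadoop.
with_jvm_opt() {
  opts=$1
  opt=$2
  key=${opt%%=*}   # e.g. "-XX:MaxPermSize" for "-XX:MaxPermSize=512m"
  case " $opts " in
    *" $key="*) printf '%s\n' "$opts" ;;
    *) printf '%s\n' "${opts:+$opts }$opt" ;;
  esac
}

# Raise the PermGen cap (Java 7 only; Java 8 removed PermGen and ignores this
# option with a warning). 512m is an example value, not a tuned recommendation.
export HADOOP_OPTS="$(with_jvm_opt "$HADOOP_OPTS" -XX:MaxPermSize=512m)"
```

Because an existing `-XX:MaxPermSize=` setting is left alone, sourcing hive-env.sh repeatedly does not stack up duplicate options.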

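Final words: that is all for this article. If you found it useful, please give it a like. If you are interested in my other articles on servers and big data, or in me, please follow my blog, and feel free to get in touch at any time.

Source: oschina

Link: https://my.oschina.net/u/2371923/blog/3003831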