What is the purpose/advantage of using yield return iterators in C#?

All of the examples I've seen of using `yield return x;` inside a C# method could be done in the same way by just returning the whole list. In those cases, is there any benefit or advantage to using the `yield return` syntax instead of returning the list?

-

Lazy Evaluation/Deferred Execution

The "yield return" iterator blocks won't execute any of the code until you actually call for that specific result. This means they can also be chained together efficiently. Pop quiz: how many times will the following code iterate over the file?

```csharp
var query = File.ReadLines(@"C:\MyFile.txt")
                .Where(l => l.Contains("search text"))
                .Select(l => int.Parse(l.Substring(5, 8)))
                .Where(i => i > 10);

int sum = 0;
foreach (int value in query)
{
    sum += value;
}
```

The answer is exactly one, and not until way down in the `foreach` loop. Even though I have three separate LINQ operators, we still only loop through the contents of the file one time.

This has benefits other than performance. For example, I can write a fairly simple and generic method to read and pre-filter a log file once, and use that same method in several different places, where each use adds its own filters. Thus, I maintain good performance while also efficiently reusing code.
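To see the single pass for yourself, here is a small sketch. It swaps `File.ReadLines` for a hypothetical `ReadLines` iterator over an in-memory array (an assumption, so it runs without a file), counts how many times that source is actually enumerated, and logs when each line is read and parsed.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var log = new List<string>();
int passes = 0;

// Hypothetical stand-in for File.ReadLines: an iterator over in-memory lines.
IEnumerable<string> ReadLines(IEnumerable<string> source)
{
    passes++; // runs on the first MoveNext, so it counts real passes over the data
    foreach (var line in source)
    {
        log.Add("read " + line);
        yield return line;
    }
}

string[] lines =
{
    "abcde00000042 search text",
    "abcde00000007 search text",
    "abcde00000099 nothing",
    "abcde00000015 search text",
};

var query = ReadLines(lines)
    .Where(l => l.Contains("search text"))
    .Select(l =>
    {
        int n = int.Parse(l.Substring(5, 8));
        log.Add("parse " + n);
        return n;
    })
    .Where(i => i > 10);

int passesBeforeLoop = passes; // building the query has not read anything yet

int sum = 0;
foreach (int value in query)
{
    sum += value;
}

Console.WriteLine($"sum={sum}, passes={passes}"); // sum=57, passes=1
Console.WriteLine(string.Join(" | ", log));       // reads and parses are interleaved
```

The log shows each line being read and immediately pushed through the rest of the chain before the next line is read, which is why the three operators still add up to one pass.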

Infinite Lists

See my answer to this question for a good example:

C# fibonacci function returning errors

Basically, I implement the Fibonacci sequence using an iterator block that will never stop (at least, not before reaching MaxInt), and then use that implementation in a safe way.
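A minimal sketch of such an iterator (my own illustration, not the code from the linked answer): `Fibonacci` is a hypothetical name, it uses `long` rather than `int`, and it never terminates by itself, so the caller bounds it with `Take` or `First`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// An iterator that never ends on its own; callers decide how much to consume.
IEnumerable<long> Fibonacci()
{
    long a = 0, b = 1;
    while (true)
    {
        yield return a;
        // In the default unchecked context this eventually overflows long silently,
        // so callers should only take a bounded prefix.
        (a, b) = (b, a + b);
    }
}

var firstTen = Fibonacci().Take(10).ToList();
Console.WriteLine(string.Join(", ", firstTen)); // 0, 1, 1, 2, 3, 5, 8, 13, 21, 34

long firstOverThousand = Fibonacci().First(n => n > 1000);
Console.WriteLine(firstOverThousand); // 1597
```

Because values are only produced on request, an "infinite" sequence is safe as long as every consumer stops after a finite number of items.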

Improved Semantics and separation of concerns

Again using the file example from above, we can now easily separate the code that reads the file from the code that filters out unneeded lines, and both of those from the code that actually parses the results. That first one, especially, is very reusable.
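Here is a sketch of that separation, with each concern as its own small iterator. `ReadLines`, `SkipNoise`, and `ParseEntries` are hypothetical names, and a `StringReader` over an assumed "LEVEL value" log format stands in for the real file. Two callers reuse the same read/filter/parse pipeline and add their own filters on top.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Assumed sample input standing in for a log file.
string text = "INFO 5\n# comment\nERROR 12\n\nINFO 30\nERROR 7\n";

// Concern 1 (reading): yields raw lines one at a time from any TextReader.
IEnumerable<string> ReadLines(TextReader reader)
{
    string? line;
    while ((line = reader.ReadLine()) != null)
    {
        yield return line;
    }
}

// Concern 2 (filtering): drops blank lines and '#' comments.
IEnumerable<string> SkipNoise(IEnumerable<string> lines) =>
    lines.Where(l => !string.IsNullOrWhiteSpace(l) && !l.StartsWith("#"));

// Concern 3 (parsing): turns "LEVEL value" into a tuple.
IEnumerable<(string Level, int Value)> ParseEntries(IEnumerable<string> lines)
{
    foreach (var line in lines)
    {
        var parts = line.Split(' ');
        yield return (parts[0], int.Parse(parts[1]));
    }
}

// Caller A: total of ERROR values.
int errorTotal = ParseEntries(SkipNoise(ReadLines(new StringReader(text))))
    .Where(e => e.Level == "ERROR")
    .Sum(e => e.Value);

// Caller B: how many entries exceed 10, regardless of level.
int bigValues = ParseEntries(SkipNoise(ReadLines(new StringReader(text))))
    .Count(e => e.Value > 10);

Console.WriteLine($"errorTotal={errorTotal}, bigValues={bigValues}"); // errorTotal=19, bigValues=2
```

Each stage knows nothing about the others, yet the composed pipeline still makes a single lazy pass over the input.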

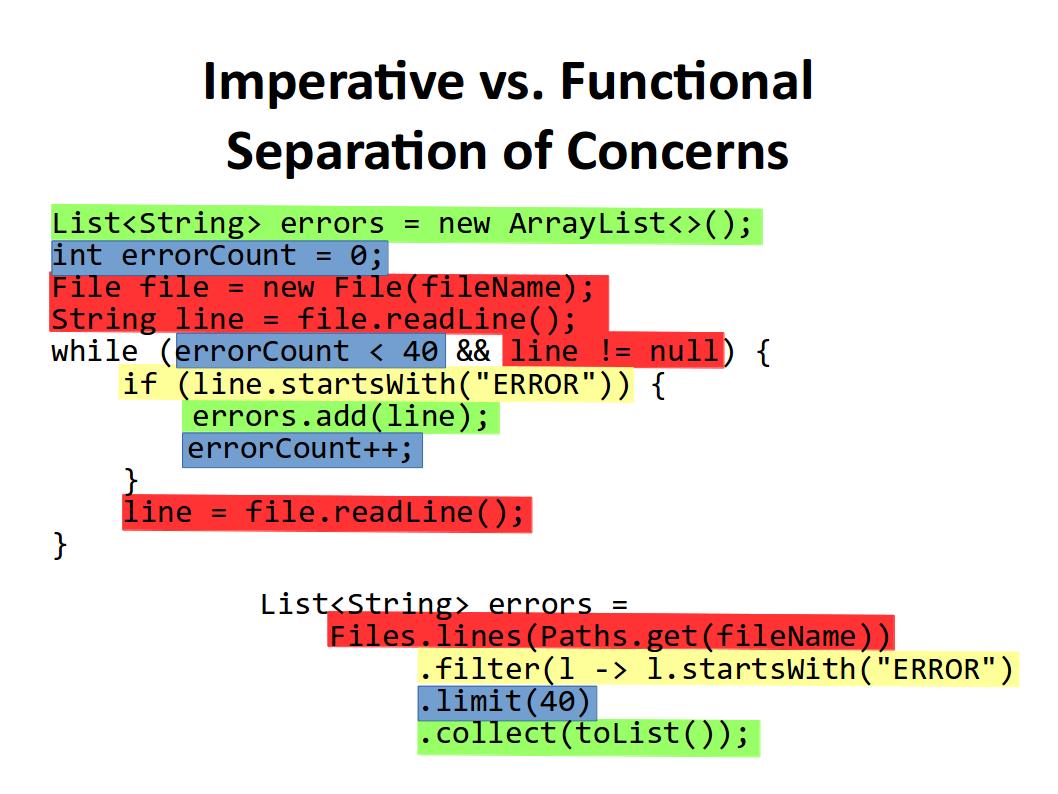

This is one of those things that's much harder to explain with prose than it is to just show with a simple visual (see footnote 1):

If you can't see the image, it shows two versions of the same code, with background highlights for different concerns. The LINQ-style code has all of the colors nicely grouped, while the traditional imperative code has the colors intermingled. The author argues (and I concur) that this result is typical of LINQ-style versus imperative code: LINQ does a better job of organizing your code so that each section flows cleanly into the next.

1 I believe this to be the original source: https://twitter.com/mariofusco/status/571999216039542784. Also note that this code is Java, but the C# would be similar.

-

Sometimes the sequences you need to return are just too large to fit in memory. For example, about 3 months ago I took part in a data migration project between MS SQL databases. The data was exported in XML format, and `yield return` turned out to be quite useful together with `XmlReader`; it made programming much easier. For example, suppose a file had 1000 `Customer` elements. If you just read this file into memory, you have to hold all of them in memory at the same time, even if they are handled sequentially. Instead, you can use an iterator to traverse the collection one element at a time, in which case you only need memory for a single element.

As it turned out, using `XmlReader` was the only way to make the application work for our project. It ran for a long time, but at least it did not hang the entire system or throw an `OutOfMemoryException`. Of course, you can work with `XmlReader` without `yield` iterators, but iterators made my life much easier (I would not have written the import code so quickly and without trouble). See this page to learn how `yield` iterators are used to solve real problems (not just academic ones with infinite sequences).
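A minimal sketch of the pattern described above, not the actual migration code: `ReadCustomers` is a hypothetical method, and the XML shape (`Customer` elements with `Id` and `Name` attributes) is assumed. `XmlReader` does the forward-only streaming; `yield return` hands each customer to the caller as soon as it has been read.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Xml;

// Streams <Customer> elements one at a time, so only the current customer is in memory.
IEnumerable<(int Id, string Name)> ReadCustomers(TextReader source)
{
    // Disposed when the caller's foreach completes or breaks out early.
    using var reader = XmlReader.Create(source);
    while (reader.ReadToFollowing("Customer"))
    {
        int id = int.Parse(reader.GetAttribute("Id")!);
        string name = reader.GetAttribute("Name") ?? "";
        yield return (id, name);
    }
}

string xml =
    "<Customers>" +
    "<Customer Id='1' Name='Ann'/>" +
    "<Customer Id='2' Name='Bob'/>" +
    "<Customer Id='3' Name='Cy'/>" +
    "</Customers>";

var names = new List<string>();
foreach (var customer in ReadCustomers(new StringReader(xml)))
{
    // In a real import, this is where each customer would be written to the target database.
    names.Add(customer.Name);
}

Console.WriteLine(string.Join(", ", names)); // Ann, Bob, Cy
```

The calling code reads like a loop over an in-memory collection, while the iterator keeps the `XmlReader` state and cleanup out of the caller's way.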

-

Here's my previous accepted answer to exactly the same question:

Yield keyword value added?

Another way to look at iterator methods is that they do the hard work of turning an algorithm "inside out". Consider a parser. It pulls text from a stream, looks for patterns in it and generates a high-level logical description of the content.

Now, I can make this easy for myself as a parser author by taking the SAX approach, in which I have a callback interface that I notify whenever I find the next piece of the pattern. So in the case of SAX, each time I find the start of an element, I call the `beginElement` method, and so on.

But this creates trouble for my users. They have to implement the handler interface, and so they have to write a state machine class that responds to the callback methods. This is hard to get right, so the easiest thing to do is use a stock implementation that builds a DOM tree, and then they will have the convenience of being able to walk the tree. But then the whole structure gets buffered up in memory, which is not good.

But how about instead I write my parser as an iterator method?

```csharp
IEnumerable<LanguageElement> Parse(Stream stream)
{
    // imperative code that pulls from the stream and occasionally
    // does things like:

    yield return new BeginStatement("if");

    // and so on...
}
```

That will be no harder to write than the callback-interface approach: just `yield return` an object derived from my `LanguageElement` base class instead of calling a callback method.

The user can now use `foreach` to loop through my parser's output, so they get a very convenient imperative programming interface.
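To make the idea concrete, here is a tiny, runnable tokenizer written the same way. It is only a sketch: a `(Kind, Text)` tuple stands in for the hypothetical `LanguageElement` hierarchy, and `Parse`/`Classify` are illustrative names. The parser body reads characters imperatively and yields each element as soon as it is complete, while the caller simply uses `foreach`.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text;

// Producer side: ordinary imperative code that pulls characters from the reader.
IEnumerable<(string Kind, string Text)> Parse(TextReader input)
{
    var word = new StringBuilder();
    int c;
    while ((c = input.Read()) != -1)
    {
        char ch = (char)c;
        if (char.IsLetterOrDigit(ch))
        {
            word.Append(ch); // still inside a word: keep pulling characters
            continue;
        }
        if (word.Length > 0)
        {
            yield return Classify(word.ToString()); // a word just ended
            word.Clear();
        }
        if (!char.IsWhiteSpace(ch))
        {
            yield return ("Symbol", ch.ToString());
        }
    }
    if (word.Length > 0)
    {
        yield return Classify(word.ToString()); // word at the very end of the input
    }
}

(string Kind, string Text) Classify(string w) =>
    (w is "if" or "else" or "while") ? ("Keyword", w) : ("Identifier", w);

// Consumer side: a plain foreach, with no callbacks or hand-written state machine.
var elements = new List<(string Kind, string Text)>();
foreach (var (kind, text) in Parse(new StringReader("if (x) y;")))
{
    Console.WriteLine($"{kind}: {text}");
    elements.Add((kind, text));
}
```

Because the caller pulls elements on demand, breaking out of the `foreach` early also stops the parser from reading any more input.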

The result is that both sides of a custom API look like they're in control, and hence are easier to write and understand.

-

Take a look at this discussion on Eric White's blog (excellent blog by the way) on lazy versus eager evaluation.
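A small sketch of the difference that discussion covers, using hypothetical `SquaresEager` and `SquaresLazy` methods: the list-returning version does all of its work up front, while the iterator does only as much as the caller consumes.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var log = new List<string>();

// Eager: builds the whole list before the caller sees anything.
List<int> SquaresEager(int count)
{
    var result = new List<int>();
    for (int i = 1; i <= count; i++)
    {
        log.Add($"eager {i}");
        result.Add(i * i);
    }
    return result;
}

// Lazy: each square is computed only when the caller asks for the next item.
IEnumerable<int> SquaresLazy(int count)
{
    for (int i = 1; i <= count; i++)
    {
        log.Add($"lazy {i}");
        yield return i * i;
    }
}

int firstEager = SquaresEager(1000).First(); // computes all 1000 squares first
int eagerWork = log.Count;

log.Clear();
int firstLazy = SquaresLazy(1000).First();   // computes only the first square
int lazyWork = log.Count;

Console.WriteLine($"eager: {eagerWork} computed, lazy: {lazyWork} computed"); // eager: 1000 computed, lazy: 1 computed
```

Both calls return the same first value; the difference is how much work (and memory) is spent getting there.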
