What is the difference between Q-learning and Value Iteration?

How is Q-learning different from value iteration in reinforcement learning?

I know Q-learning is model-free and that its training samples are transitions (s, a, s', r).


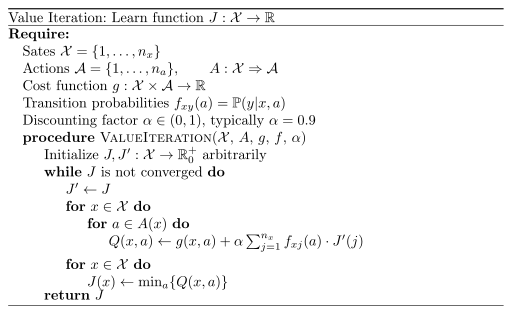

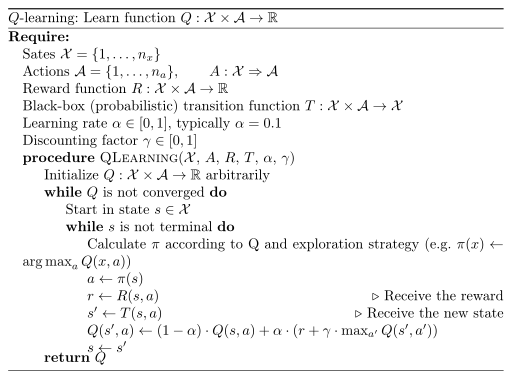

Value iteration is used when you know the transition probabilities, i.e. the probability of getting from state x to state x' with action a. In contrast, you might only have a black box that lets you simulate transitions, without being given the probabilities themselves. In that case you are in the model-free setting, and this is where you apply Q-learning.

What is learned also differs. With value iteration, you learn the expected discounted cost of being in state x and acting optimally from there. With Q-learning, you learn the expected discounted cost of being in state x, applying action a, and acting optimally afterwards.
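To make the two quantities concrete, here is a sketch of the usual definitions in the cost-minimizing convention used above. The symbols are my own notation and not taken from the question: P(x' | x, a) is the transition probability, g(x, a, x') the one-step cost, γ the discount factor, and α a step size. With rewards instead of costs, every min becomes a max.

```latex
\begin{align}
V^*(x)    &= \min_{a} \sum_{x'} P(x' \mid x, a)\,\bigl[\, g(x, a, x') + \gamma\, V^*(x') \,\bigr] \\
Q^*(x, a) &= \sum_{x'} P(x' \mid x, a)\,\bigl[\, g(x, a, x') + \gamma \min_{a'} Q^*(x', a') \,\bigr] \\
V^*(x)    &= \min_{a} Q^*(x, a)
\end{align}
% Q-learning replaces the sum over x' by a single sampled transition (x, a, x', c):
\begin{equation}
Q(x, a) \leftarrow Q(x, a) + \alpha\,\bigl[\, c + \gamma \min_{a'} Q(x', a') - Q(x, a) \,\bigr]
\end{equation}
```

The first two equations need P, which is why value iteration is model-based. The last update only needs a sampled x' and cost c, which is why Q-learning works with a simulator alone.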

Here are the algorithms:
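As a minimal sketch in Python, both can be run on the same toy problem. Everything concrete here is an assumption for illustration: the 2-state MDP in `P`, the discount `GAMMA`, the helper `simulate`, the uniformly random exploration, and the step-size schedule. Costs are minimized, matching the convention above. Value iteration reads the probabilities in `P` directly, while Q-learning only ever calls the simulator.

```python
import random

GAMMA = 0.9  # discount factor (assumed value)

# Hypothetical 2-state, 2-action MDP, made up for illustration.
# P[x][a] lists (probability, next_state, cost) triples.
P = {
    0: {0: [(1.0, 0, 1.0)],
        1: [(0.8, 1, 2.0), (0.2, 0, 2.0)]},
    1: {0: [(1.0, 1, 0.0)],
        1: [(1.0, 0, 1.0)]},
}
STATES = [0, 1]
ACTIONS = [0, 1]


def value_iteration(P, gamma, tol=1e-8):
    """Model-based: sweeps over states using the known probabilities."""
    V = {x: 0.0 for x in P}
    while True:
        delta = 0.0
        for x in P:
            # Bellman backup: expected cost of each action, keep the cheapest.
            best = min(
                sum(p * (cost + gamma * V[x2]) for p, x2, cost in P[x][a])
                for a in P[x]
            )
            delta = max(delta, abs(best - V[x]))
            V[x] = best  # in-place update within the sweep
        if delta < tol:
            return V


def simulate(x, a, rng):
    """Black-box simulator: returns one sampled (next_state, cost) pair."""
    outcomes = P[x][a]
    weights = [p for p, _, _ in outcomes]
    _, x_next, cost = rng.choices(outcomes, weights=weights)[0]
    return x_next, cost


def q_learning(step, states, actions, gamma, n_steps=50_000, seed=0):
    """Model-free: learns Q(x, a) from sampled transitions (x, a, x', cost)."""
    rng = random.Random(seed)
    Q = {(x, a): 0.0 for x in states for a in actions}
    visits = {key: 0 for key in Q}
    x = rng.choice(states)
    for _ in range(n_steps):
        # Q-learning is off-policy, so this sketch explores uniformly at
        # random; epsilon-greedy is the more common choice in practice.
        a = rng.choice(actions)
        x_next, cost = step(x, a, rng)
        visits[(x, a)] += 1
        alpha = visits[(x, a)] ** -0.7  # decaying step size (assumed schedule)
        target = cost + gamma * min(Q[(x_next, b)] for b in actions)
        Q[(x, a)] += alpha * (target - Q[(x, a)])
        x = x_next
    return Q


V = value_iteration(P, GAMMA)
Q = q_learning(simulate, STATES, ACTIONS, GAMMA)
for x in STATES:
    # State value from value iteration vs. min over actions of the learned Q.
    print(x, round(V[x], 3), round(min(Q[(x, a)] for a in ACTIONS), 3))
```

With enough samples, the minimum of Q(x, a) over a should approach V(x), so both methods end up with the same greedy policy. The difference is that value iteration gets there by exact expectation over the model and Q-learning by averaging noisy samples.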

I'm currently writing down quite a bit about reinforcement learning for an exam. You might also be interested in my lecture notes. However, they are mostly in German.
