SQL: Optimizing BETWEEN clause

I wrote a statement that takes almost an hour to run, so I am asking for help to make it faster. So here we go:

I am making an inner join of two tables:


This is quite a common problem.

Plain

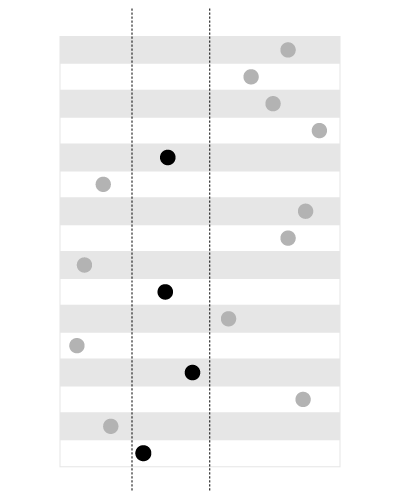

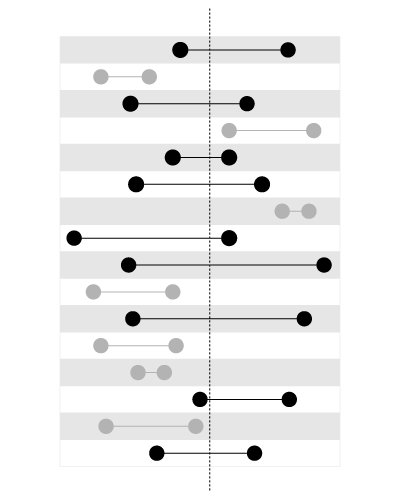

B-Treeindexes are not good for the queries like this:SELECT measures.measure as measure, measures.time as time, intervals.entry_time as entry_time, intervals.exit_time as exit_time FROM intervals JOIN measures ON measures.time BETWEEN intervals.entry_time AND intervals.exit_time ORDER BY time ASCAn index is good for searching the values within the given bounds, like this:

, but not for searching the bounds containing the given value, like this:

This article in my blog explains the problem in more detail:

- Adjacency list vs. nested sets: MySQL

(the nested sets model deals with a similar type of predicate).
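The asymmetry between the two kinds of search can be made concrete with a minimal Python sketch. It models a B-Tree index as a sorted list searched with `bisect`. That is an assumption for illustration: a real B-Tree is paged, but the ordering argument is the same. Finding the values within given bounds takes two binary searches and returns a contiguous slice. An index on `entry_time` alone only narrows the search for the bounds containing a value down to the prefix of rows with `entry_time <= t`, and `exit_time` still has to be checked for every row in that prefix. The function names are hypothetical.

```python
from bisect import bisect_left, bisect_right

def values_within(sorted_times, lo, hi):
    """Index-friendly lookup: every value v with lo <= v <= hi.

    Two binary searches find a contiguous slice of the sorted keys.
    """
    start = bisect_left(sorted_times, lo)
    end = bisect_right(sorted_times, hi)
    return sorted_times[start:end]

def bounds_containing(intervals_by_entry, t):
    """Index-unfriendly lookup: every (entry, exit) with entry <= t <= exit.

    Sorting by entry only narrows the search to the prefix entry <= t;
    exit has to be checked row by row over that whole prefix.
    Returns the matches and the number of rows that had to be examined.
    """
    # Keys extracted here for brevity; a real index already stores them.
    entries = [entry for entry, _ in intervals_by_entry]
    prefix_end = bisect_right(entries, t)
    candidates = intervals_by_entry[:prefix_end]
    matches = [(entry, exit_) for entry, exit_ in candidates if exit_ >= t]
    return matches, len(candidates)

times = [1, 3, 4, 7, 9, 12, 15]
print(values_within(times, 3, 9))         # [3, 4, 7, 9]

intervals = [(0, 2), (2, 5), (6, 8), (10, 14)]
print(bounds_containing(intervals, 7))    # ([(6, 8)], 3)
```

The later `t` is, the longer the prefix becomes. For a value in the last interval, almost the whole table is examined to return a single row.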

- You can create an index on `measures.time`. This way `intervals` will lead the join, and an index range scan on `time` will be used inside the nested loops. This will require sorting on `time`.
- You can create a spatial index on `intervals` (available in MySQL with MyISAM storage) that would include `start` and `end` in one geometry column. This way `measures` can lead the join and no sorting will be needed. Spatial indexes, however, are slower, so this will only be efficient if you have few measures but many intervals.
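The shape of the first option can be observed in SQLite, which is used here only as a stand-in because it ships with Python's `sqlite3` module. The question's engine is Oracle, and Oracle's plans and hints differ. In SQLite, `CROSS JOIN` keeps the left table in the outer loop, playing roughly the role of Oracle's `LEADING` hint, and `EXPLAIN QUERY PLAN` lists the loops from outer to inner. The sketch assumes the column names from the query above. The sample data and the function name are made up.

```python
import sqlite3

def intervals_leading_plan():
    """Run the join with `intervals` pinned as the outer table; return rows and plan."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        CREATE TABLE intervals (entry_time REAL NOT NULL, exit_time REAL NOT NULL);
        CREATE TABLE measures (time REAL NOT NULL, measure REAL NOT NULL);
        CREATE INDEX ix_measures_time ON measures (time);
    """)
    # Made-up, non-overlapping sample data.
    con.executemany("INSERT INTO intervals VALUES (?, ?)", [(0, 2), (5, 7)])
    con.executemany("INSERT INTO measures VALUES (?, ?)",
                    [(1, 10.0), (3, 20.0), (6, 30.0), (2, 40.0)])
    # CROSS JOIN makes SQLite keep the left table (intervals) in the outer loop.
    query = """
        SELECT measures.measure, measures.time,
               intervals.entry_time, intervals.exit_time
        FROM intervals CROSS JOIN measures
        WHERE measures.time BETWEEN intervals.entry_time AND intervals.exit_time
        ORDER BY measures.time
    """
    rows = con.execute(query).fetchall()
    # The fourth column of each plan row is the human-readable detail.
    plan = [row[3] for row in con.execute("EXPLAIN QUERY PLAN " + query)]
    con.close()
    return rows, plan

rows, plan = intervals_leading_plan()
for line in plan:
    print(line)
print(rows)
```

The plan should show an outer scan of `intervals`, an index search on `measures` through `ix_measures_time` for each interval, and a separate temporary B-Tree for the `ORDER BY`. That last step is the extra sort the first option requires.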

Since you have few intervals but many measures, just make sure you have an index on `measures.time`:

```sql
CREATE INDEX ix_measures_time ON measures (time)
```

Update:

Here's a sample script to test:

```sql
BEGIN
        DBMS_RANDOM.seed(20091223);
END;
/

CREATE TABLE intervals (
        entry_time NOT NULL,
        exit_time NOT NULL
)
AS
SELECT  TO_DATE('23.12.2009', 'dd.mm.yyyy') - level,
        TO_DATE('23.12.2009', 'dd.mm.yyyy') - level + DBMS_RANDOM.value
FROM    dual
CONNECT BY
        level <= 1500
/

CREATE UNIQUE INDEX ux_intervals_entry ON intervals (entry_time)
/

CREATE TABLE measures (
        time NOT NULL,
        measure NOT NULL
)
AS
SELECT  TO_DATE('23.12.2009', 'dd.mm.yyyy') - level / 720,
        CAST(DBMS_RANDOM.value * 10000 AS NUMBER(18, 2))
FROM    dual
CONNECT BY
        level <= 1080000
/

ALTER TABLE measures ADD CONSTRAINT pk_measures_time PRIMARY KEY (time)
/

CREATE INDEX ix_measures_time_measure ON measures (time, measure)
/
```

This query:

```sql
SELECT  SUM(measure), AVG(time - TO_DATE('23.12.2009', 'dd.mm.yyyy'))
FROM    (
        SELECT  *
        FROM    (
                SELECT  /*+ ORDERED USE_NL(intervals measures) */
                        *
                FROM    intervals
                JOIN    measures
                ON      measures.time BETWEEN intervals.entry_time AND intervals.exit_time
                ORDER BY
                        time
                )
        WHERE   rownum <= 500000
        )
```

uses `NESTED LOOPS` and returns in 1.7 seconds.

This query:

```sql
SELECT  SUM(measure), AVG(time - TO_DATE('23.12.2009', 'dd.mm.yyyy'))
FROM    (
        SELECT  *
        FROM    (
                SELECT  /*+ ORDERED USE_MERGE(intervals measures) */
                        *
                FROM    intervals
                JOIN    measures
                ON      measures.time BETWEEN intervals.entry_time AND intervals.exit_time
                ORDER BY
                        time
                )
        WHERE   rownum <= 500000
        )
```

uses `MERGE JOIN`, and I had to stop it after 5 minutes.

Update 2:

You will most probably need to force the engine to use the correct table order in the join using a hint like this:

```sql
SELECT  /*+ LEADING (intervals) USE_NL(intervals, measures) */
        measures.measure as measure,
        measures.time as time,
        intervals.entry_time as entry_time,
        intervals.exit_time as exit_time
FROM    intervals
JOIN    measures
ON      measures.time BETWEEN intervals.entry_time AND intervals.exit_time
ORDER BY
        time ASC
```

Oracle's optimizer is not smart enough to see that the intervals do not intersect. That's why it will most probably use `measures` as the leading table (which would be a wise decision should the intervals intersect).

Update 3:

```sql
WITH    splits AS
        (
        SELECT  /*+ MATERIALIZE */
                entry_range, exit_range,
                exit_range - entry_range + 1 AS range_span,
                entry_time, exit_time
        FROM    (
                SELECT  TRUNC((entry_time - TO_DATE(1, 'J')) * 2) AS entry_range,
                        TRUNC((exit_time - TO_DATE(1, 'J')) * 2) AS exit_range,
                        entry_time,
                        exit_time
                FROM    intervals
                )
        ),
        upper AS
        (
        SELECT  /*+ MATERIALIZE */
                MAX(range_span) AS max_range
        FROM    splits
        ),
        ranges AS
        (
        SELECT  /*+ MATERIALIZE */
                level AS chunk
        FROM    upper
        CONNECT BY
                level <= max_range
        ),
        tiles AS
        (
        SELECT  /*+ MATERIALIZE USE_MERGE (r s) */
                entry_range + chunk - 1 AS tile,
                entry_time,
                exit_time
        FROM    ranges r
        JOIN    splits s
        ON      chunk <= range_span
        )
SELECT  /*+ LEADING(t) USE_HASH(m t) */
        SUM(LENGTH(stuffing))
FROM    tiles t
JOIN    measures m
ON      TRUNC((m.time - TO_DATE(1, 'J')) * 2) = tile
        AND m.time BETWEEN t.entry_time AND t.exit_time
```

This query splits the time axis into ranges and uses a `HASH JOIN` to join the measures to the intervals on the range values, with exact filtering afterwards.

See this article in my blog for a more detailed explanation of how it works:

- Oracle: joining timestamps and time intervals
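The tiling idea behind the last query can be sketched in Python under some simplifying assumptions. Timestamps are plain floats measured in days, standing in for `time - TO_DATE(1, 'J')`. Tiles are half a day wide, mirroring the `* 2` in the query. A `dict` stands in for the hash table of the `HASH JOIN`. `tile_of` and `tiled_hash_join` are hypothetical names for illustration, not the code from the blog article.

The sketch works in three steps. Each interval is registered under every tile it overlaps, which is what `splits`, `ranges` and `tiles` produce. Each measure probes only its own tile. The `BETWEEN` check then does the fine filtering.

```python
import math
from collections import defaultdict

TILES_PER_DAY = 2  # half-day tiles, mirroring TRUNC(... * 2) in the query

def tile_of(t):
    """Tile number for a timestamp given in (fractional) days."""
    return math.floor(t * TILES_PER_DAY)

def tiled_hash_join(intervals, measures):
    """intervals: [(entry_time, exit_time)], measures: [(time, measure)].

    Returns (measure, time, entry_time, exit_time) rows ordered by time.
    """
    # Build side (the tiles CTE): register each interval under every tile it spans.
    table = defaultdict(list)
    for entry_time, exit_time in intervals:
        for tile in range(tile_of(entry_time), tile_of(exit_time) + 1):
            table[tile].append((entry_time, exit_time))
    # Probe side: one hash lookup per measure on its own tile, then the exact
    # BETWEEN check, since a tile can also cover times outside the interval.
    rows = []
    for t, m in measures:
        for entry_time, exit_time in table.get(tile_of(t), []):
            if entry_time <= t <= exit_time:
                rows.append((m, t, entry_time, exit_time))
    rows.sort(key=lambda row: row[1])
    return rows

intervals = [(0.1, 0.3), (1.2, 2.6)]
measures = [(0.2, "a"), (0.4, "b"), (1.9, "c"), (2.6, "d")]
print(tiled_hash_join(intervals, measures))
# [('a', 0.2, 0.1, 0.3), ('c', 1.9, 1.2, 2.6), ('d', 2.6, 1.2, 2.6)]
```

Each interval is stored once per tile, and each measure probes exactly one tile, so every matching pair is found exactly once. The tile width is a trade-off. Narrow tiles make long intervals span many tiles and grow the build side. Wide tiles leave more candidates per probe for the exact filter.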
