HDFS

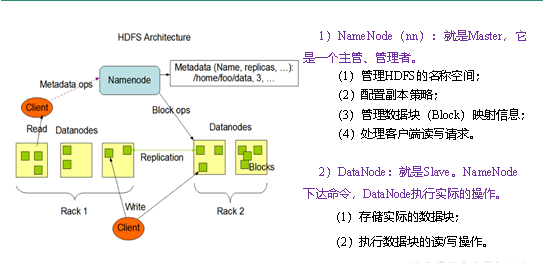

HDFS Architecture

HDFS File Block Size

HDFS Shell Operations

1. Basic syntax

bin/hadoop fs <command>, or bin/hdfs dfs <command>

dfs is an implementation class of fs.

2. Commands

$ bin/hadoop fs
    [-appendToFile <localsrc> ... <dst>]
    [-cat [-ignoreCrc] <src> ...]
    [-checksum <src> ...]
    [-chgrp [-R] GROUP PATH...]
    [-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]
    [-chown [-R] [OWNER][:[GROUP]] PATH...]
    [-copyFromLocal [-f] [-p] <localsrc> ... <dst>]
    [-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]
    [-count [-q] <path> ...]
    [-cp [-f] [-p] <src> ... <dst>]
    [-createSnapshot <snapshotDir> [<snapshotName>]]
    [-deleteSnapshot <snapshotDir> <snapshotName>]
    [-df [-h] [<path> ...]]
    [-du [-s] [-h] <path> ...]
    [-expunge]
    [-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]
    [-getfacl [-R] <path>]
    [-getmerge [-nl] <src> <localdst>]
    [-help [cmd ...]]
    [-ls [-d] [-h] [-R] [<path> ...]]
    [-mkdir [-p] <path> ...]
    [-moveFromLocal <localsrc> ... <dst>]
    [-moveToLocal <src> <localdst>]
    [-mv <src> ... <dst>]
    [-put [-f] [-p] <localsrc> ... <dst>]
    [-renameSnapshot <snapshotDir> <oldName> <newName>]
    [-rm [-f] [-r|-R] [-skipTrash] <src> ...]
    [-rmdir [--ignore-fail-on-non-empty] <dir> ...]
    [-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]
    [-setrep [-R] [-w] <rep> <path> ...]
    [-stat [format] <path> ...]
    [-tail [-f] <file>]
    [-test -[defsz] <path>]
    [-text [-ignoreCrc] <src> ...]
    [-touchz <path> ...]
    [-usage [cmd ...]]

3. Hands-on practice

(0) Start the Hadoop cluster

$ sbin/start-dfs.sh
$ sbin/start-yarn.sh

(1) -help: show the help text for a command

$ hadoop fs -help rm

(2) -ls: list directory contents

$ hadoop fs -ls /

(3) -mkdir: create a directory on HDFS

$ hadoop fs -mkdir -p /sanguo/shuguo

(4) -moveFromLocal: move a file from the local filesystem to HDFS

$ touch kongming.txt
$ hadoop fs -moveFromLocal ./kongming.txt /sanguo/shuguo

(5) -appendToFile: append a local file to the end of an existing HDFS file

$ touch liubei.txt
$ vi liubei.txt
Enter:
san gu mao lu
$ hadoop fs -appendToFile liubei.txt /sanguo/shuguo/kongming.txt

(6) -cat: display the contents of a file

$ hadoop fs -cat /sanguo/shuguo/kongming.txt

(7) -chgrp, -chmod, -chown: change a file's group, permissions, or owner, used the same way as in the Linux filesystem

$ hadoop fs -chmod 666 /sanguo/shuguo/kongming.txt
$ hadoop fs -chown atguigu:atguigu /sanguo/shuguo/kongming.txt
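As a small aid for reading octal modes such as the 666 used above, the sketch below expands each octal digit into its rwx triplet (owner, group, others). The helper names octal_digit_to_rwx and mode_to_rwx are hypothetical and are not part of Hadoop; the script runs locally and does not touch HDFS.

```shell
# Hypothetical helper: expand one octal digit (0-7) into its rwx triplet.
octal_digit_to_rwx() {
  case "$1" in
    0) echo '---' ;;
    1) echo '--x' ;;
    2) echo '-w-' ;;
    3) echo '-wx' ;;
    4) echo 'r--' ;;
    5) echo 'r-x' ;;
    6) echo 'rw-' ;;
    7) echo 'rwx' ;;
  esac
}

# Hypothetical helper: expand a whole octal mode, one digit at a time.
mode_to_rwx() {
  mode=$1
  out=''
  while [ -n "$mode" ]; do
    digit=${mode%"${mode#?}"}   # first character of the remaining mode
    out="$out$(octal_digit_to_rwx "$digit")"
    mode=${mode#?}              # drop the character just handled
  done
  echo "$out"
}

mode_to_rwx 666   # prints rw-rw-rw-
```

So -chmod 666 grants read and write, but not execute, to the owner, the group, and everyone else.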

(8) -copyFromLocal: copy a file from the local filesystem to an HDFS path

$ hadoop fs -copyFromLocal README.txt /

(9) -copyToLocal: copy a file from HDFS to the local filesystem

$ hadoop fs -copyToLocal /sanguo/shuguo/kongming.txt ./

(10) -cp: copy from one HDFS path to another HDFS path

$ hadoop fs -cp /sanguo/shuguo/kongming.txt /zhuge.txt

(11) -mv: move files between HDFS directories

$ hadoop fs -mv /zhuge.txt /sanguo/shuguo/

(12) -get: equivalent to -copyToLocal; download a file from HDFS to the local filesystem

$ hadoop fs -get /sanguo/shuguo/kongming.txt ./

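(13) -getmerge: merge several files and download them as one; for example, the HDFS directory /user/atguigu/test contains several files: log.1, log.2, log.3, ...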

$ hadoop fs -getmerge /user/atguigu/test/* ./zaiyiqi.txt
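To make the effect of -getmerge concrete, here is a purely local analogy: several small files are concatenated into one output file with cat. Nothing here talks to HDFS; the scratch directory, the file contents, and the variable names are made up for illustration, and only the file names log.1 to log.3 and zaiyiqi.txt echo the example above.

```shell
# Local analogy only: plain files and cat stand in for HDFS and -getmerge.
workdir=$(mktemp -d)   # scratch directory, a stand-in for /user/atguigu/test

# Three small source files with made-up contents.
printf 'entry from log.1\n' > "$workdir/log.1"
printf 'entry from log.2\n' > "$workdir/log.2"
printf 'entry from log.3\n' > "$workdir/log.3"

# Concatenate every source file into a single output file.
merged="$workdir/zaiyiqi.txt"
cat "$workdir"/log.* > "$merged"

# Show the merged result, keep a copy in a variable, then clean up.
merged_text=$(cat "$merged")
echo "$merged_text"
rm -rf "$workdir"
```

The merged file holds the three lines one after another, which is the shape of result to expect in ./zaiyiqi.txt after the real command.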

(14) -put: equivalent to -copyFromLocal

$ hadoop fs -put ./zaiyiqi.txt /user/atguigu/test/

(15) -tail: display the end of a file

$ hadoop fs -tail /sanguo/shuguo/kongming.txt

(16) -rm: delete a file (with -r, a directory)

$ hadoop fs -rm /user/atguigu/test/jinlian2.txt

(17) -rmdir: delete an empty directory

$ hadoop fs -mkdir /test
$ hadoop fs -rmdir /test

(18) -du: show the size of a directory and its contents

$ hadoop fs -du -s -h /user/atguigu/test
2.7 K  /user/atguigu/test

$ hadoop fs -du -h /user/atguigu/test
1.3 K  /user/atguigu/test/README.txt
15     /user/atguigu/test/jinlian.txt
1.4 K  /user/atguigu/test/zaiyiqi.txt

(19) -setrep: set the replication factor of a file in HDFS

$ hadoop fs -setrep 10 /sanguo/shuguo/kongming.txt

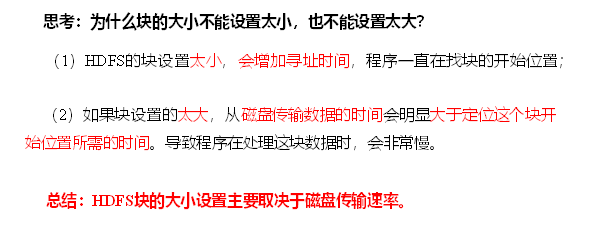

Figure: HDFS replication factor

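The replication factor set here is only recorded in the NameNode's metadata; whether that many replicas actually exist depends on the number of DataNodes. With only 3 machines there can be at most 3 replicas; the replica count reaches 10 only once the cluster grows to 10 nodes.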
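The relationship between the requested replication factor and the number of DataNodes can be sketched as a simple cap. This is a simplified model that assumes each DataNode holds at most one replica of a given block and that every DataNode is healthy; the function name effective_replicas is hypothetical and is not a Hadoop command.

```shell
# Hypothetical helper: the number of replicas that can actually exist is
# the smaller of the requested factor and the number of DataNodes.
effective_replicas() {
  requested=$1
  datanodes=$2
  if [ "$requested" -lt "$datanodes" ]; then
    echo "$requested"
  else
    echo "$datanodes"
  fi
}

effective_replicas 10 3    # 3 DataNodes: prints 3
effective_replicas 10 10   # 10 DataNodes: prints 10
```

With -setrep 10 on a 3-node cluster, the metadata records 10 but only 3 replicas can be placed until more DataNodes join.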